Chapter 3 Missing values and imputation

3.1 Load data

3.2 Examine predictor missingness

3.2.1 Missingness table

# Look at missingness among predictors.

missing = is.na(data[, vars$predictors])

# This will be a zero-row tibble if there is no missingness in the data.

missing_df =

data.frame(var = colnames(missing),

missing_mean = colMeans(missing),

missing_count = colSums(missing)) %>%

filter(missing_count > 0) %>% arrange(desc(missing_mean))

missing_df## var missing_mean missing_count

## oldpeak oldpeak 0.02310231 7

## ca ca 0.01980198 6

## thal thal 0.01980198 6

## age age 0.01650165 5

## restecg restecg 0.01650165 5

## cp cp 0.01320132 4

## fbs fbs 0.01320132 4

## slope slope 0.01320132 4

## chol chol 0.00990099 3

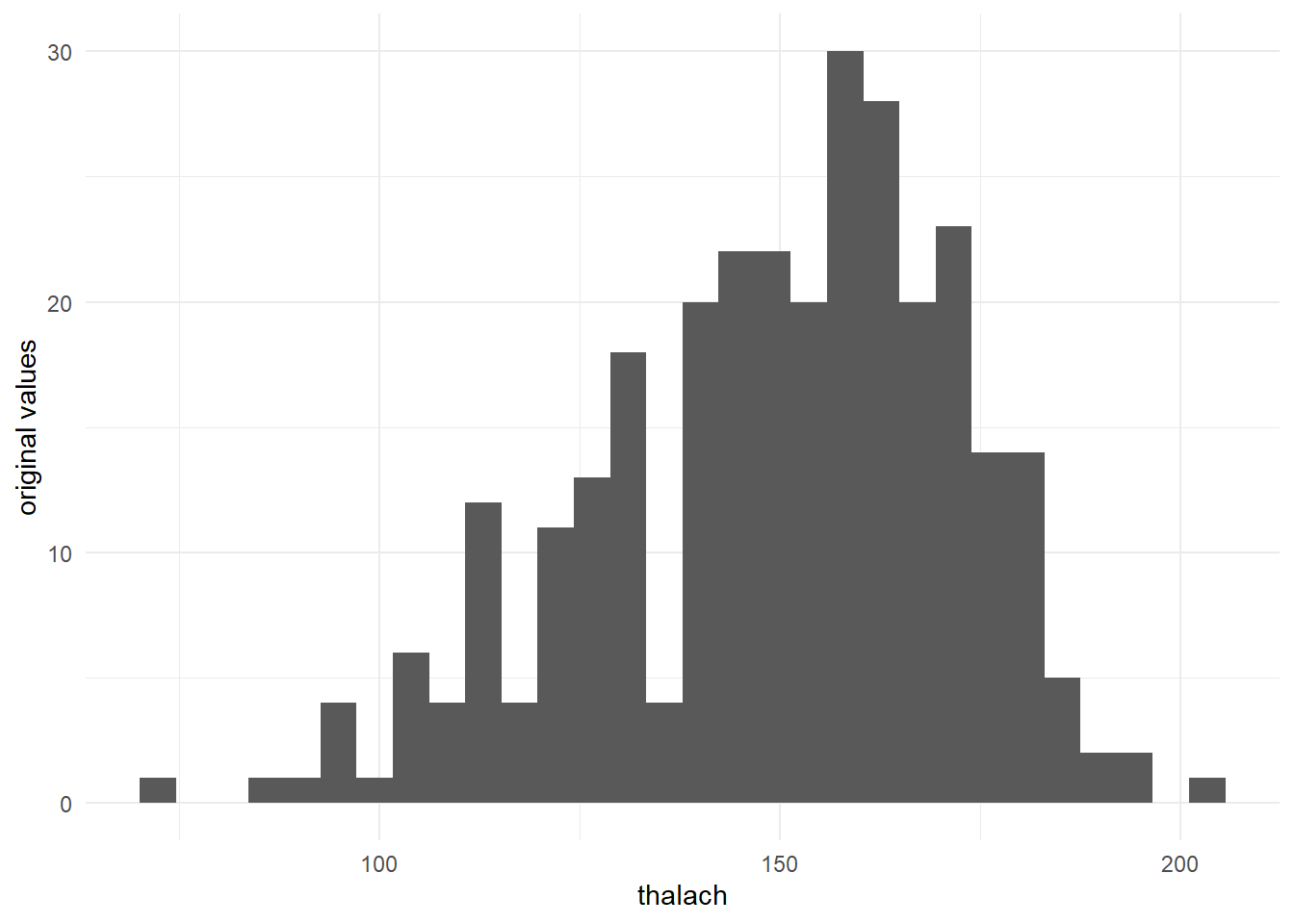

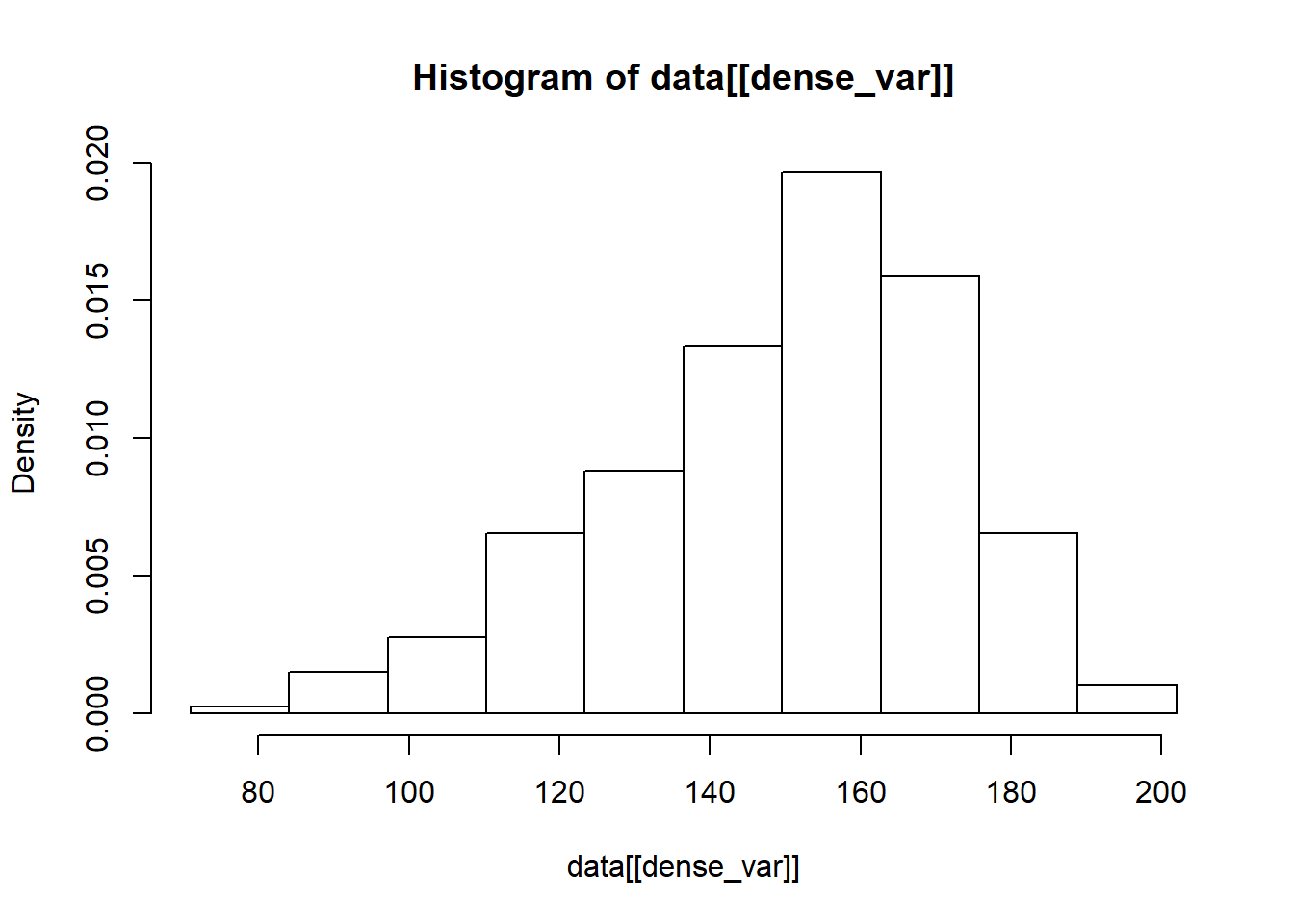

## thalach thalach 0.00990099 3

## sex sex 0.00660066 2

## exang exang 0.00330033 1if (nrow(missing_df) == 0) {

cat("No missinginess found in the predictors.")

} else {

missing_df$missing_mean = paste0(round(missing_df$missing_mean * 100, 1), "%")

missing_df$missing_count = prettyNum(missing_df$missing_count, big.mark = ",")

colnames(missing_df) = c("Variable", "Missing rate", "Missing values")

print({ kab_table = kable(missing_df, format = "latex", digits = c(0, 3, 0),

booktabs = TRUE) })

cat(kab_table %>% kable_styling(latex_options = "striped"),

file = "tables/missingness-table.tex")

}##

## \begin{tabular}{llll}

## \toprule

## & Variable & Missing rate & Missing values\\

## \midrule

## oldpeak & oldpeak & 2.3\% & 7\\

## ca & ca & 2\% & 6\\

## thal & thal & 2\% & 6\\

## age & age & 1.7\% & 5\\

## restecg & restecg & 1.7\% & 5\\

## \addlinespace

## cp & cp & 1.3\% & 4\\

## fbs & fbs & 1.3\% & 4\\

## slope & slope & 1.3\% & 4\\

## chol & chol & 1\% & 3\\

## thalach & thalach & 1\% & 3\\

## \addlinespace

## sex & sex & 0.7\% & 2\\

## exang & exang & 0.3\% & 1\\

## \bottomrule

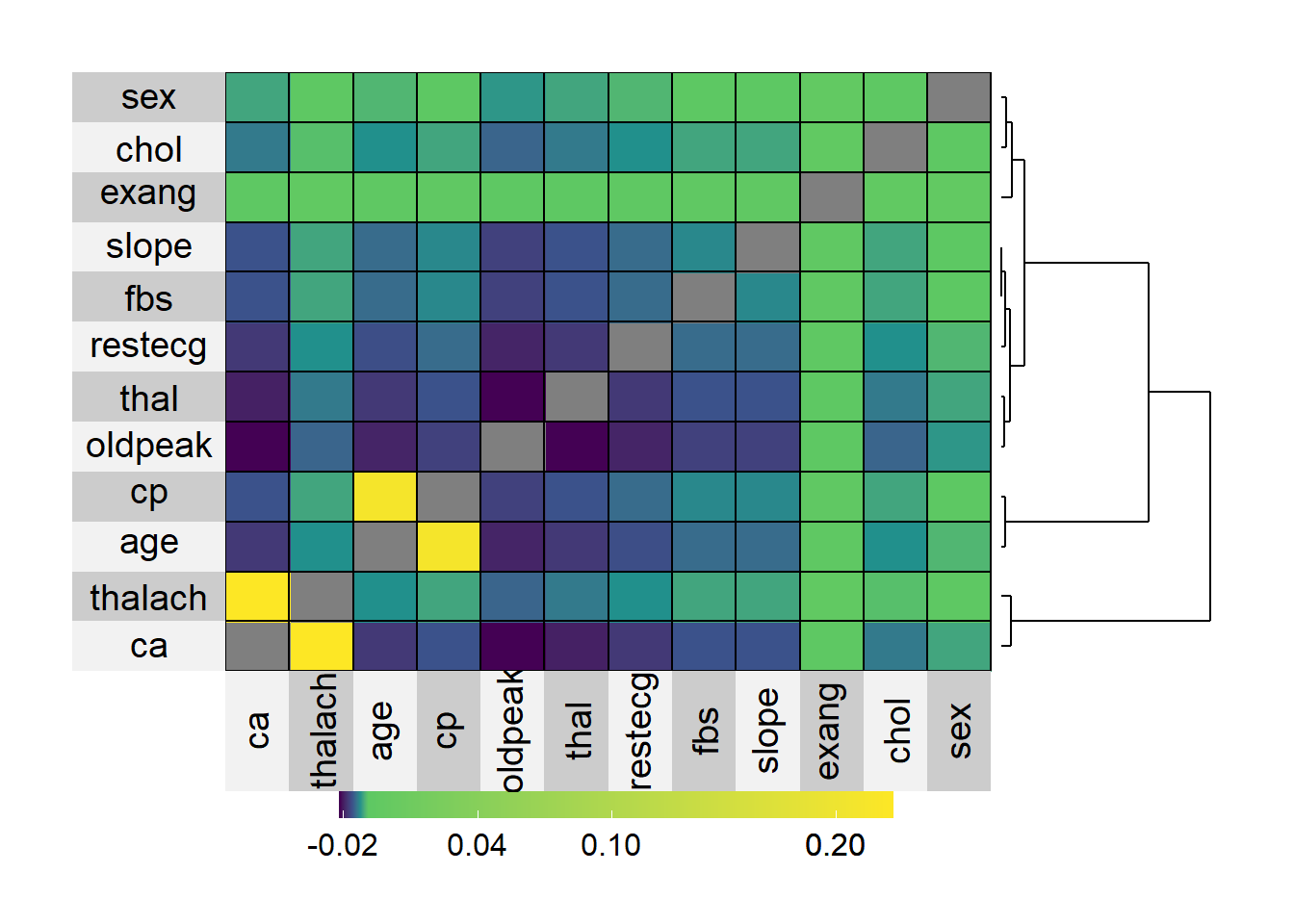

## \end{tabular}3.2.2 Missingness heatmap

if (nrow(missing_df) == 0) {

cat("Skipping missingness heatmap, no missigness found in predictors.")

} else {

# Correlation table of missingness

# Only examine variables with missingness > 0%.

missing2 = is.na(data[, as.character(missing_df$Variable)])

colMeans(missing2)

cor(missing2)

# Correlation matrix of missingness.

(missing_cor = cor(missing2))

# Replace the unit diagonal with NAs so that it doesn't show as yellow.

diag(missing_cor) = NA

# Heatmap of correlation table.

#png("visuals/missingness-superheat.png", height = 600, width = 900)

superheat::superheat(missing_cor,

# Change the angle of the label text

bottom.label.text.angle = 90,

pretty.order.rows = TRUE,

pretty.order.cols = TRUE,

row.dendrogram = TRUE,

scale = FALSE)

#dev.off()

}

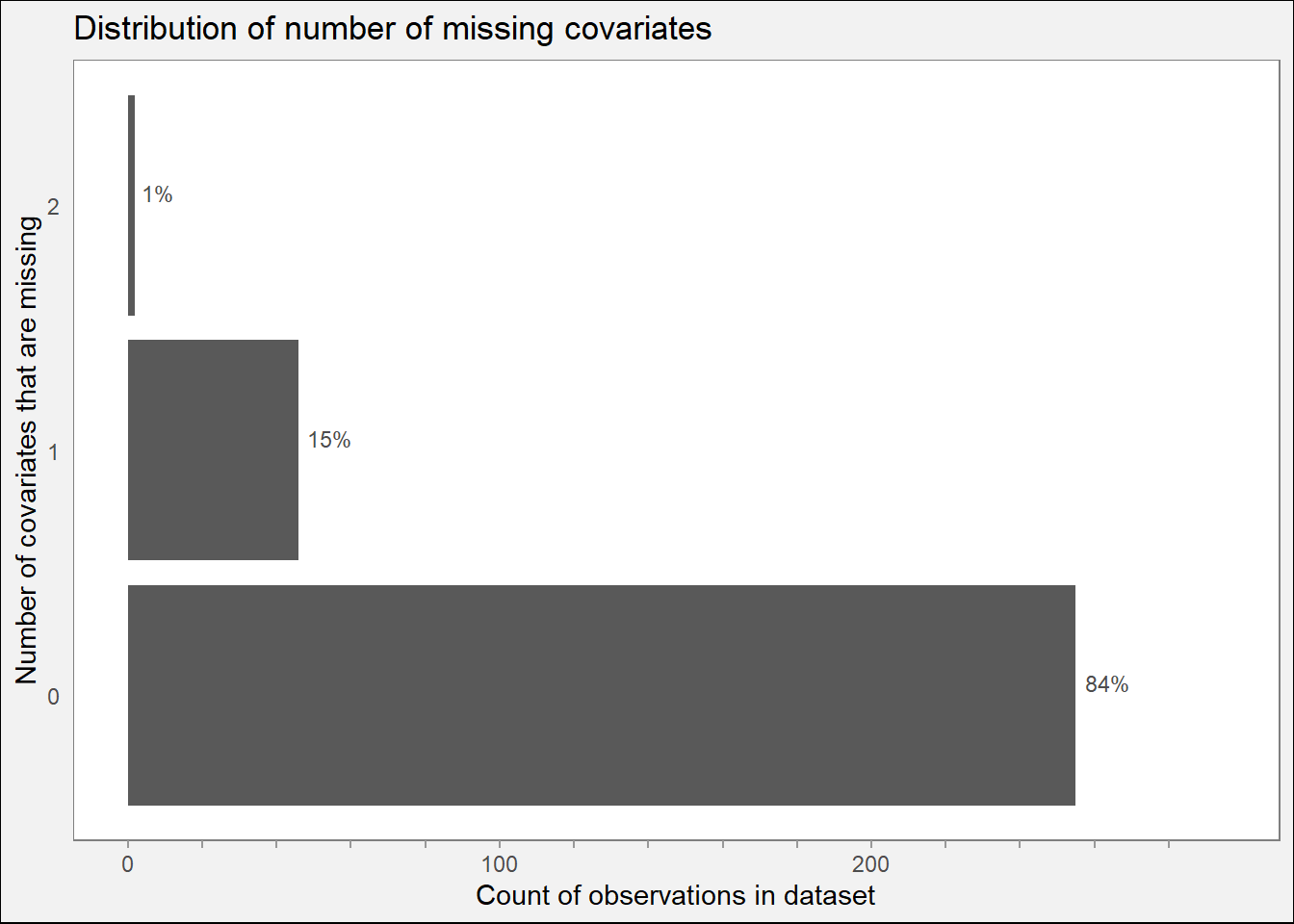

3.2.3 Missingness count plot

if (nrow(missing_df) == 0L) {

cat("Skipping missingness count plot, no missingness found in predictors.")

} else {

# Table with count of missing covariates by observation.

missing_counts = rowSums(missing2)

table(missing_counts)

# Typical observation is missing 6 covariates.

summary(missing_counts)

# Code from:

# https://stackoverflow.com/questions/27850123/ggplot2-have-shorter-tick-marks-for-tick-marks-without-labels?noredirect=1&lq=1

# Major tick marks

major = 100

# Minor tick marks

minor = 20

# Range of x values

# Ensure that we always start at 0.

(range = c(0, 2* minor + sum(missing_counts == as.integer(names(which.max(table(missing_counts)))))))

# Function to insert blank labels

# Borrowed from https://stackoverflow.com/questions/14490071/adding-minor-tick-marks-to-the-x-axis-in-ggplot2-with-no-labels/14490652#14490652

insert_minor <- function(major, n_minor) {

labs <- c(sapply(major, function(x, y) c(x, rep("", y) ), y = round(n_minor)))

labs[1:(length(labs) - n_minor)]

}

# Getting the 'breaks' and 'labels' for the ggplot

n_minor = major / minor - 1

(breaks = seq(min(range), max(range), minor))

(labels = insert_minor(seq(min(range), max(range), major), n_minor))

if (length(breaks) > length(labels)) labels = c(labels, rep("", length(breaks) - length(labels)))

print(ggplot(data.frame(missing_counts), aes(x = missing_counts)) +

geom_bar(aes(y = ..count..)) +

theme_minimal() +

geom_text(aes(label = scales::percent(..prop..), y = ..count..),

stat = "count", hjust = -0.2, size = 3, nudge_x = 0.05,

color = "gray30",

NULL) +

scale_x_continuous(breaks = seq(0, max(table(missing_counts)))) +

scale_y_continuous(breaks = breaks,

labels = ifelse(labels != "", prettyNum(labels, big.mark = ",", preserve.width = "none"), ""),

limits = c(0, max(range))) +

labs(title = "Distribution of number of missing covariates",

x = "Number of covariates that are missing",

y = "Count of observations in dataset") +

# Remove grid axes, add gray background.

# Label each value on x axis.

theme(panel.grid = element_blank(),

axis.ticks.x = element_line(color = "gray60", size = 0.5),

panel.background = element_rect(fill = "white", color = "gray50"),

plot.background = element_rect(fill = "gray95")) +

coord_flip())

ggsave("visuals/missing-count-hist.png", width = 8, height = 4)

# X variables with missingness

print(ncol(missing2))

}

## [1] 123.3 Examine outcome missingness

##

## 0 1

## 138 1653.4 Impute missing predictor values

3.4.1 Missingness indicators

## age sex cp trestbps chol fbs restecg

## 0.01650165 0.00660066 0.01320132 0.00000000 0.00990099 0.01320132 0.01650165

## thalach exang oldpeak slope ca thal

## 0.00990099 0.00330033 0.02310231 0.01320132 0.01980198 0.01980198# First create matrix of missingness indicators for all covariates.

miss_inds =

ck37r::missingness_indicators(data,

skip_vars = c(vars$exclude, vars$outcome),

verbose = TRUE)## Generating 12 missingness indicators.

## Checking for collinearity of indicators.

## Final number of indicators: 12## miss_age miss_sex miss_cp miss_chol miss_fbs miss_restecg

## 0.01650165 0.00660066 0.01320132 0.00990099 0.01320132 0.01650165

## miss_thalach miss_exang miss_oldpeak miss_slope miss_ca miss_thal

## 0.00990099 0.00330033 0.02310231 0.01320132 0.01980198 0.019801983.4.2 Impute to 0

Some variables we want to explicitly set to 0 if they are unobserved.

# Manually impute certain variables to 0 rather than use the sample median (or GLRM).

impute_to_0_vars = c("exang")

# Review missingness one last time for these vars.

colMeans(is.na(data[, impute_to_0_vars, drop = FALSE]))## exang

## 0.00330033## Min. 1st Qu. Median Mean 3rd Qu. Max. NA's

## 0.0000 0.0000 0.0000 0.3278 1.0000 1.0000 1# Impute these variables specifically to 0, rather than sample median (although

# in many cases the median was already 0).

data[, impute_to_0_vars] = lapply(data[, impute_to_0_vars, drop = FALSE], function(col) {

col[is.na(col)] = 0L

col

})

# Confirm we have no more missingness in these vars.

colMeans(is.na(data[, impute_to_0_vars, drop = FALSE]))## exang

## 0We will use generalized low-rank models in h2o.ai software.

3.4.3 GLRM prep

# Subset using var_df$var so that it's in the same order as var_df.

impute_df = data[, var_df$var]

# Convert binary variables to logical

(binary_vars = var_df$var[var_df$type == "binary"])## character(0)for (binary_name in binary_vars) {

impute_df[[binary_name]] = as.logical(impute_df[[binary_name]])

}

# NOTE: these will be turned into factor variables within h2o.

table(sapply(impute_df, class))##

## factor integer numeric

## 5 7 1# Create a dataframe describing the loss function by variable; the first variable must have index = 0

losses = data.frame("index" = seq(ncol(impute_df)) - 1,

"feature" = var_df$var,

"class" = var_df$class,

"type" = var_df$type,

stringsAsFactors = FALSE)

# Update class for binary variables.

for (binary_name in binary_vars) {

losses[var_df$var == binary_name, "class"] = class(impute_df[[binary_name]])

}

losses$loss[losses$class == "numeric"] = "Huber"

losses$loss[losses$class == "integer"] = "Huber"

#losses$loss[losses$class == "integer"] = "Poisson"

losses$loss[losses$class == "factor"] = "Categorical"

losses$loss[losses$type == "binary"] = "Hinge"

# Logistic seems to yield worse reconstruction RMSE overall.

#losses$loss[losses$type == "binary"] = "Logistic"

losses## index feature class type loss

## 1 0 ca factor categorical Categorical

## 2 1 oldpeak numeric continuous Huber

## 3 2 restecg integer integer Huber

## 4 3 slope factor categorical Categorical

## 5 4 age integer integer Huber

## 6 5 sex factor categorical Categorical

## 7 6 cp factor categorical Categorical

## 8 7 exang integer integer Huber

## 9 8 thal factor categorical Categorical

## 10 9 thalach integer integer Huber

## 11 10 chol integer integer Huber

## 12 11 fbs integer integer Huber

## 13 12 trestbps integer integer Huber3.4.4 Start h2o

# We are avoiding library(h2o) due to namespace conflicts with dplyr & related packages.

# Initialize h2o

h2o::h2o.no_progress() # Turn off progress bars

analyst_name = "chris-kennedy"

h2o::h2o.init(max_mem_size = "15g",

name = paste0("h2o-", analyst_name),

# Default port is 54321, but other analysts may be using that.

port = 54320,

# This can reduce accidental sharing of h2o processes on a shared server.

username = analyst_name,

password = paste0("pw-", analyst_name),

# Use half of available cores for h2o.

nthreads = get_cores())##

## H2O is not running yet, starting it now...

##

## Note: In case of errors look at the following log files:

## C:\Users\chris\AppData\Local\Temp\Rtmpmov5jY\file5fcc2573ccc/h2o_chris_started_from_r.out

## C:\Users\chris\AppData\Local\Temp\Rtmpmov5jY\file5fcc77202047/h2o_chris_started_from_r.err

##

##

## Starting H2O JVM and connecting: Connection successful!

##

## R is connected to the H2O cluster:

## H2O cluster uptime: 3 seconds 750 milliseconds

## H2O cluster timezone: America/Los_Angeles

## H2O data parsing timezone: UTC

## H2O cluster version: 3.30.0.1

## H2O cluster version age: 2 months and 16 days

## H2O cluster name: h2o-chris-kennedy

## H2O cluster total nodes: 1

## H2O cluster total memory: 15.00 GB

## H2O cluster total cores: 12

## H2O cluster allowed cores: 3

## H2O cluster healthy: TRUE

## H2O Connection ip: localhost

## H2O Connection port: 54320

## H2O Connection proxy: NA

## H2O Internal Security: FALSE

## H2O API Extensions: Amazon S3, Algos, AutoML, Core V3, TargetEncoder, Core V4

## R Version: R version 3.6.2 (2019-12-12)3.4.5 Load data into h2o

## Warning in use.package("data.table"): data.table cannot be used without R

## package bit64 version 0.9.7 or higher. Please upgrade to take advangage of

## data.table speedups.## [1] "enum" "real" "int" "enum" "int" "enum" "enum" "int" "enum" "int"

## [11] "int" "int" "int"## index feature class type loss h2o_types

## 1 0 ca factor categorical Categorical enum

## 2 1 oldpeak numeric continuous Huber real

## 3 2 restecg integer integer Huber int

## 4 3 slope factor categorical Categorical enum

## 5 4 age integer integer Huber int

## 6 5 sex factor categorical Categorical enum

## 7 6 cp factor categorical Categorical enum

## 8 7 exang integer integer Huber int

## 9 8 thal factor categorical Categorical enum

## 10 9 thalach integer integer Huber int

## 11 10 chol integer integer Huber int

## 12 11 fbs integer integer Huber int

## 13 12 trestbps integer integer Huber int3.4.6 GLRM train/test split

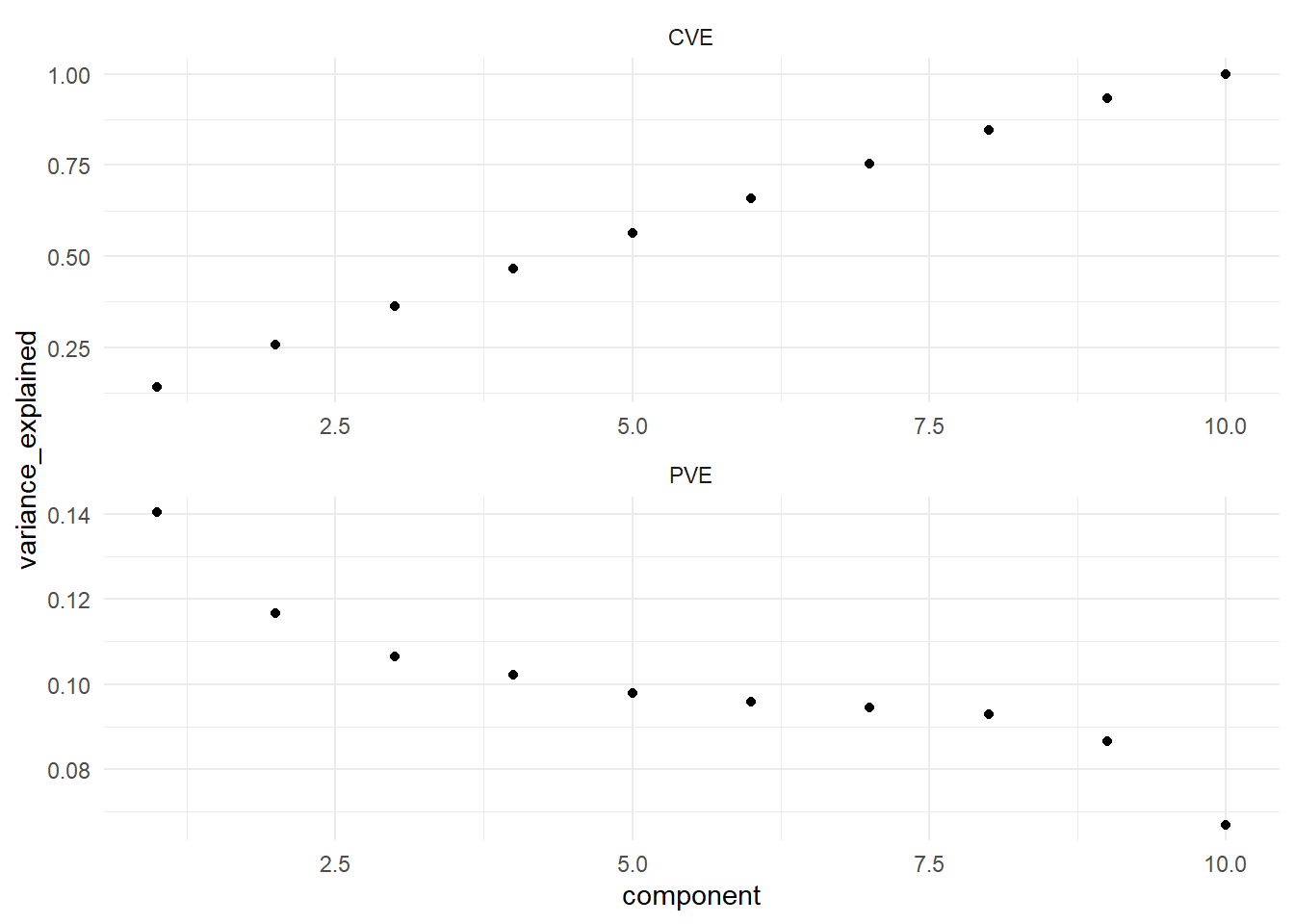

3.4.7 Define GLRM grid

Follow hyperparameter optimization method shown at: * https://github.com/h2oai/h2o-tutorials/blob/master/best-practices/glrm/GLRM-BestPractices.Rmd * and https://bradleyboehmke.github.io/HOML/GLRM.html#tuning-to-optimize-for-unseen-data

# Create hyperparameter search grid

params = expand.grid(

# Try 3 values on the exponential scale up to the maximum number of predictors.

k = round(exp(log(length(vars$predictors)) * exp(c(-0.8, -0.5, -0.1)))),

regularization_x = c("None", "Quadratic", "L1"),

regularization_y = c("None", "Quadratic", "L1"),

gamma_x = c(0, 1, 4),

gamma_y = c(0, 1, 4),

error_num = NA,

error_cat = NA,

objective = NA,

stringsAsFactors = FALSE)

# 243 combinations!

dim(params)## [1] 243 8# Remove combinations in which regularization_x = None and gamma_x != 0

params = subset(params, regularization_x != "None" | gamma_x == 0)

# Remove combinations in which regularization_x != None and gamma_x == 0

params = subset(params, regularization_x == "None" | gamma_x != 0)

# Remove combinations in which regularization_y = None and gamma_y != 0

params = subset(params, regularization_y != "None" | gamma_y == 0)

# Remove combinations in which regularization_y != None and gamma_y == 0

params = subset(params, regularization_y == "None" | gamma_y != 0)

# Down to 75 combinations.

dim(params)## [1] 75 8## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 1 3 None None 0 0 NA NA

## 2 5 None None 0 0 NA NA

## 3 10 None None 0 0 NA NA

## 31 3 Quadratic None 1 0 NA NA

## 32 5 Quadratic None 1 0 NA NA

## 33 10 Quadratic None 1 0 NA NA

## 34 3 L1 None 1 0 NA NA

## 35 5 L1 None 1 0 NA NA

## 36 10 L1 None 1 0 NA NA

## 58 3 Quadratic None 4 0 NA NA

## 59 5 Quadratic None 4 0 NA NA

## 60 10 Quadratic None 4 0 NA NA

## 61 3 L1 None 4 0 NA NA

## 62 5 L1 None 4 0 NA NA

## 63 10 L1 None 4 0 NA NA

## 91 3 None Quadratic 0 1 NA NA

## 92 5 None Quadratic 0 1 NA NA

## 93 10 None Quadratic 0 1 NA NA

## 100 3 None L1 0 1 NA NA

## 101 5 None L1 0 1 NA NA

## 102 10 None L1 0 1 NA NA

## 121 3 Quadratic Quadratic 1 1 NA NA

## 122 5 Quadratic Quadratic 1 1 NA NA

## 123 10 Quadratic Quadratic 1 1 NA NA

## 124 3 L1 Quadratic 1 1 NA NA

## 125 5 L1 Quadratic 1 1 NA NA

## 126 10 L1 Quadratic 1 1 NA NA

## 130 3 Quadratic L1 1 1 NA NA

## 131 5 Quadratic L1 1 1 NA NA

## 132 10 Quadratic L1 1 1 NA NA

## 133 3 L1 L1 1 1 NA NA

## 134 5 L1 L1 1 1 NA NA

## 135 10 L1 L1 1 1 NA NA

## 148 3 Quadratic Quadratic 4 1 NA NA

## 149 5 Quadratic Quadratic 4 1 NA NA

## 150 10 Quadratic Quadratic 4 1 NA NA

## 151 3 L1 Quadratic 4 1 NA NA

## 152 5 L1 Quadratic 4 1 NA NA

## 153 10 L1 Quadratic 4 1 NA NA

## 157 3 Quadratic L1 4 1 NA NA

## 158 5 Quadratic L1 4 1 NA NA

## 159 10 Quadratic L1 4 1 NA NA

## 160 3 L1 L1 4 1 NA NA

## 161 5 L1 L1 4 1 NA NA

## 162 10 L1 L1 4 1 NA NA

## 172 3 None Quadratic 0 4 NA NA

## 173 5 None Quadratic 0 4 NA NA

## 174 10 None Quadratic 0 4 NA NA

## 181 3 None L1 0 4 NA NA

## 182 5 None L1 0 4 NA NA

## 183 10 None L1 0 4 NA NA

## 202 3 Quadratic Quadratic 1 4 NA NA

## 203 5 Quadratic Quadratic 1 4 NA NA

## 204 10 Quadratic Quadratic 1 4 NA NA

## 205 3 L1 Quadratic 1 4 NA NA

## 206 5 L1 Quadratic 1 4 NA NA

## 207 10 L1 Quadratic 1 4 NA NA

## 211 3 Quadratic L1 1 4 NA NA

## 212 5 Quadratic L1 1 4 NA NA

## 213 10 Quadratic L1 1 4 NA NA

## 214 3 L1 L1 1 4 NA NA

## 215 5 L1 L1 1 4 NA NA

## 216 10 L1 L1 1 4 NA NA

## 229 3 Quadratic Quadratic 4 4 NA NA

## 230 5 Quadratic Quadratic 4 4 NA NA

## 231 10 Quadratic Quadratic 4 4 NA NA

## 232 3 L1 Quadratic 4 4 NA NA

## 233 5 L1 Quadratic 4 4 NA NA

## 234 10 L1 Quadratic 4 4 NA NA

## 238 3 Quadratic L1 4 4 NA NA

## 239 5 Quadratic L1 4 4 NA NA

## 240 10 Quadratic L1 4 4 NA NA

## 241 3 L1 L1 4 4 NA NA

## 242 5 L1 L1 4 4 NA NA

## 243 10 L1 L1 4 4 NA NA

## objective

## 1 NA

## 2 NA

## 3 NA

## 31 NA

## 32 NA

## 33 NA

## 34 NA

## 35 NA

## 36 NA

## 58 NA

## 59 NA

## 60 NA

## 61 NA

## 62 NA

## 63 NA

## 91 NA

## 92 NA

## 93 NA

## 100 NA

## 101 NA

## 102 NA

## 121 NA

## 122 NA

## 123 NA

## 124 NA

## 125 NA

## 126 NA

## 130 NA

## 131 NA

## 132 NA

## 133 NA

## 134 NA

## 135 NA

## 148 NA

## 149 NA

## 150 NA

## 151 NA

## 152 NA

## 153 NA

## 157 NA

## 158 NA

## 159 NA

## 160 NA

## 161 NA

## 162 NA

## 172 NA

## 173 NA

## 174 NA

## 181 NA

## 182 NA

## 183 NA

## 202 NA

## 203 NA

## 204 NA

## 205 NA

## 206 NA

## 207 NA

## 211 NA

## 212 NA

## 213 NA

## 214 NA

## 215 NA

## 216 NA

## 229 NA

## 230 NA

## 231 NA

## 232 NA

## 233 NA

## 234 NA

## 238 NA

## 239 NA

## 240 NA

## 241 NA

## 242 NA

## 243 NA# Randomly order the params so that we can stop at any time.

set.seed(1)

params = params[sample(nrow(params)), ]

params## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 233 5 L1 Quadratic 4 4 NA NA

## 153 10 L1 Quadratic 4 1 NA NA

## 1 3 None None 0 0 NA NA

## 148 3 Quadratic Quadratic 4 1 NA NA

## 160 3 L1 L1 4 1 NA NA

## 62 5 L1 None 4 0 NA NA

## 212 5 Quadratic L1 1 4 NA NA

## 183 10 None L1 0 4 NA NA

## 102 10 None L1 0 1 NA NA

## 204 10 Quadratic Quadratic 1 4 NA NA

## 34 3 L1 None 1 0 NA NA

## 36 10 L1 None 1 0 NA NA

## 63 10 L1 None 4 0 NA NA

## 232 3 L1 Quadratic 4 4 NA NA

## 151 3 L1 Quadratic 4 1 NA NA

## 158 5 Quadratic L1 4 1 NA NA

## 124 3 L1 Quadratic 1 1 NA NA

## 172 3 None Quadratic 0 4 NA NA

## 214 3 L1 L1 1 4 NA NA

## 207 10 L1 Quadratic 1 4 NA NA

## 240 10 Quadratic L1 4 4 NA NA

## 159 10 Quadratic L1 4 1 NA NA

## 234 10 L1 Quadratic 4 4 NA NA

## 161 5 L1 L1 4 1 NA NA

## 216 10 L1 L1 1 4 NA NA

## 135 10 L1 L1 1 1 NA NA

## 101 5 None L1 0 1 NA NA

## 149 5 Quadratic Quadratic 4 1 NA NA

## 33 10 Quadratic None 1 0 NA NA

## 58 3 Quadratic None 4 0 NA NA

## 231 10 Quadratic Quadratic 4 4 NA NA

## 152 5 L1 Quadratic 4 1 NA NA

## 181 3 None L1 0 4 NA NA

## 130 3 Quadratic L1 1 1 NA NA

## 239 5 Quadratic L1 4 4 NA NA

## 122 5 Quadratic Quadratic 1 1 NA NA

## 173 5 None Quadratic 0 4 NA NA

## 203 5 Quadratic Quadratic 1 4 NA NA

## 242 5 L1 L1 4 4 NA NA

## 206 5 L1 Quadratic 1 4 NA NA

## 123 10 Quadratic Quadratic 1 1 NA NA

## 134 5 L1 L1 1 1 NA NA

## 238 3 Quadratic L1 4 4 NA NA

## 2 5 None None 0 0 NA NA

## 61 3 L1 None 4 0 NA NA

## 93 10 None Quadratic 0 1 NA NA

## 121 3 Quadratic Quadratic 1 1 NA NA

## 182 5 None L1 0 4 NA NA

## 150 10 Quadratic Quadratic 4 1 NA NA

## 241 3 L1 L1 4 4 NA NA

## 100 3 None L1 0 1 NA NA

## 202 3 Quadratic Quadratic 1 4 NA NA

## 35 5 L1 None 1 0 NA NA

## 126 10 L1 Quadratic 1 1 NA NA

## 60 10 Quadratic None 4 0 NA NA

## 131 5 Quadratic L1 1 1 NA NA

## 157 3 Quadratic L1 4 1 NA NA

## 230 5 Quadratic Quadratic 4 4 NA NA

## 59 5 Quadratic None 4 0 NA NA

## 125 5 L1 Quadratic 1 1 NA NA

## 31 3 Quadratic None 1 0 NA NA

## 133 3 L1 L1 1 1 NA NA

## 174 10 None Quadratic 0 4 NA NA

## 229 3 Quadratic Quadratic 4 4 NA NA

## 215 5 L1 L1 1 4 NA NA

## 132 10 Quadratic L1 1 1 NA NA

## 243 10 L1 L1 4 4 NA NA

## 211 3 Quadratic L1 1 4 NA NA

## 32 5 Quadratic None 1 0 NA NA

## 162 10 L1 L1 4 1 NA NA

## 92 5 None Quadratic 0 1 NA NA

## 205 3 L1 Quadratic 1 4 NA NA

## 91 3 None Quadratic 0 1 NA NA

## 213 10 Quadratic L1 1 4 NA NA

## 3 10 None None 0 0 NA NA

## objective

## 233 NA

## 153 NA

## 1 NA

## 148 NA

## 160 NA

## 62 NA

## 212 NA

## 183 NA

## 102 NA

## 204 NA

## 34 NA

## 36 NA

## 63 NA

## 232 NA

## 151 NA

## 158 NA

## 124 NA

## 172 NA

## 214 NA

## 207 NA

## 240 NA

## 159 NA

## 234 NA

## 161 NA

## 216 NA

## 135 NA

## 101 NA

## 149 NA

## 33 NA

## 58 NA

## 231 NA

## 152 NA

## 181 NA

## 130 NA

## 239 NA

## 122 NA

## 173 NA

## 203 NA

## 242 NA

## 206 NA

## 123 NA

## 134 NA

## 238 NA

## 2 NA

## 61 NA

## 93 NA

## 121 NA

## 182 NA

## 150 NA

## 241 NA

## 100 NA

## 202 NA

## 35 NA

## 126 NA

## 60 NA

## 131 NA

## 157 NA

## 230 NA

## 59 NA

## 125 NA

## 31 NA

## 133 NA

## 174 NA

## 229 NA

## 215 NA

## 132 NA

## 243 NA

## 211 NA

## 32 NA

## 162 NA

## 92 NA

## 205 NA

## 91 NA

## 213 NA

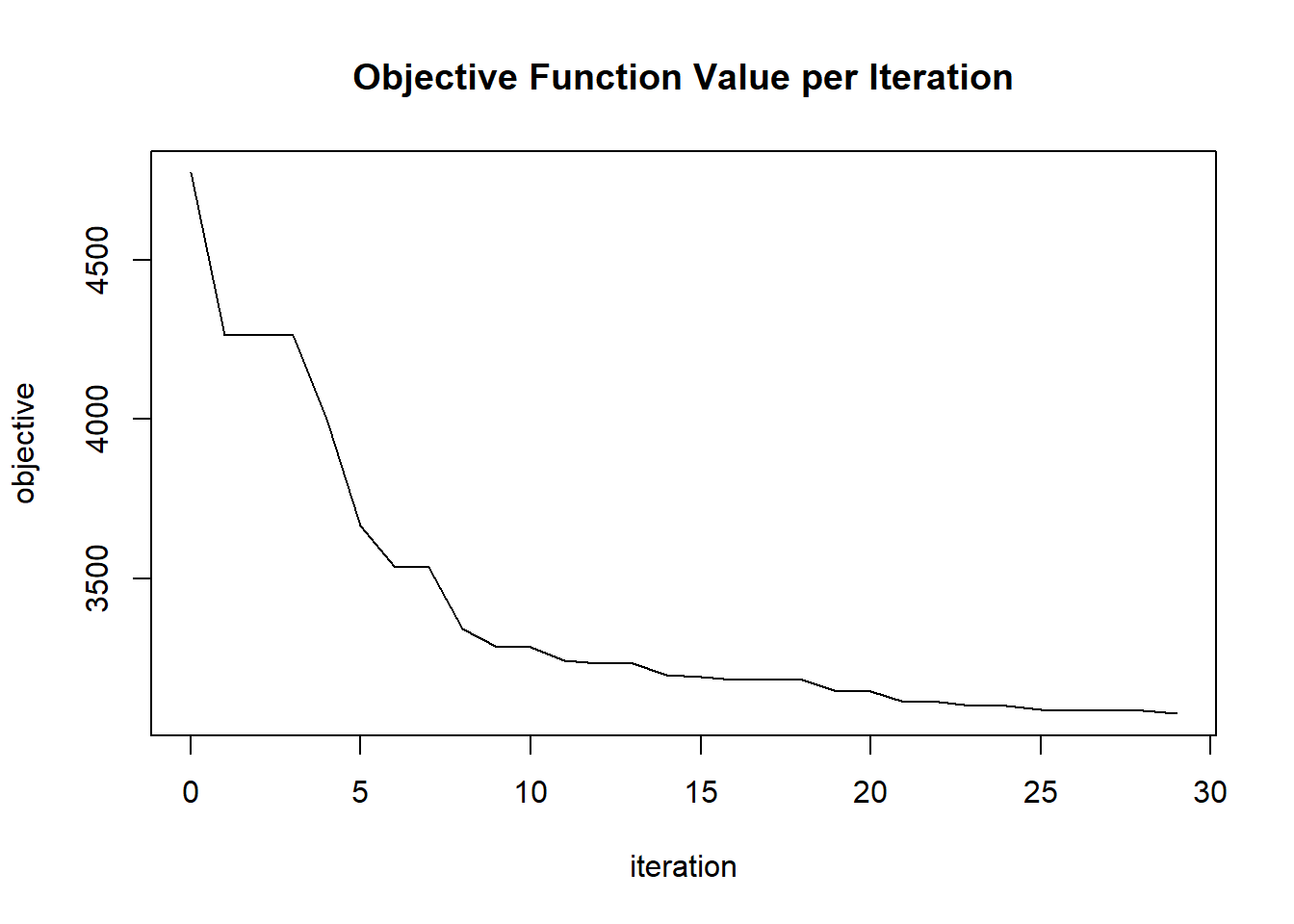

## 3 NA3.4.8 GLRM grid search

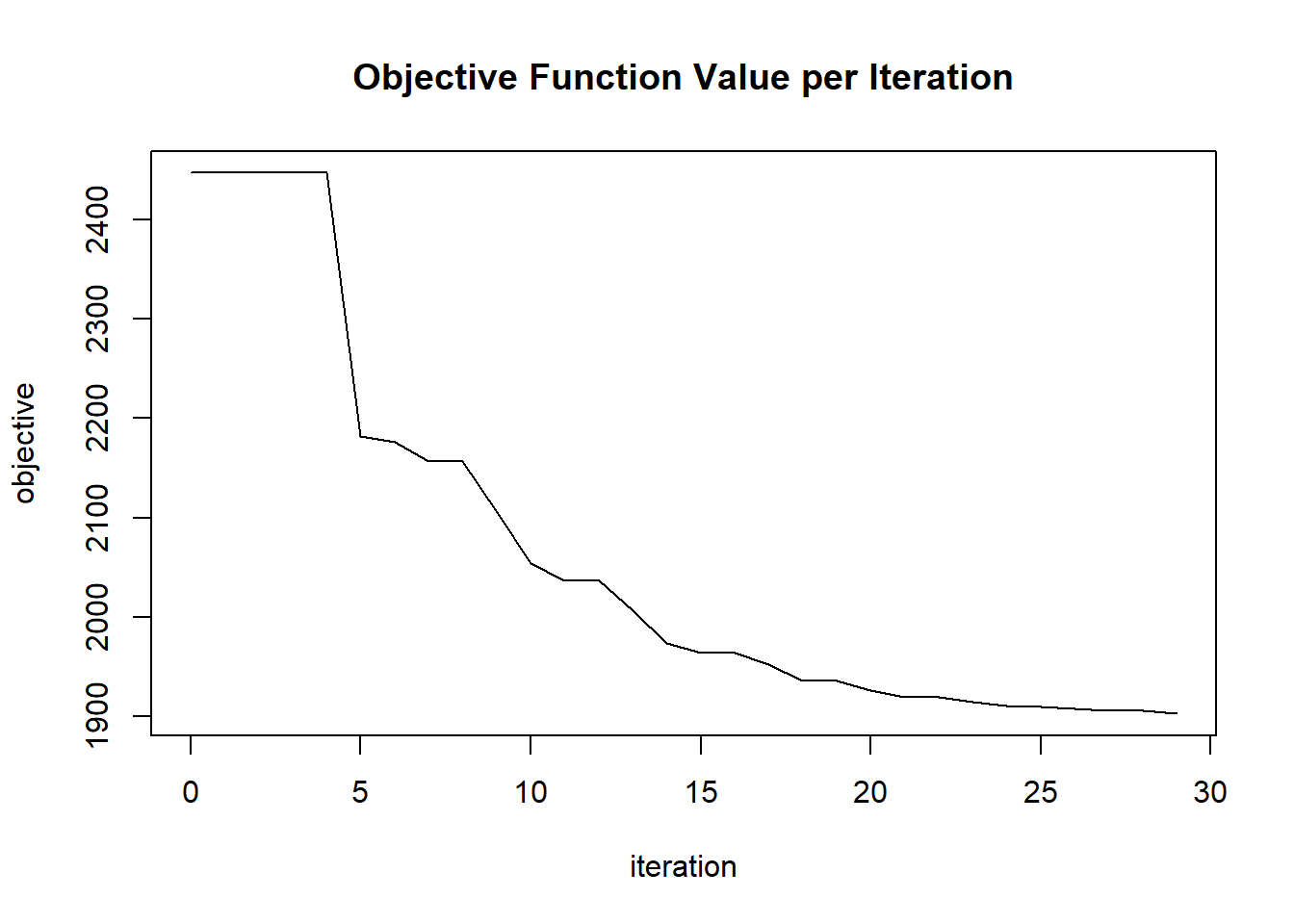

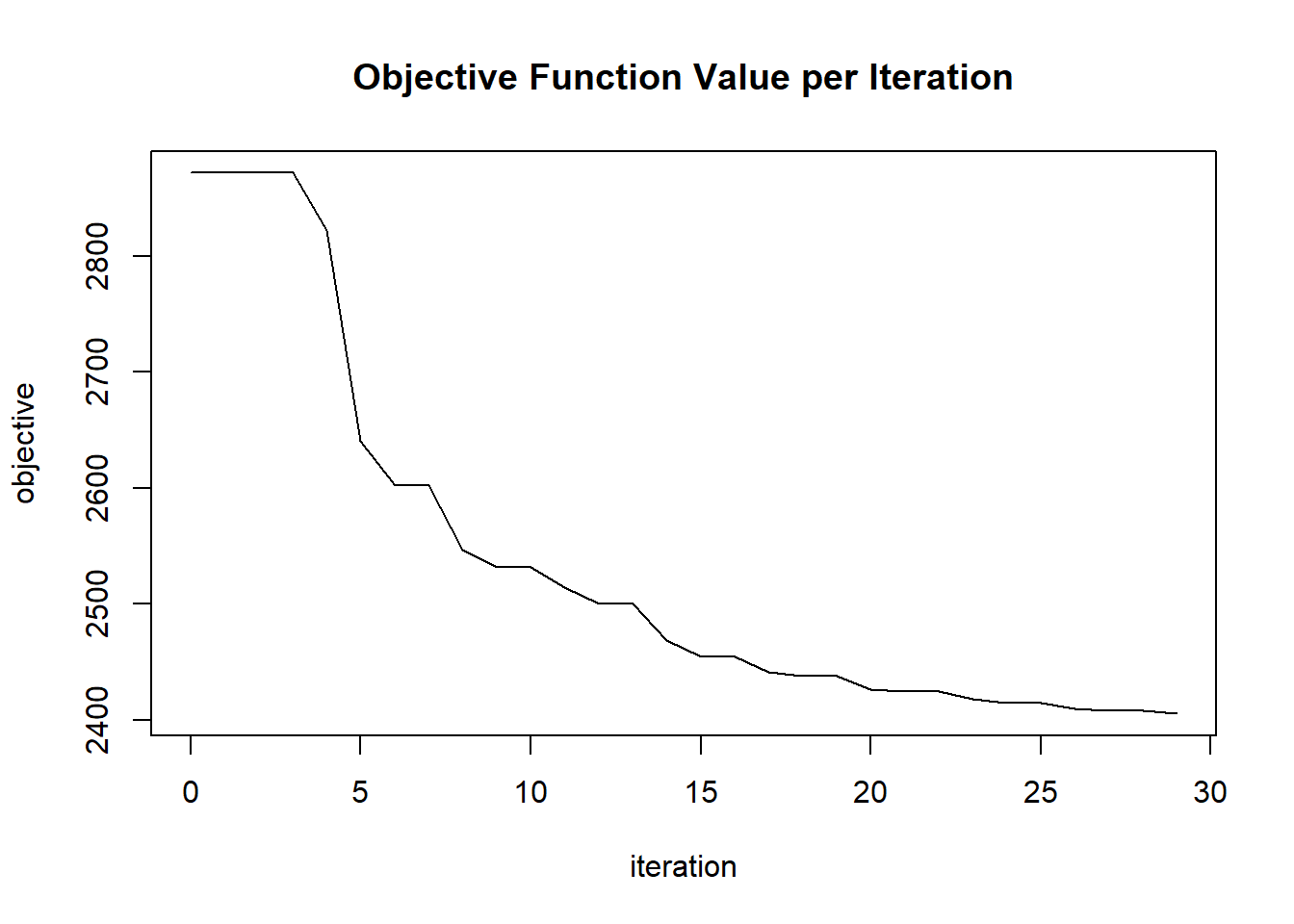

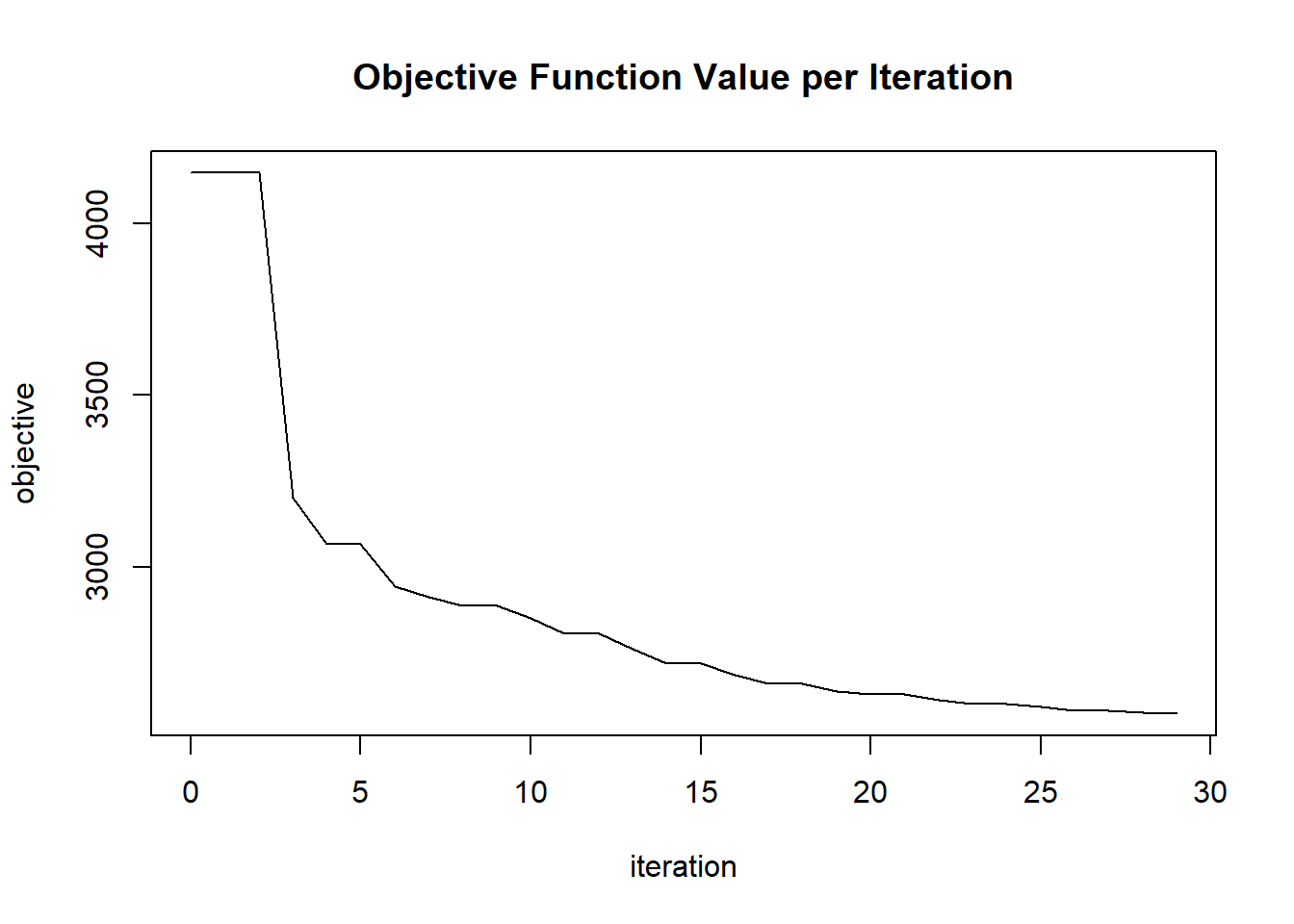

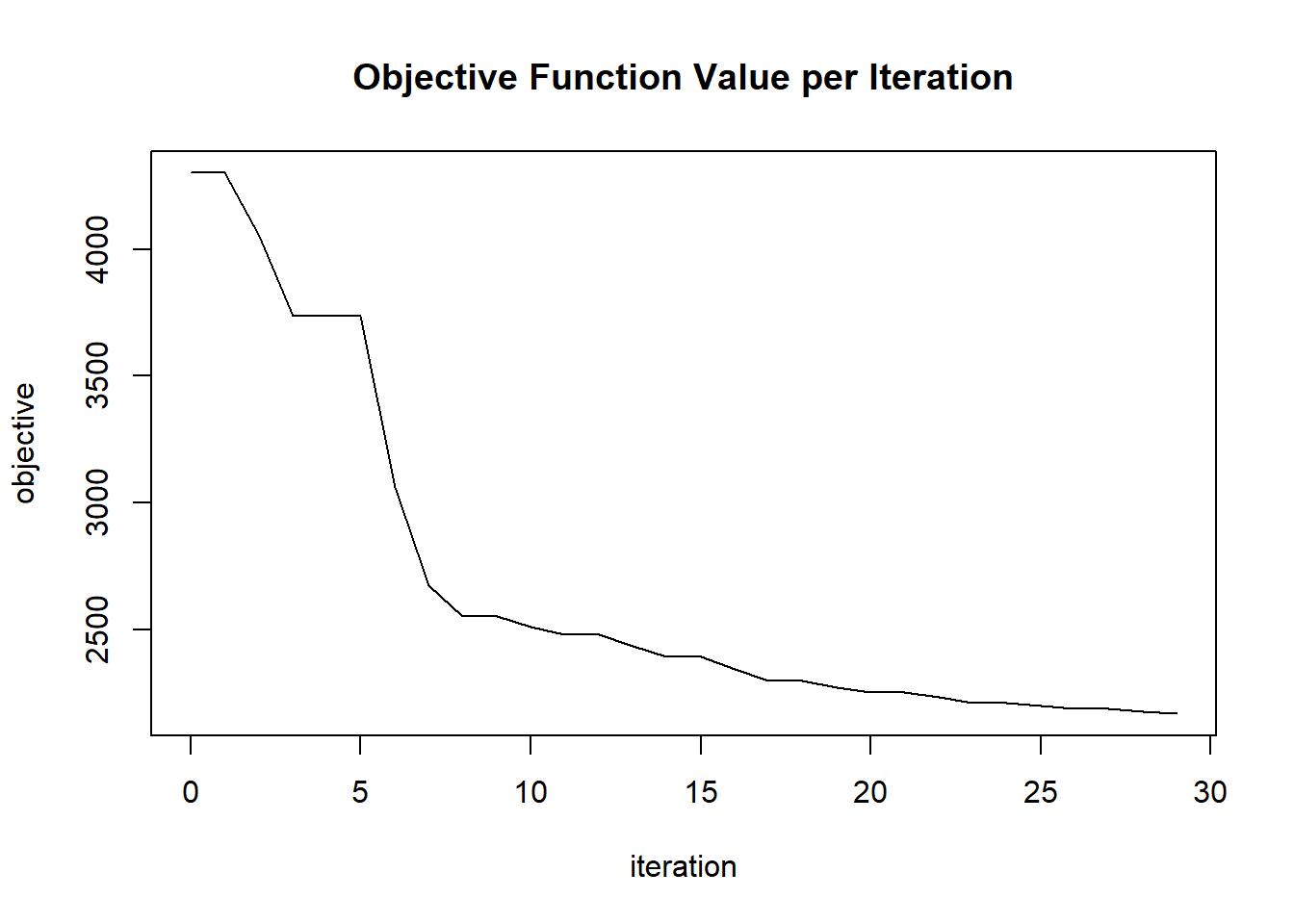

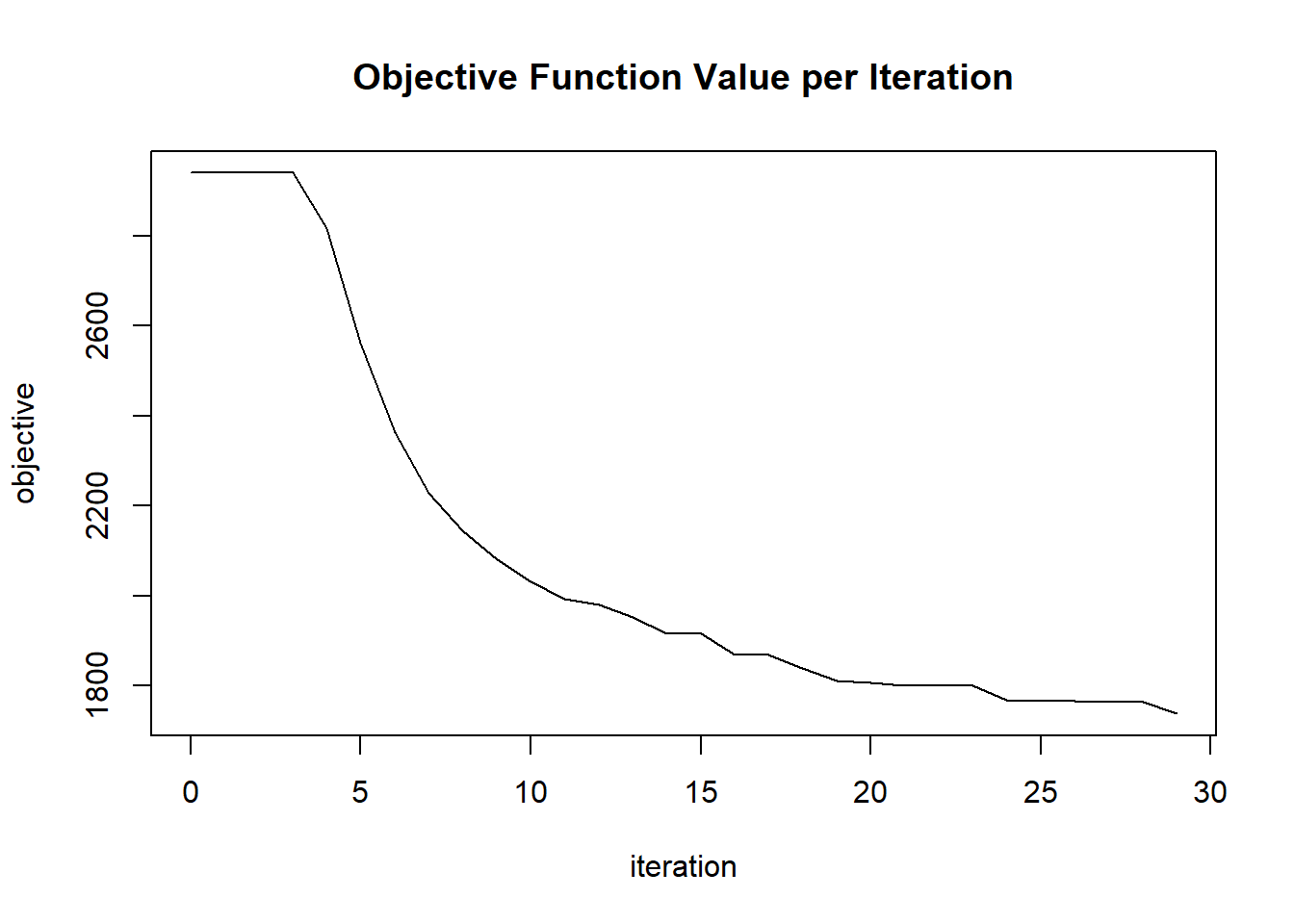

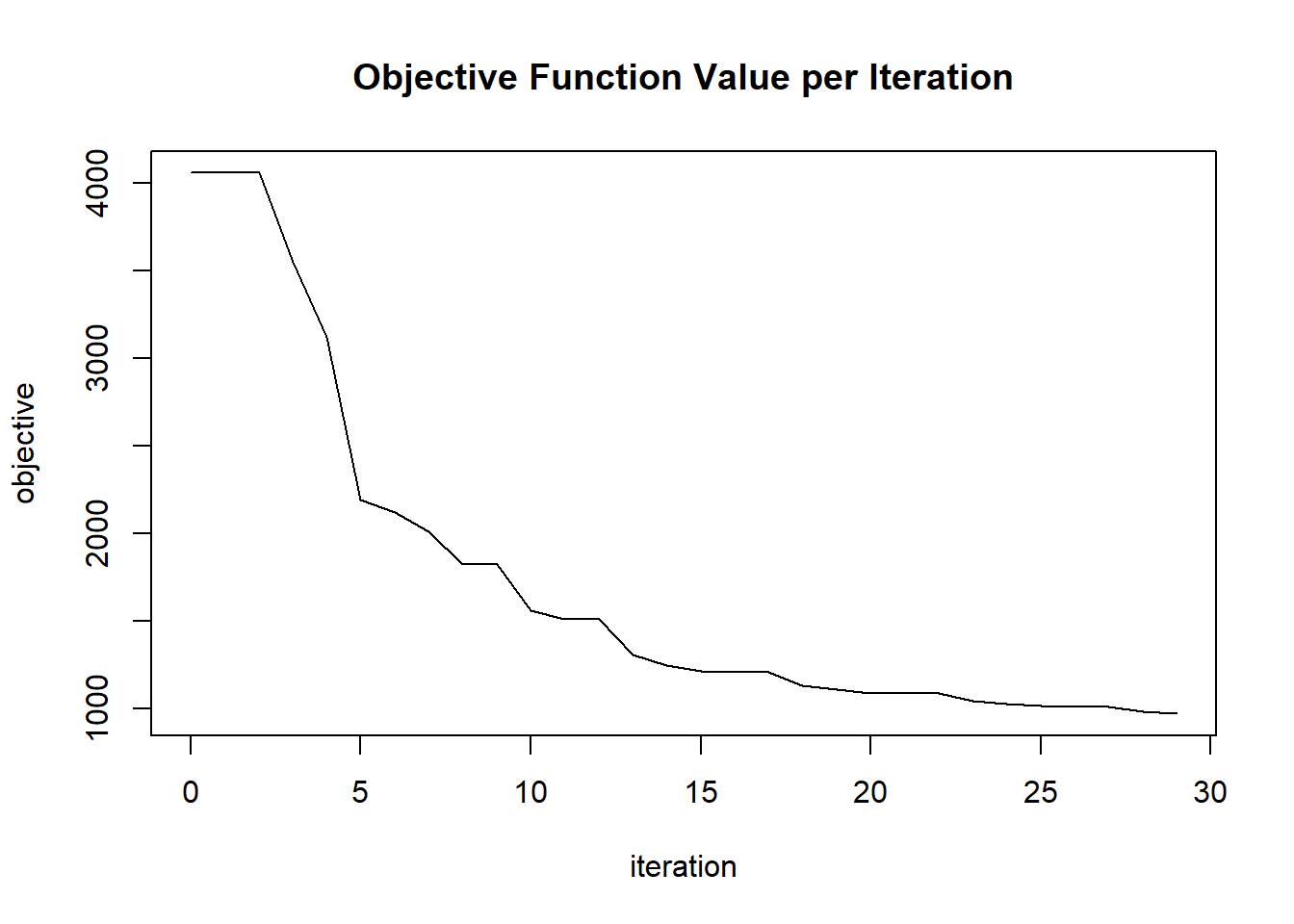

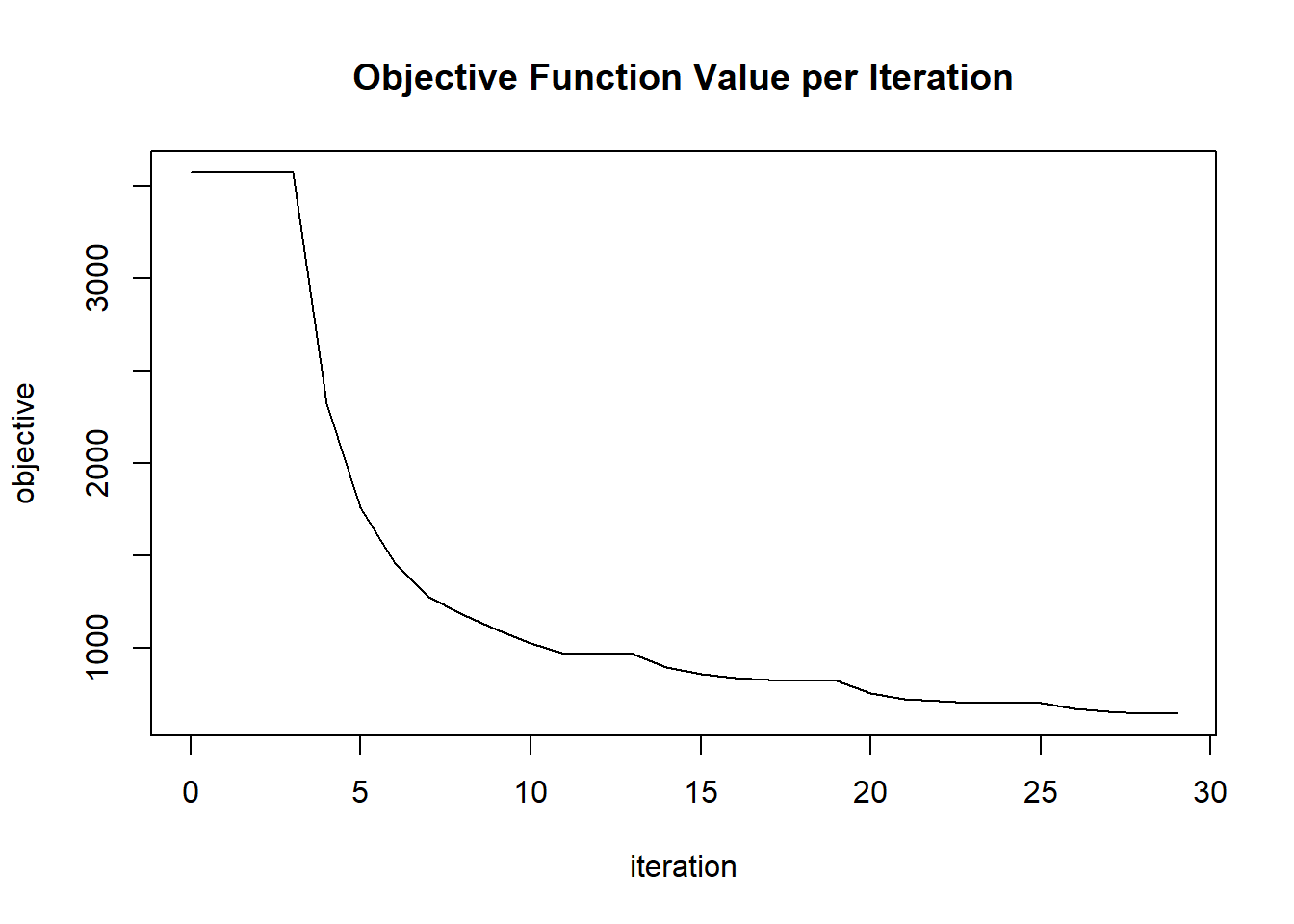

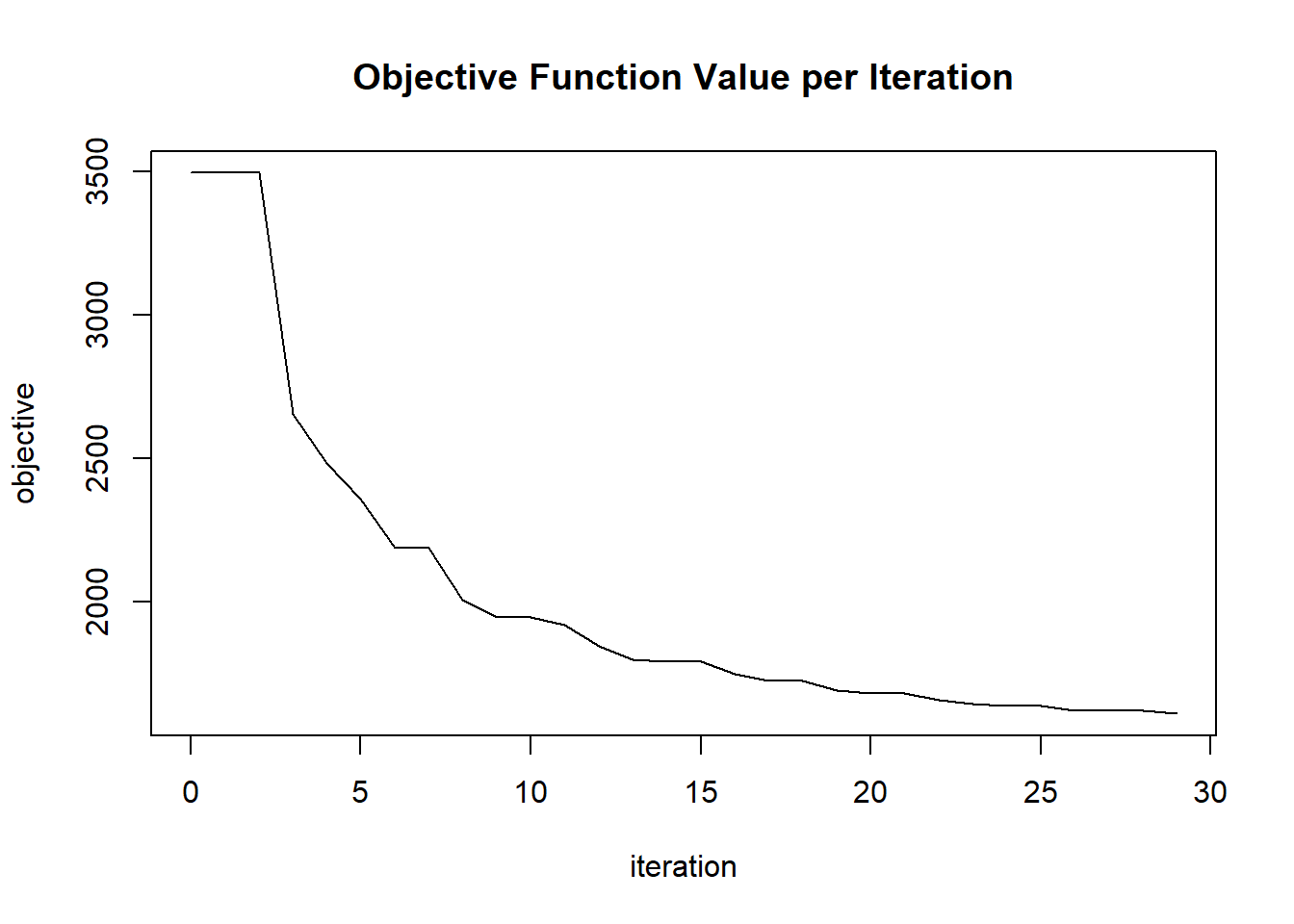

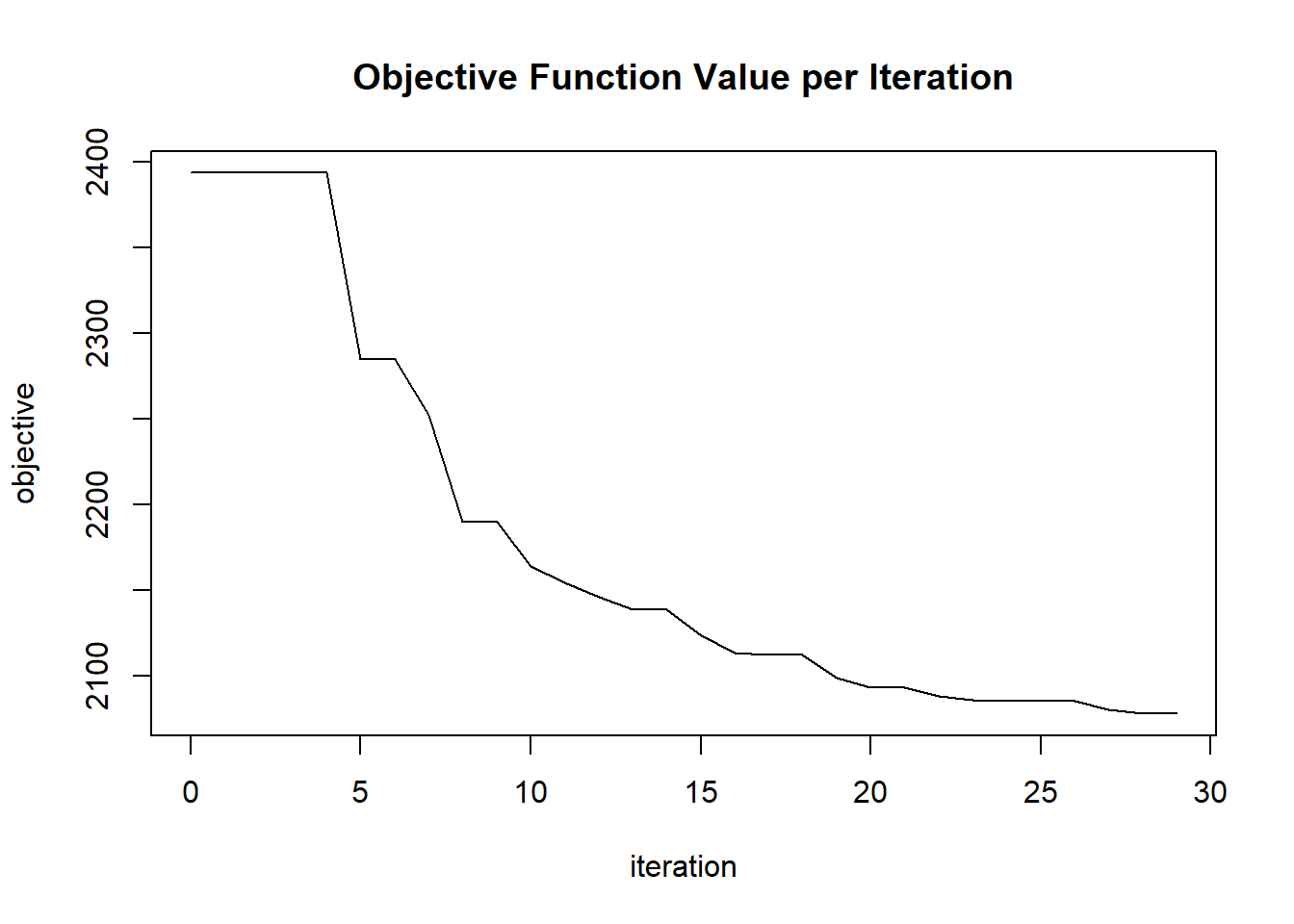

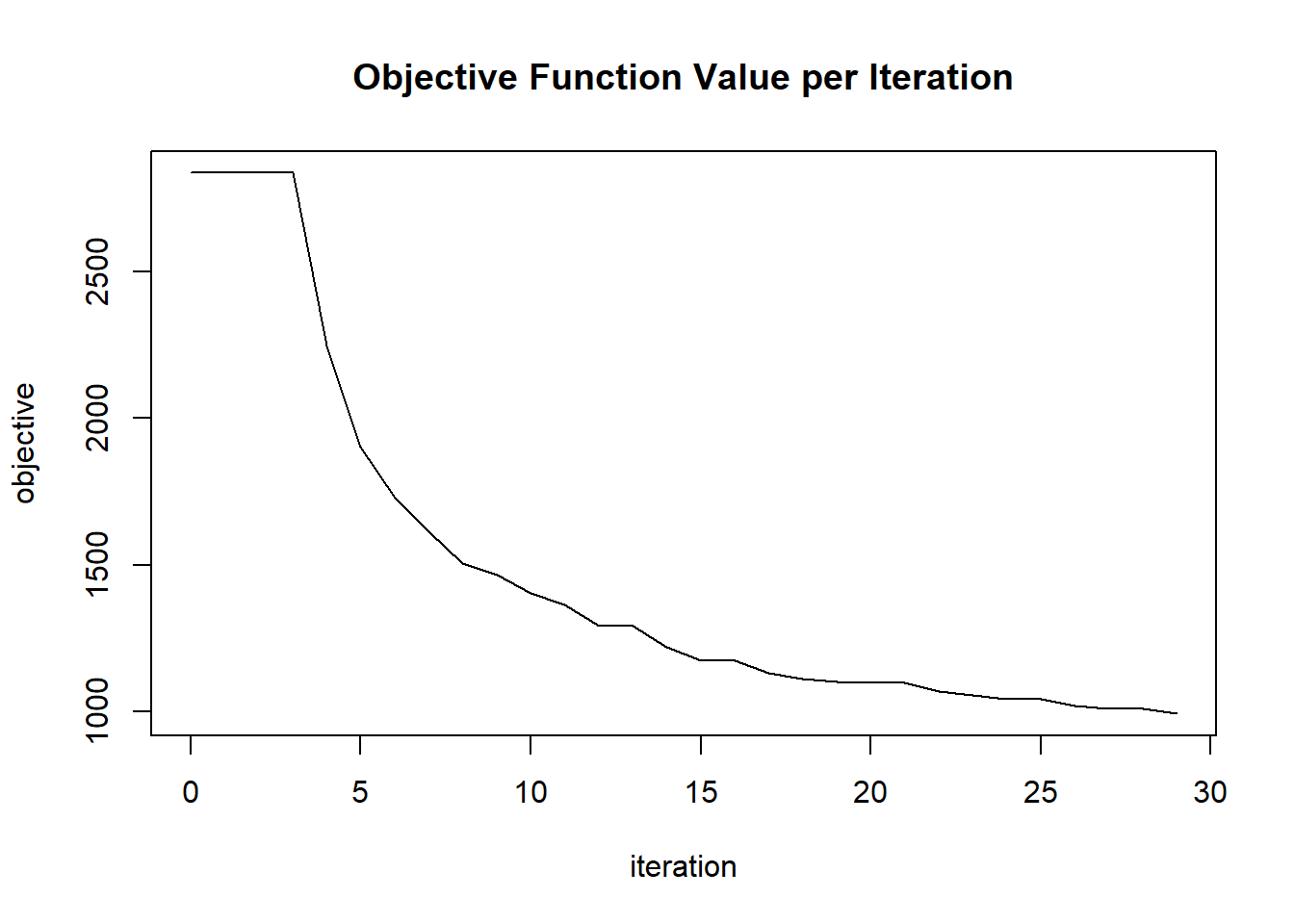

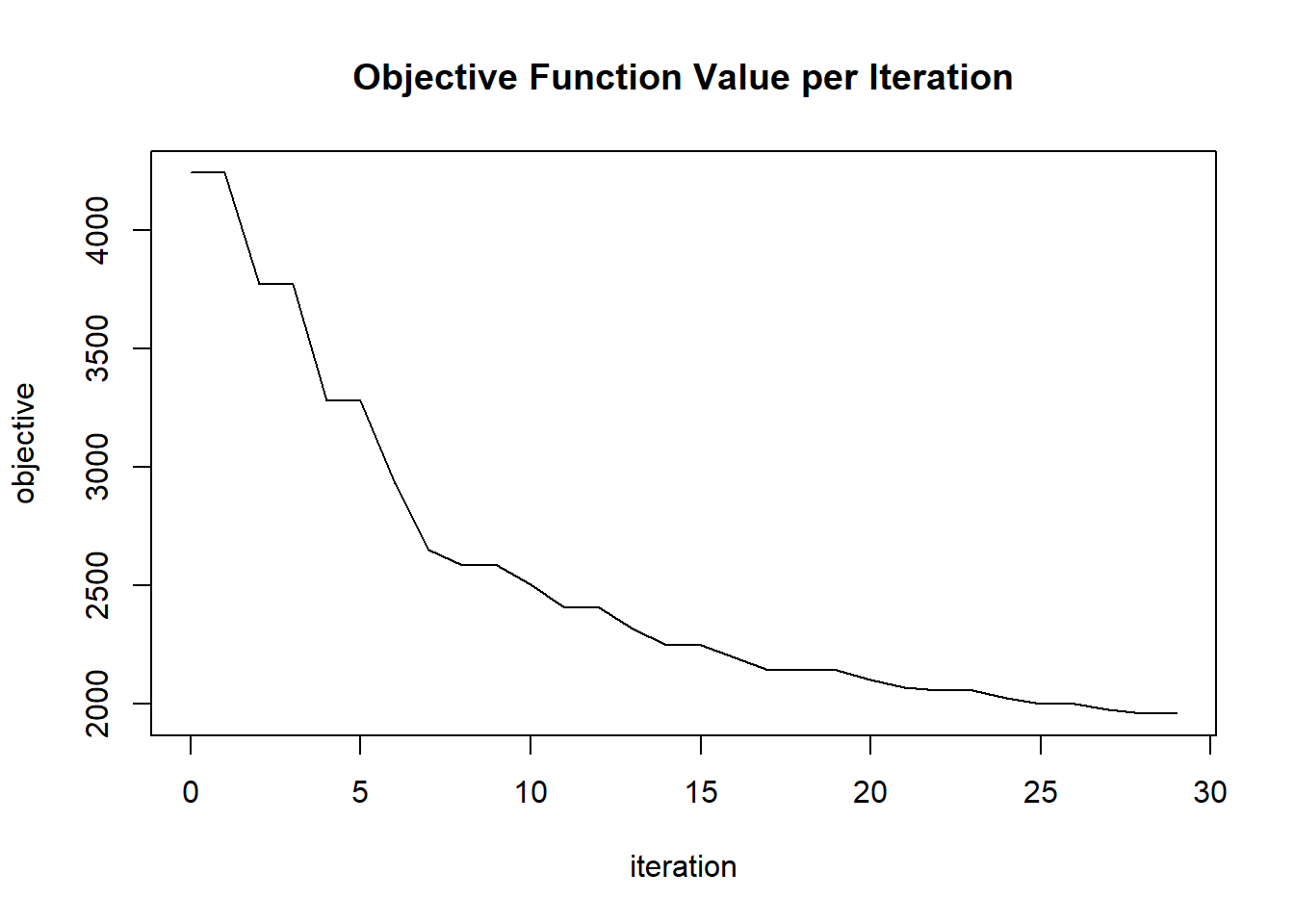

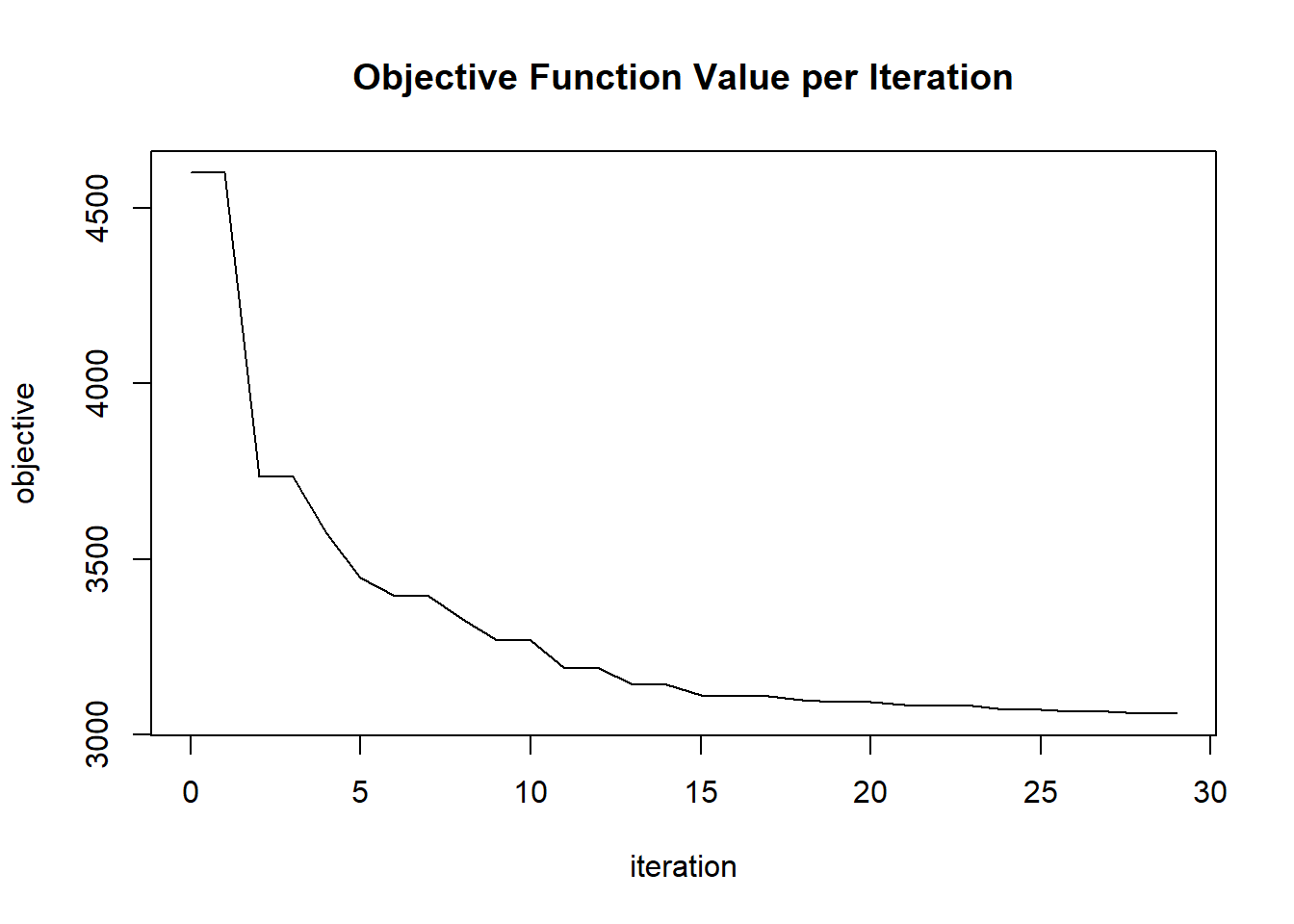

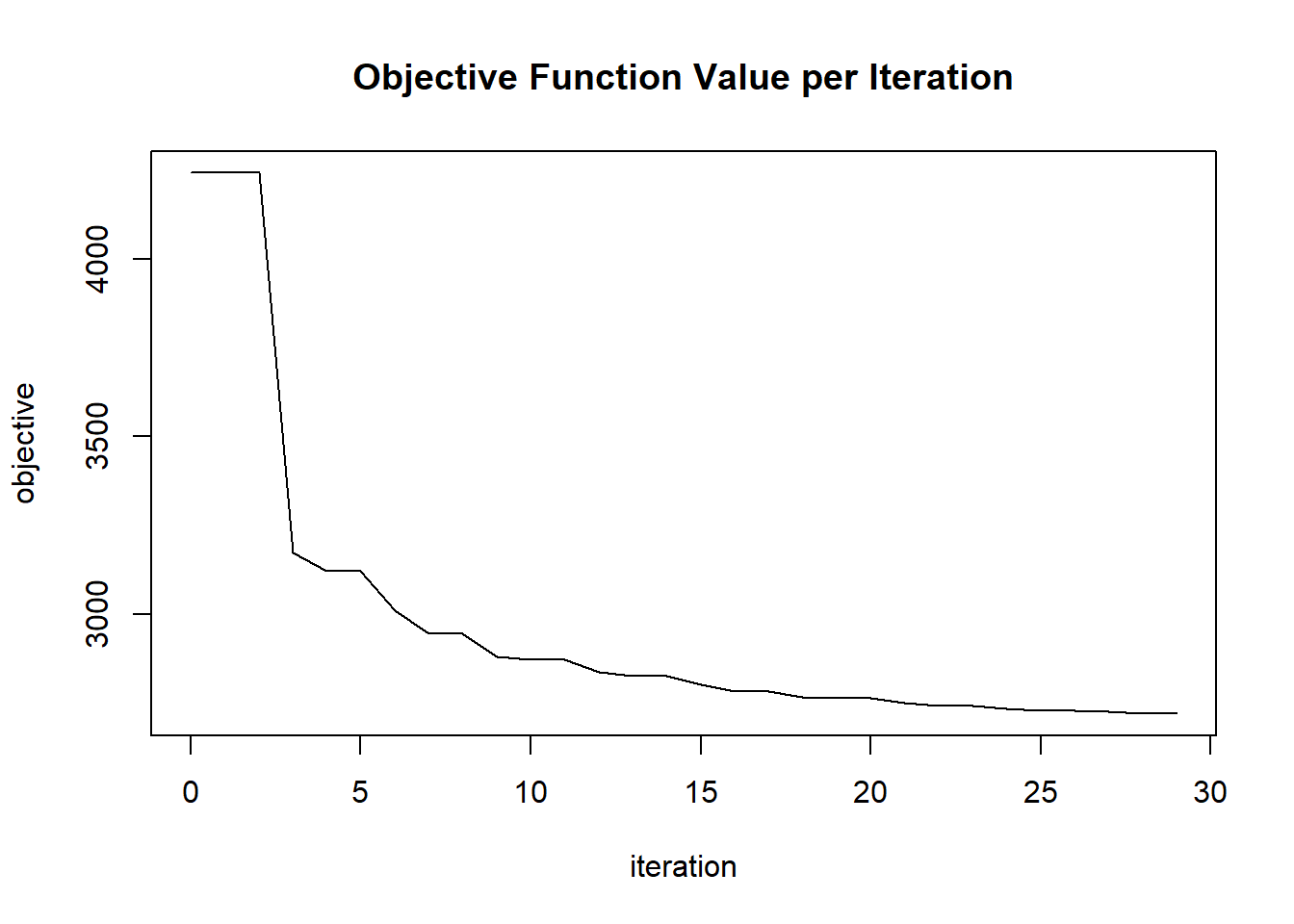

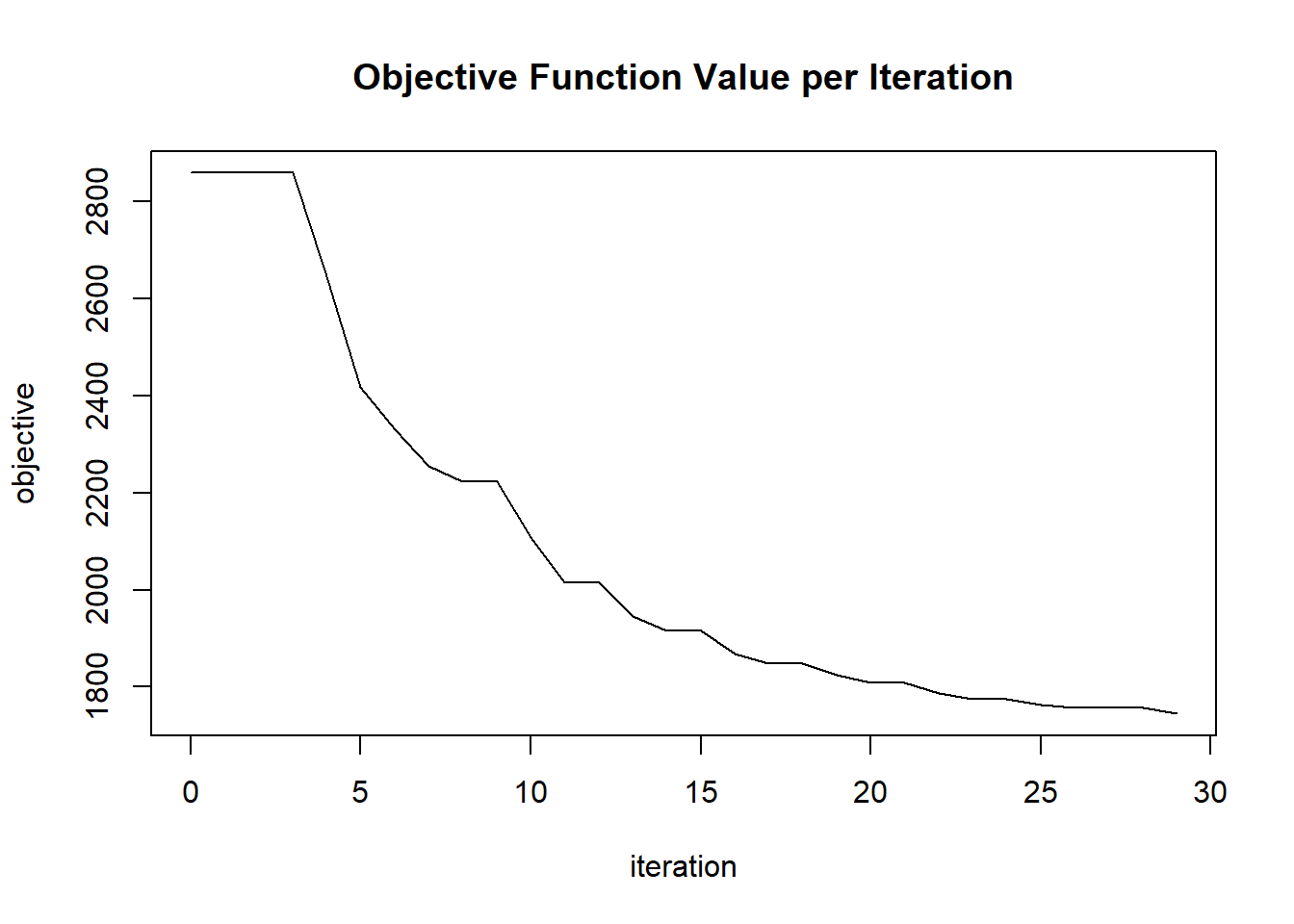

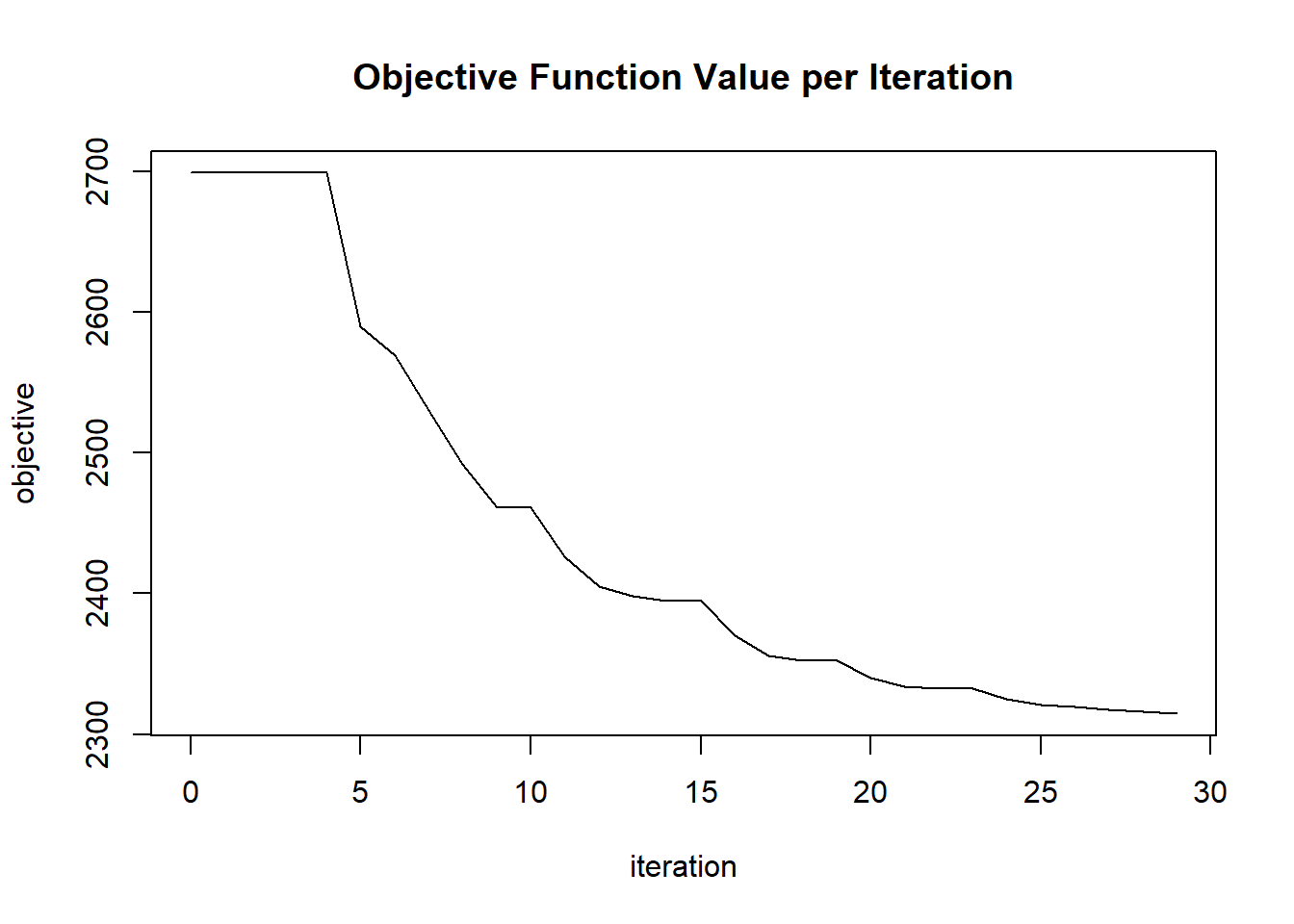

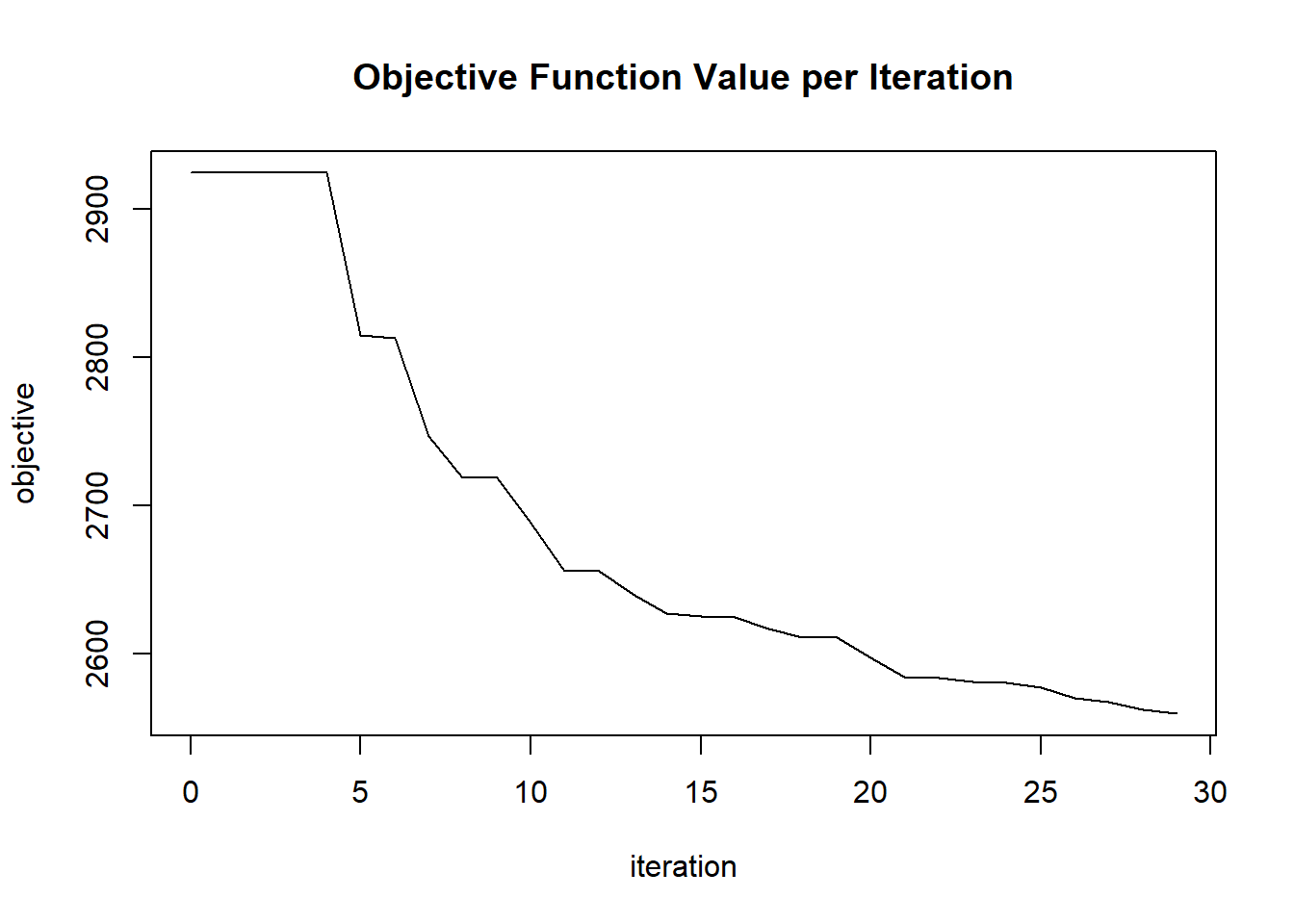

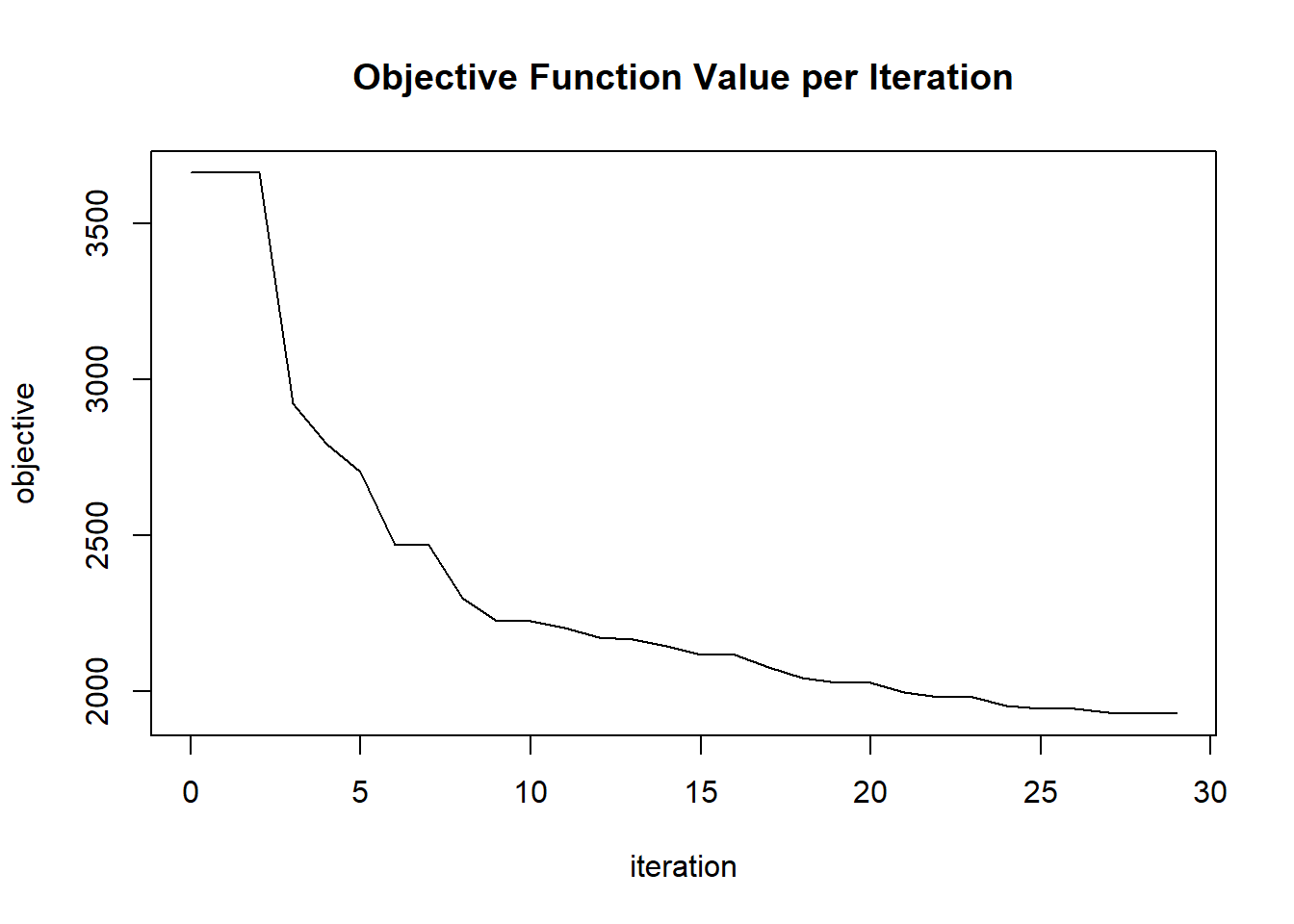

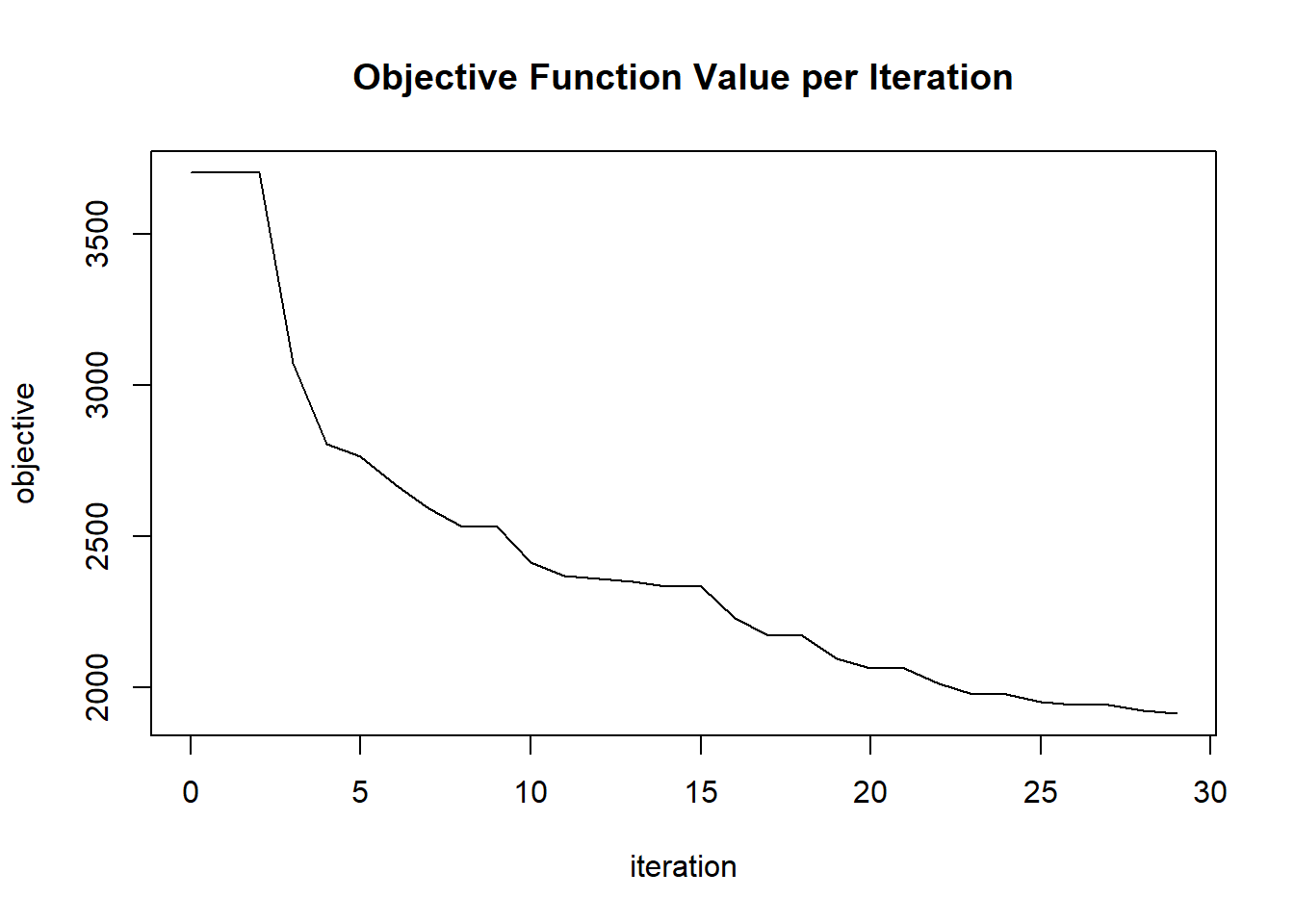

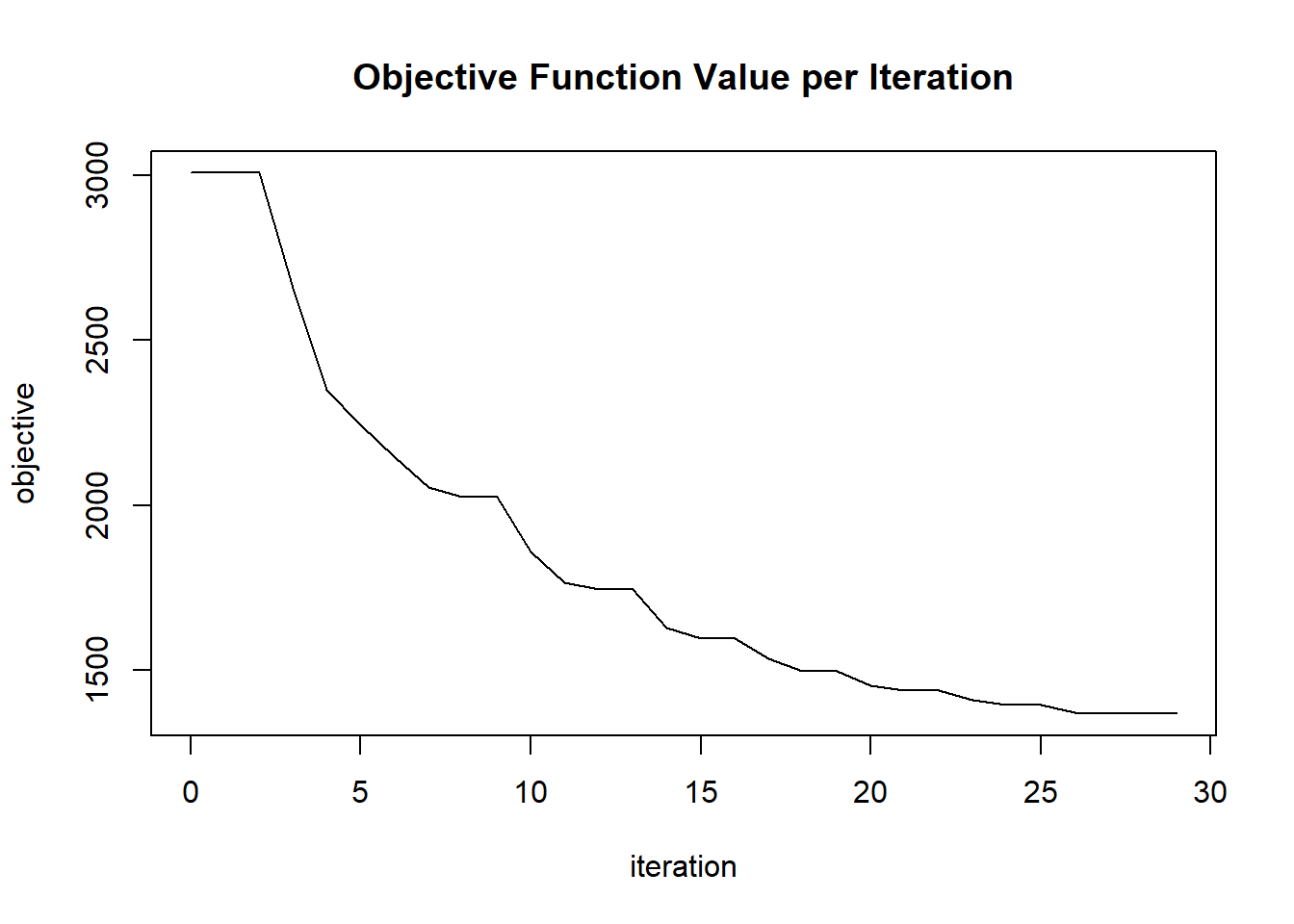

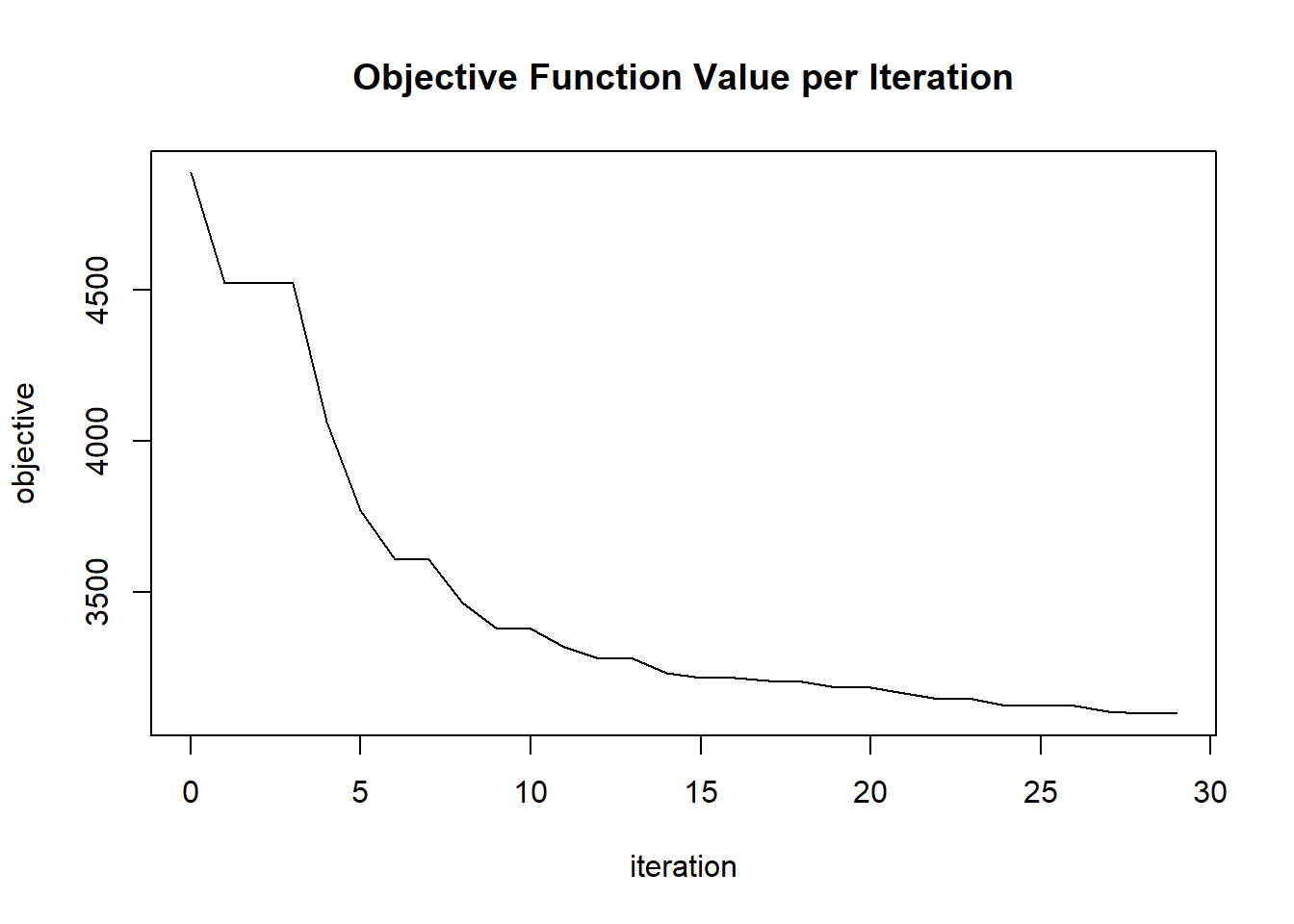

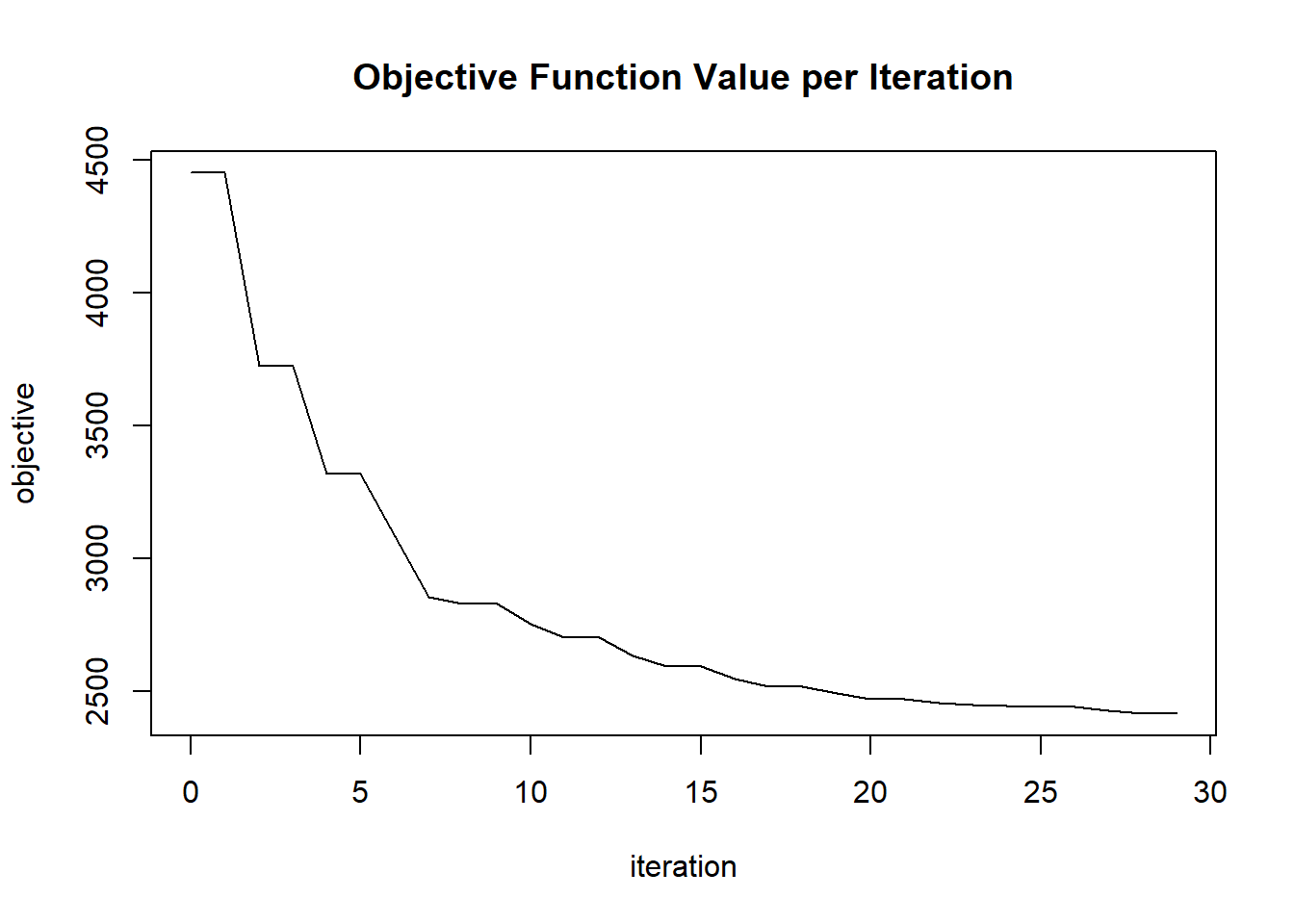

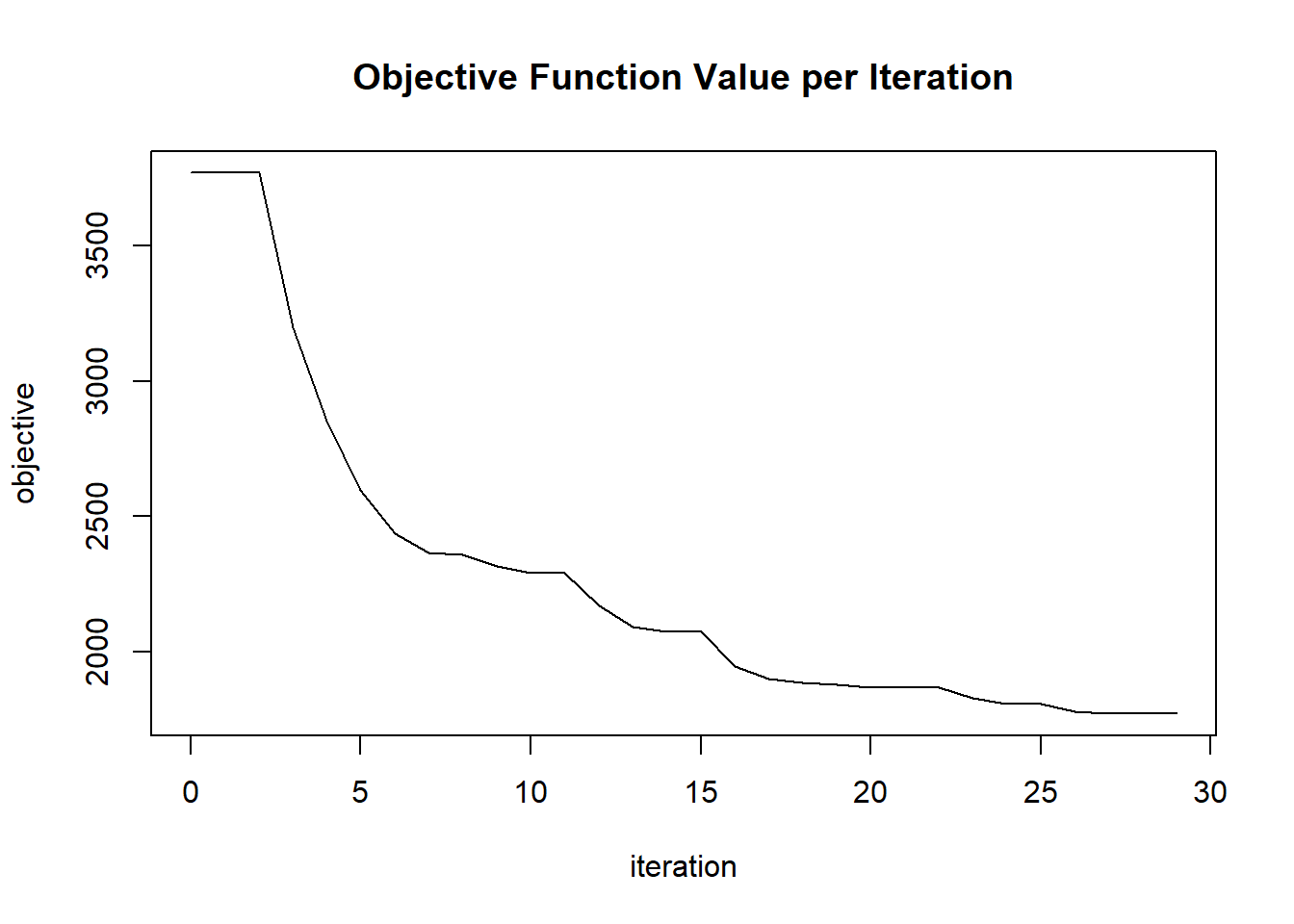

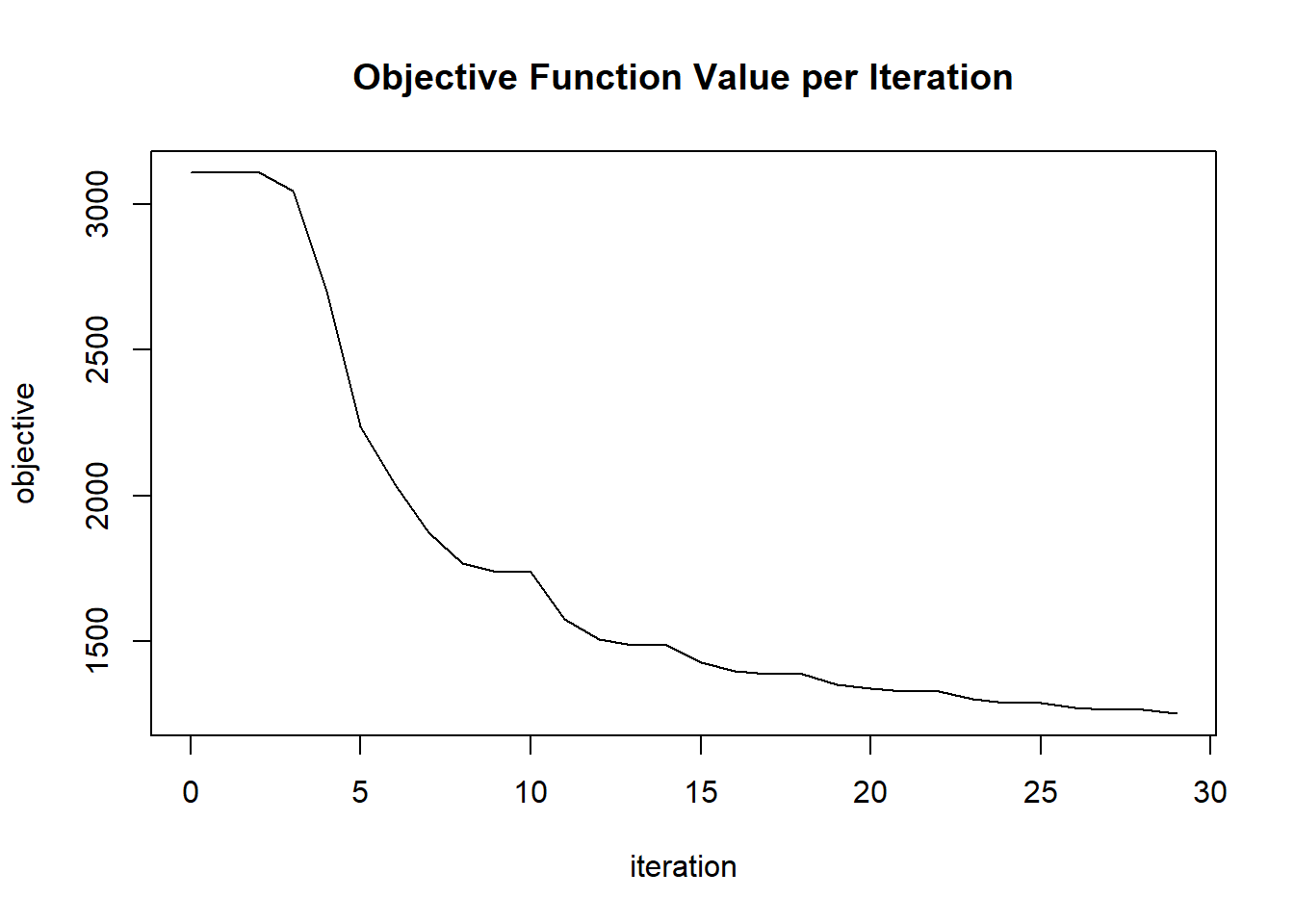

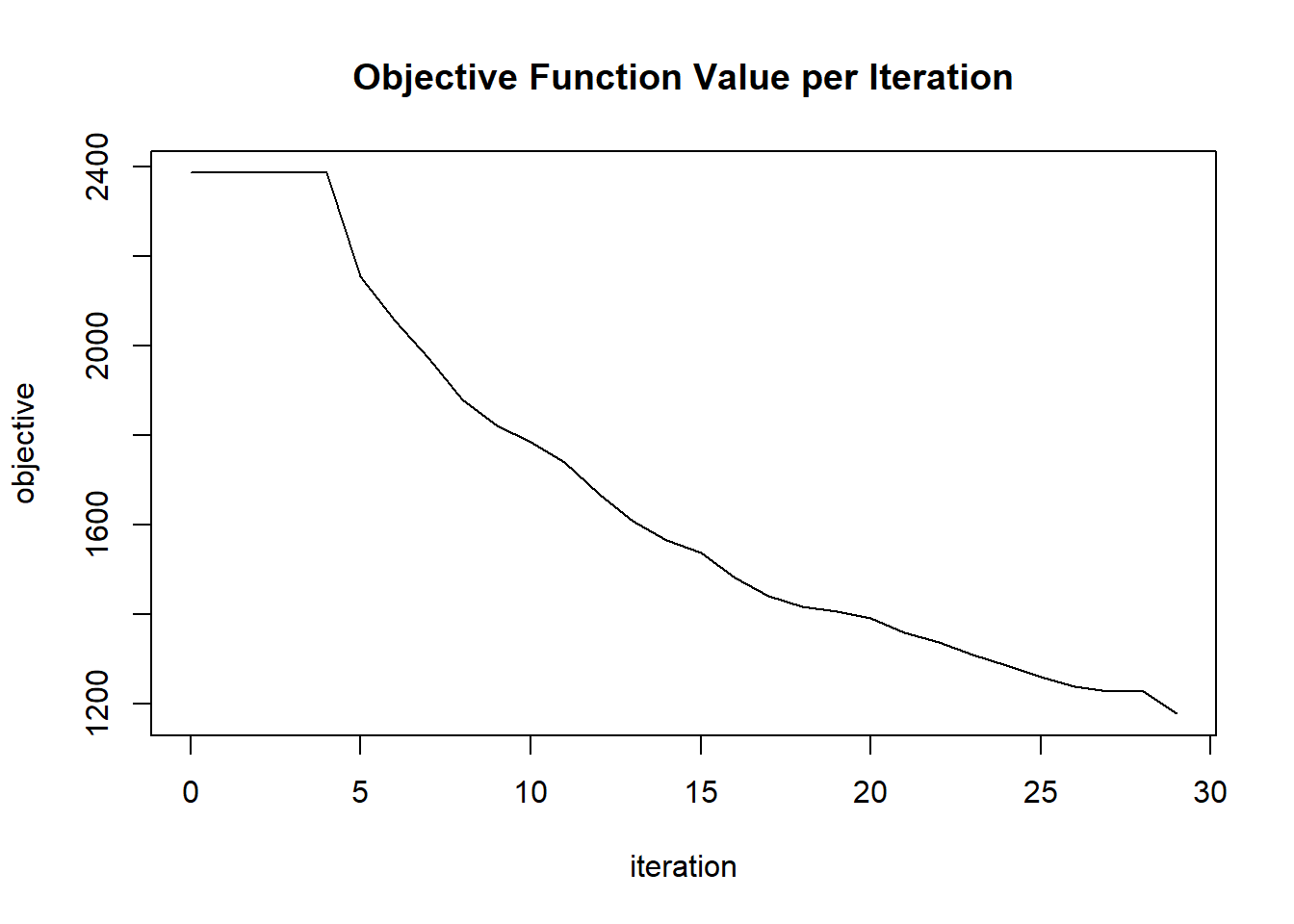

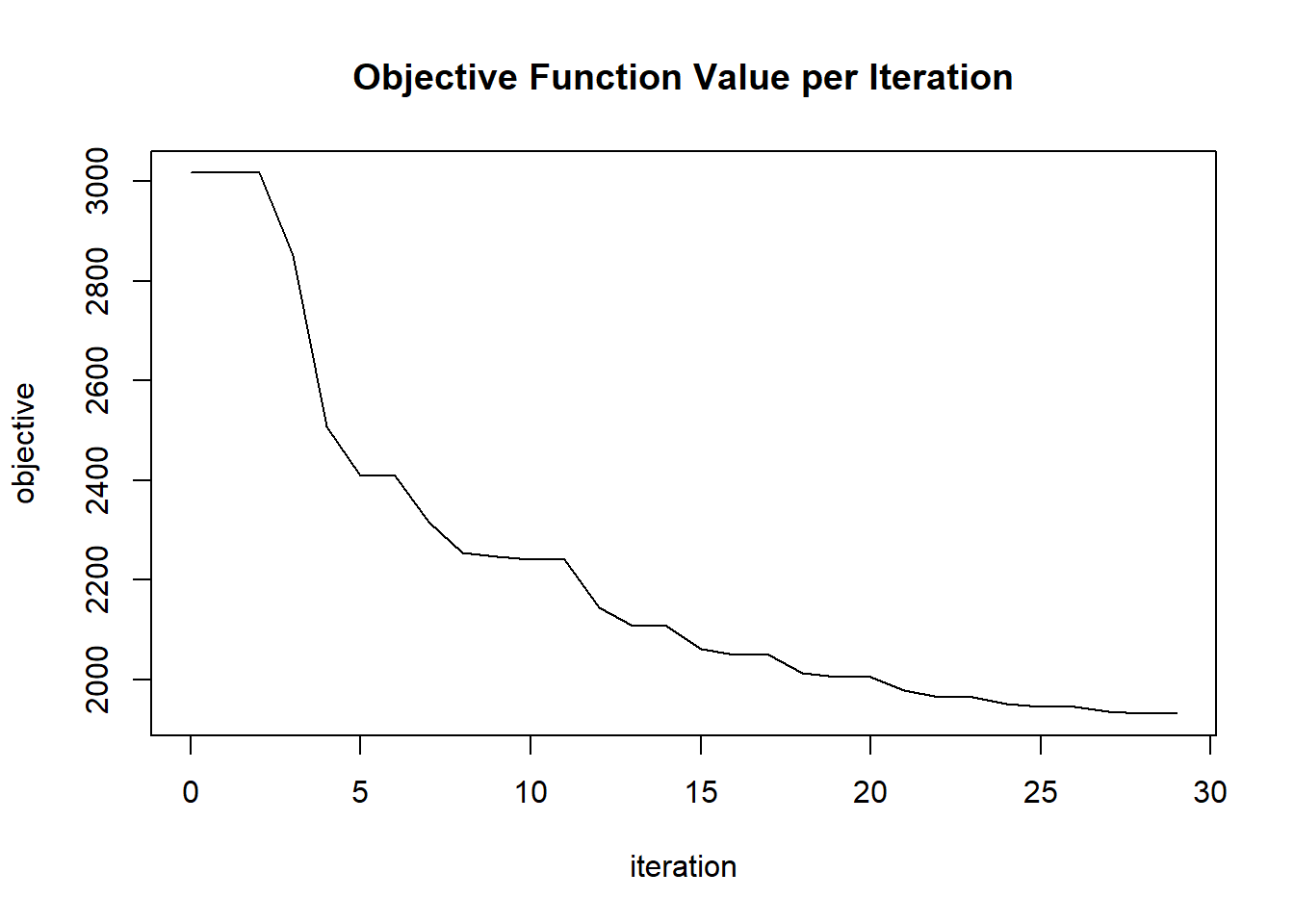

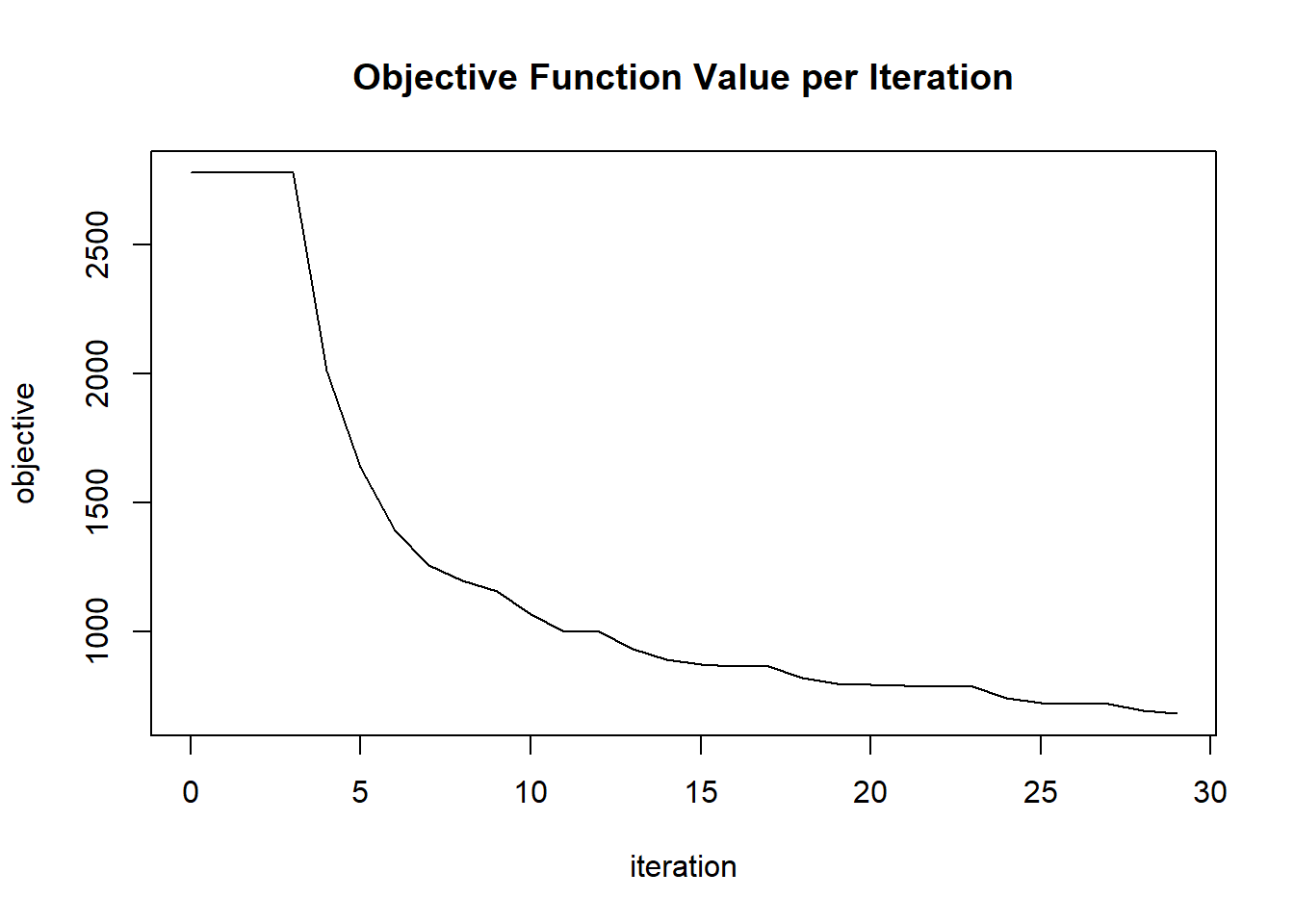

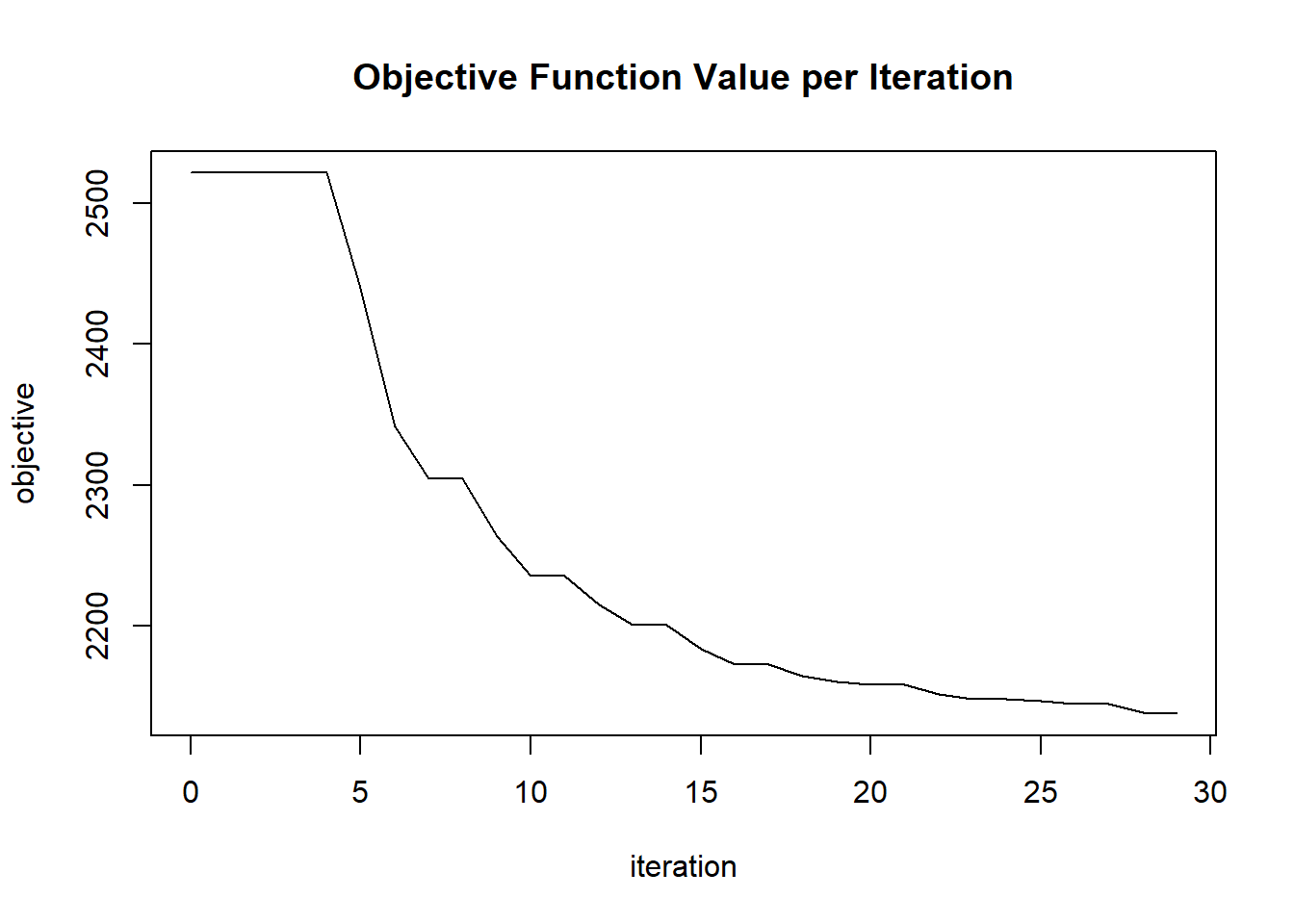

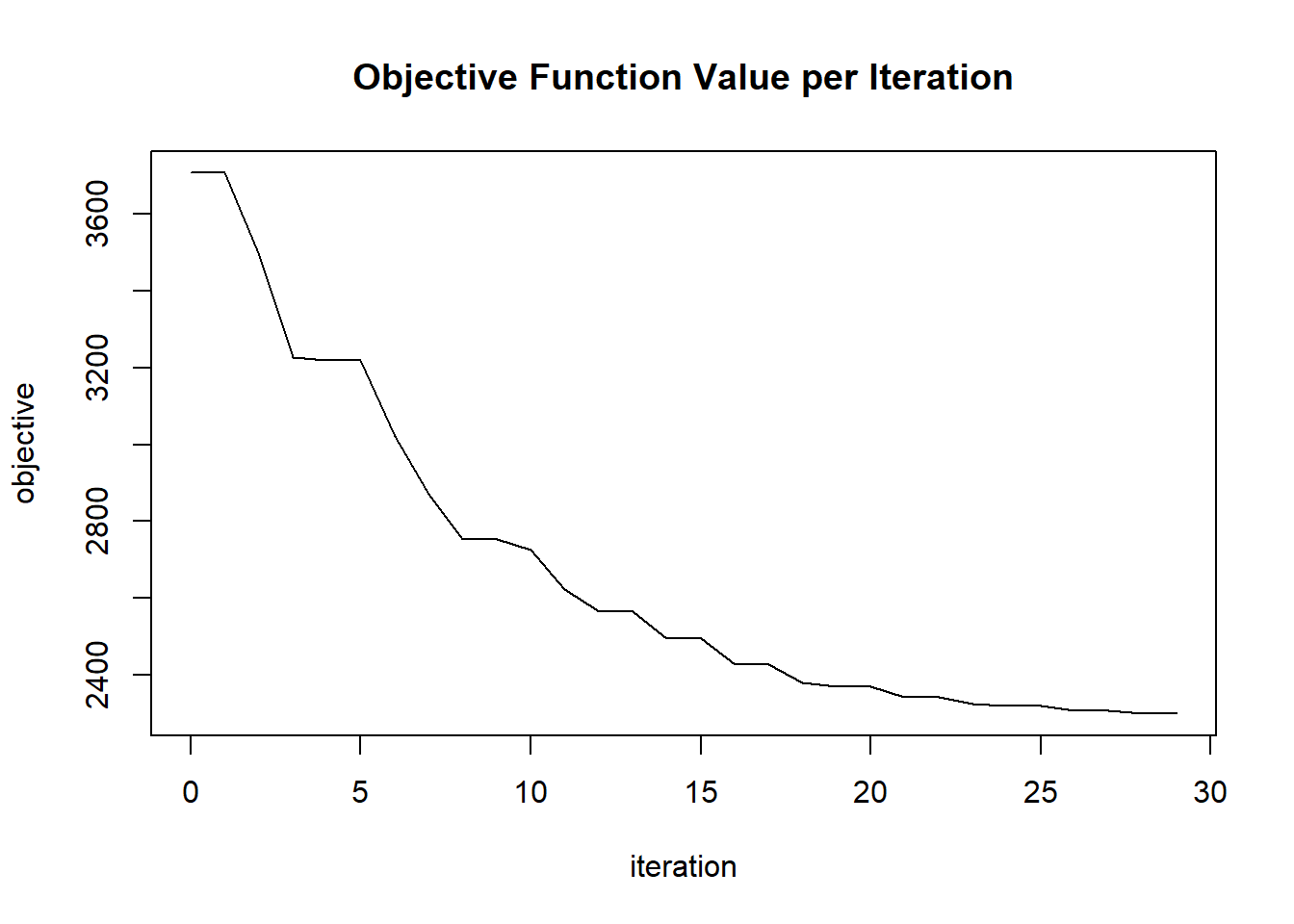

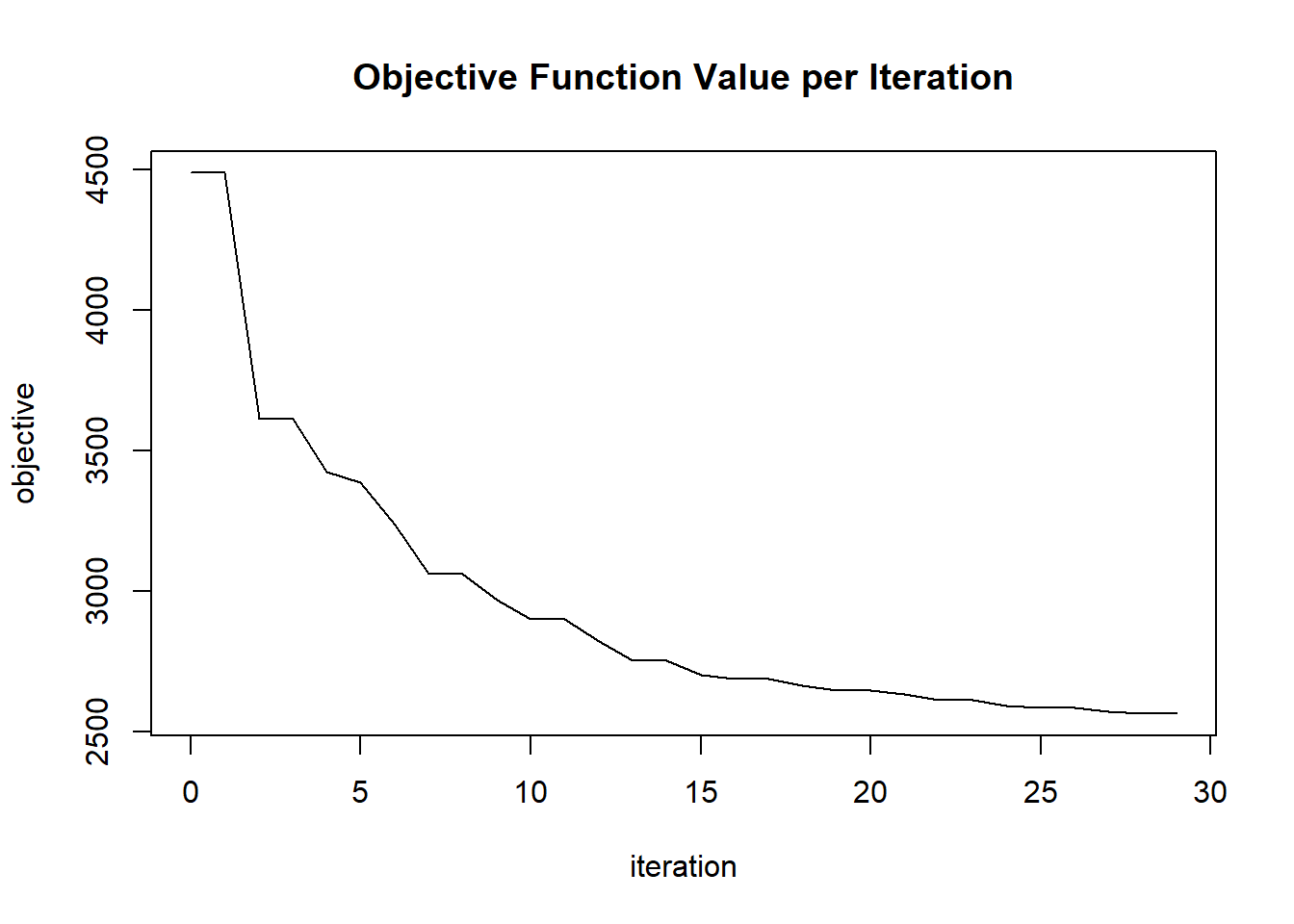

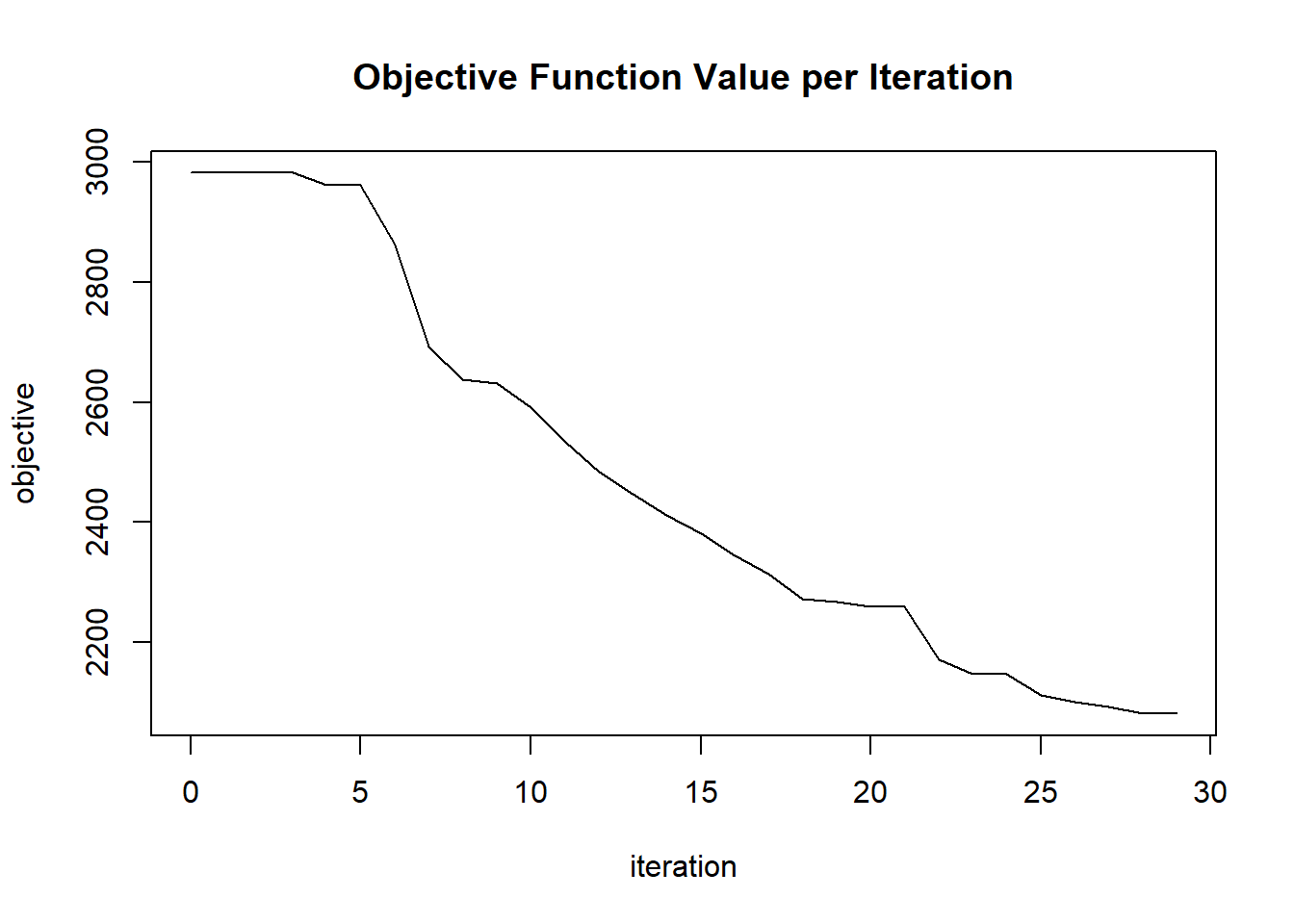

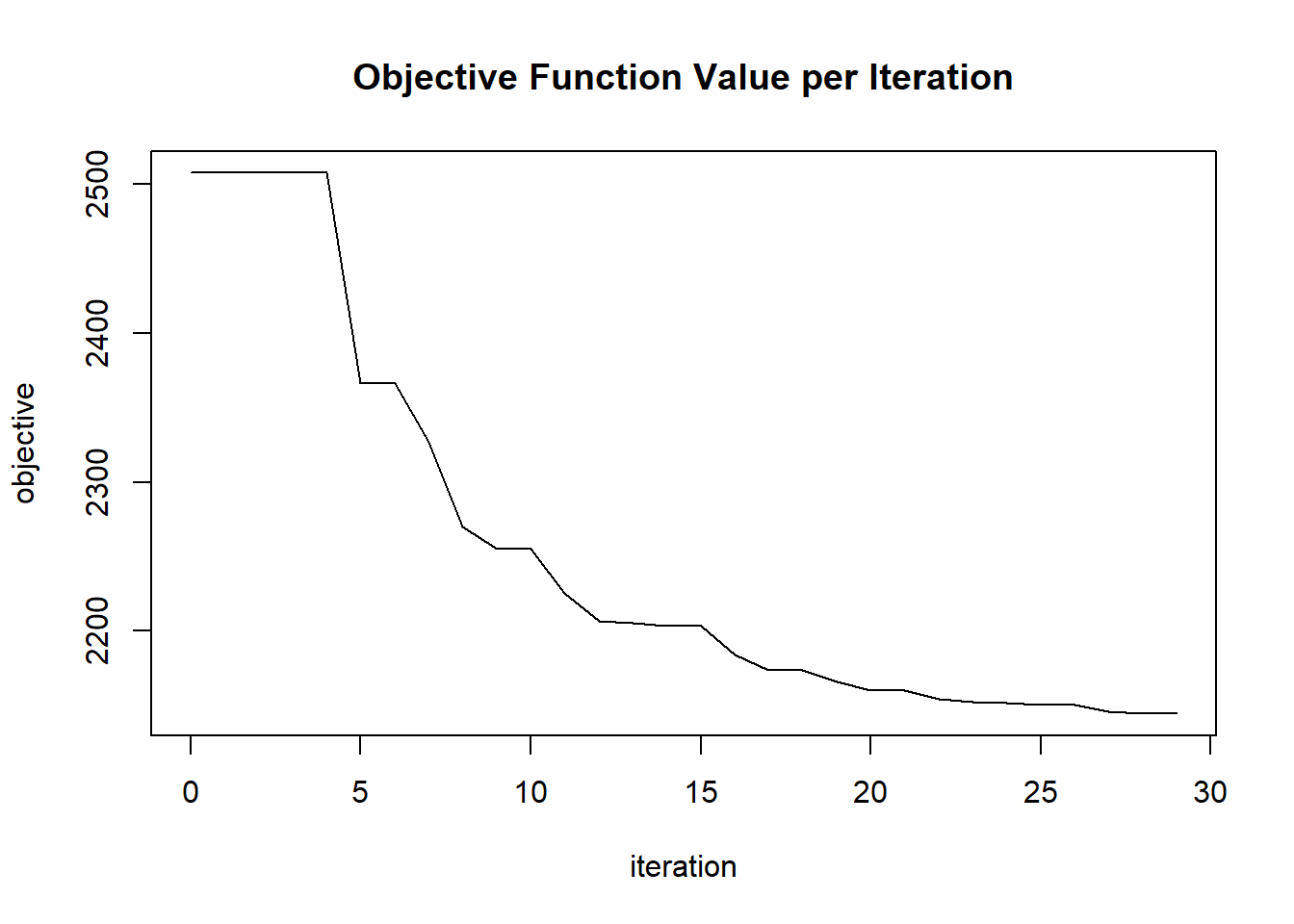

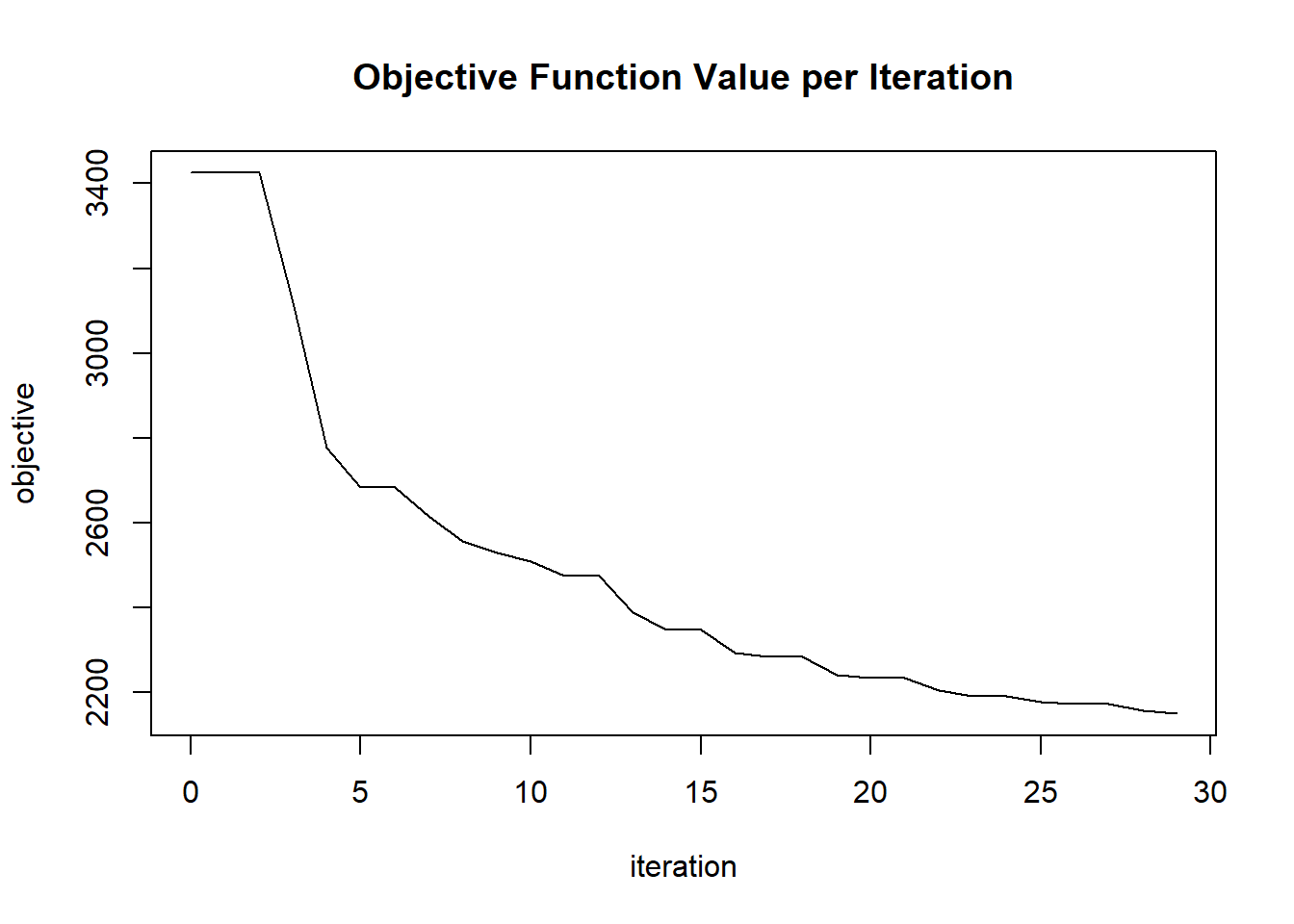

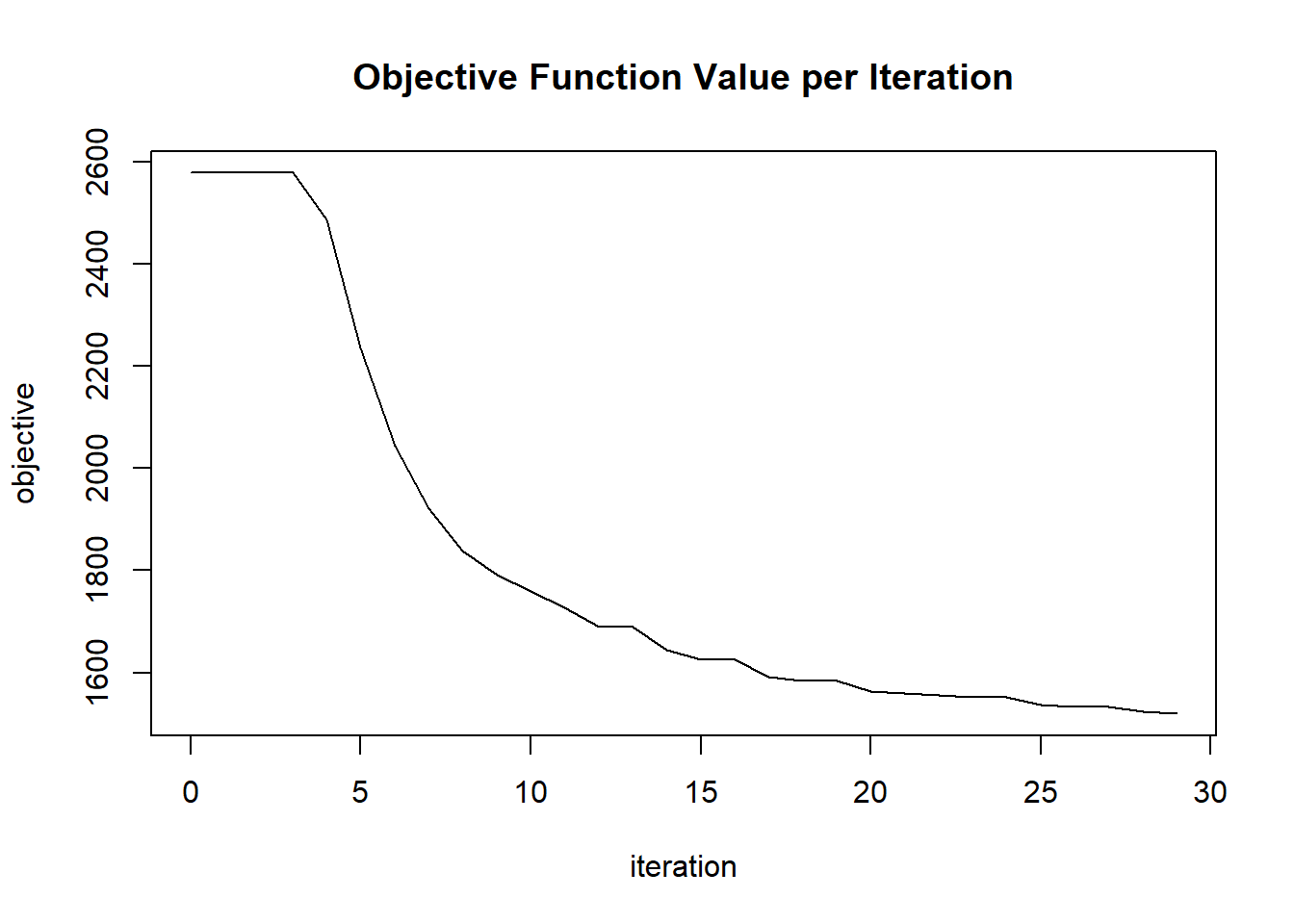

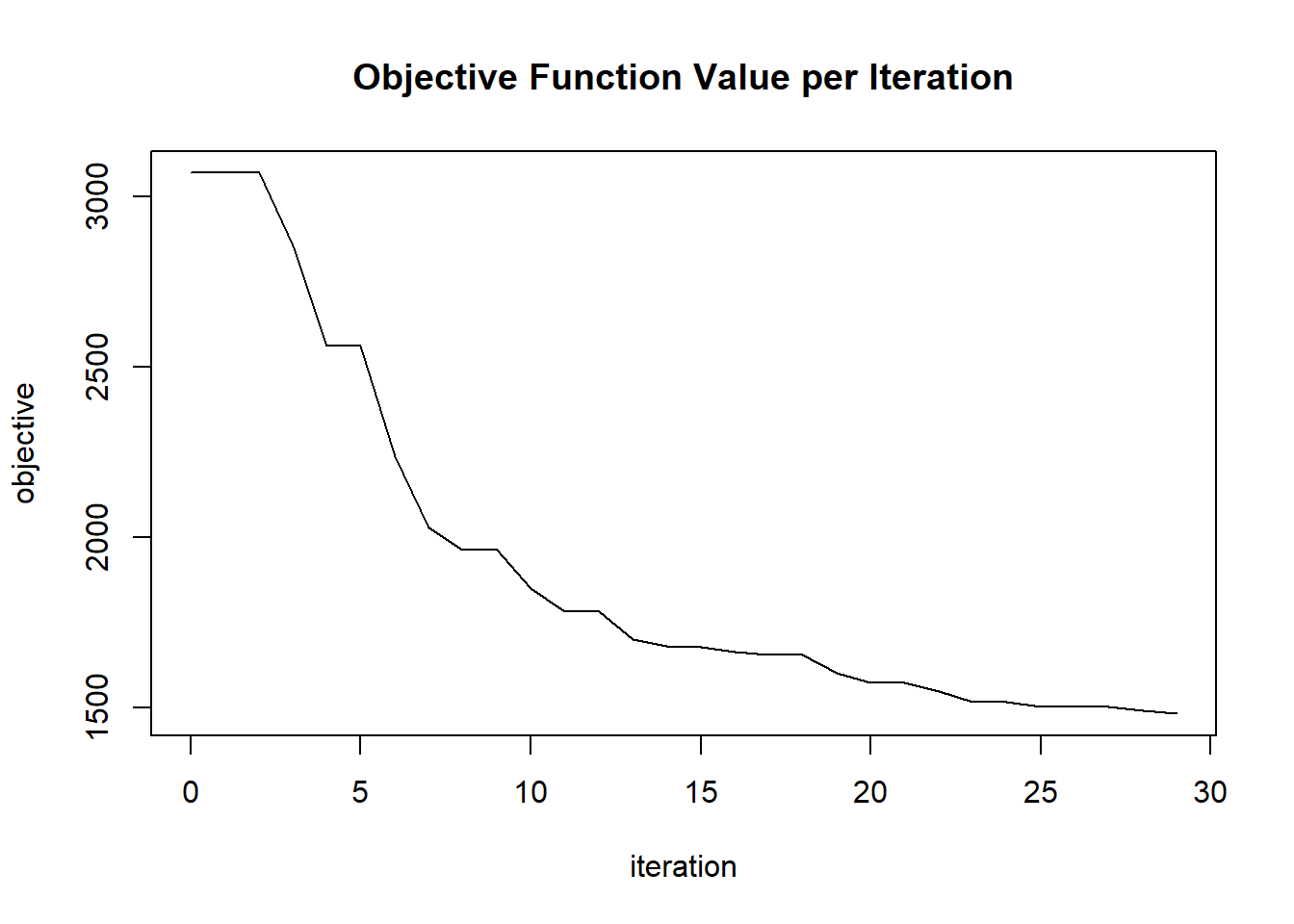

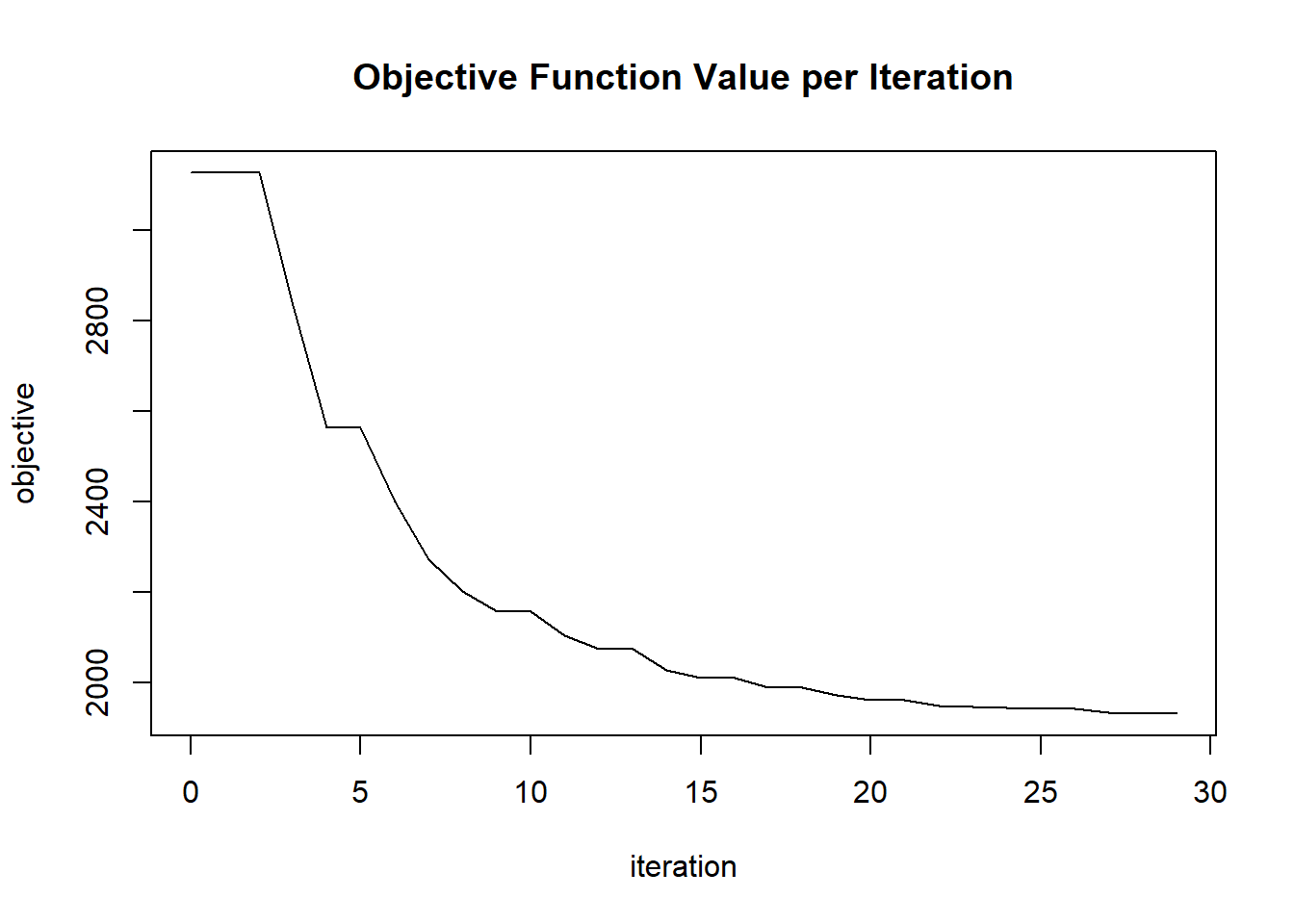

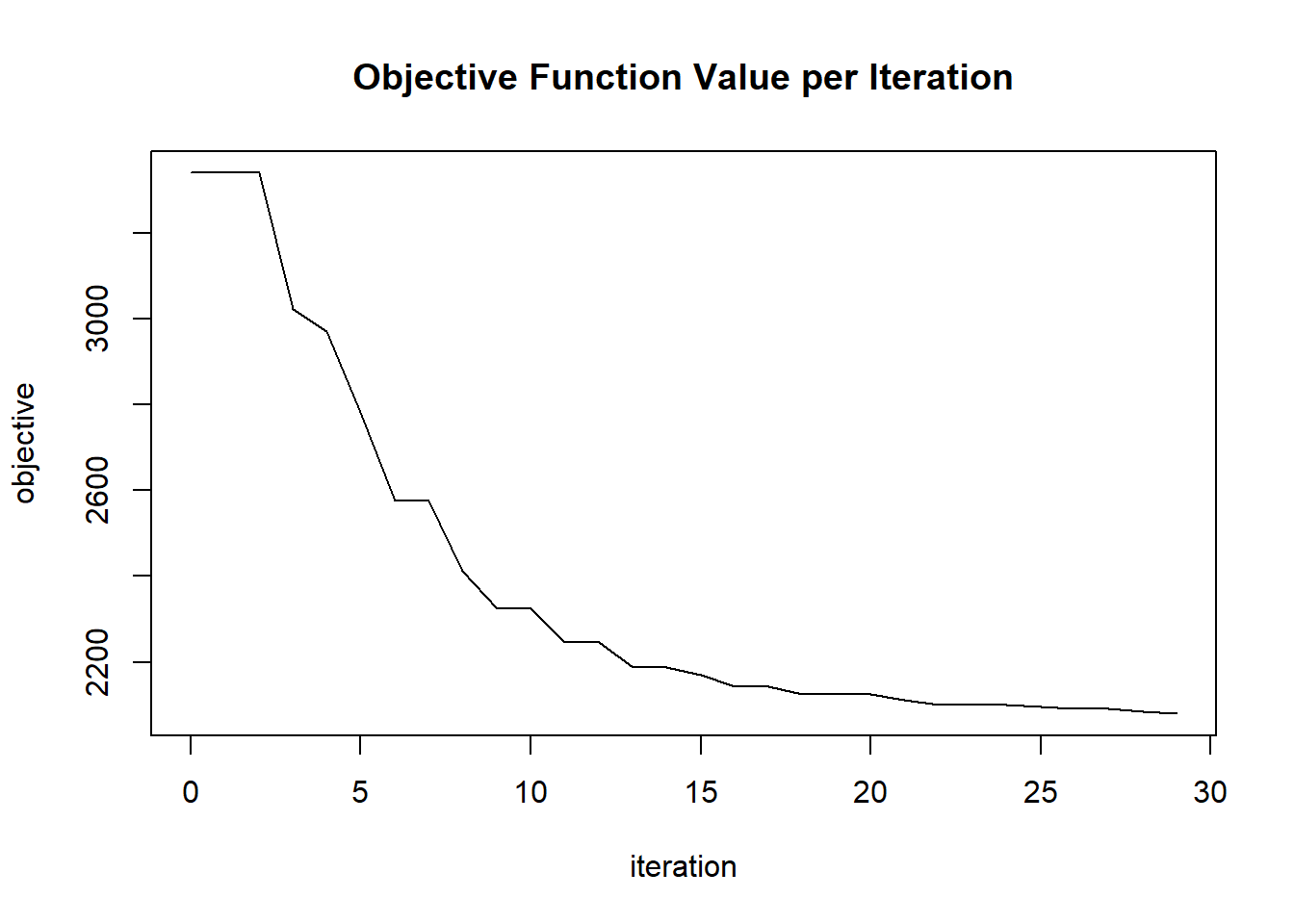

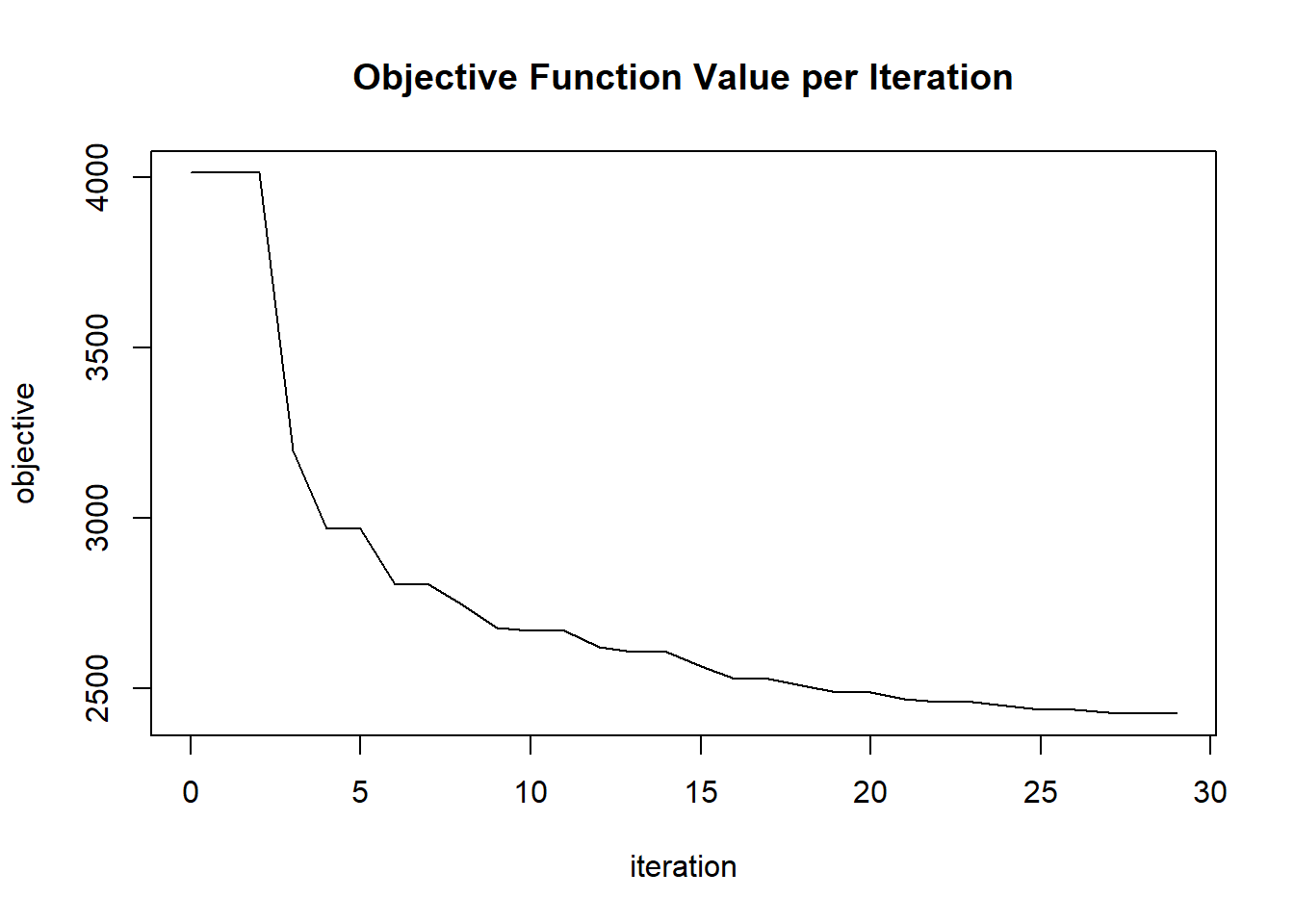

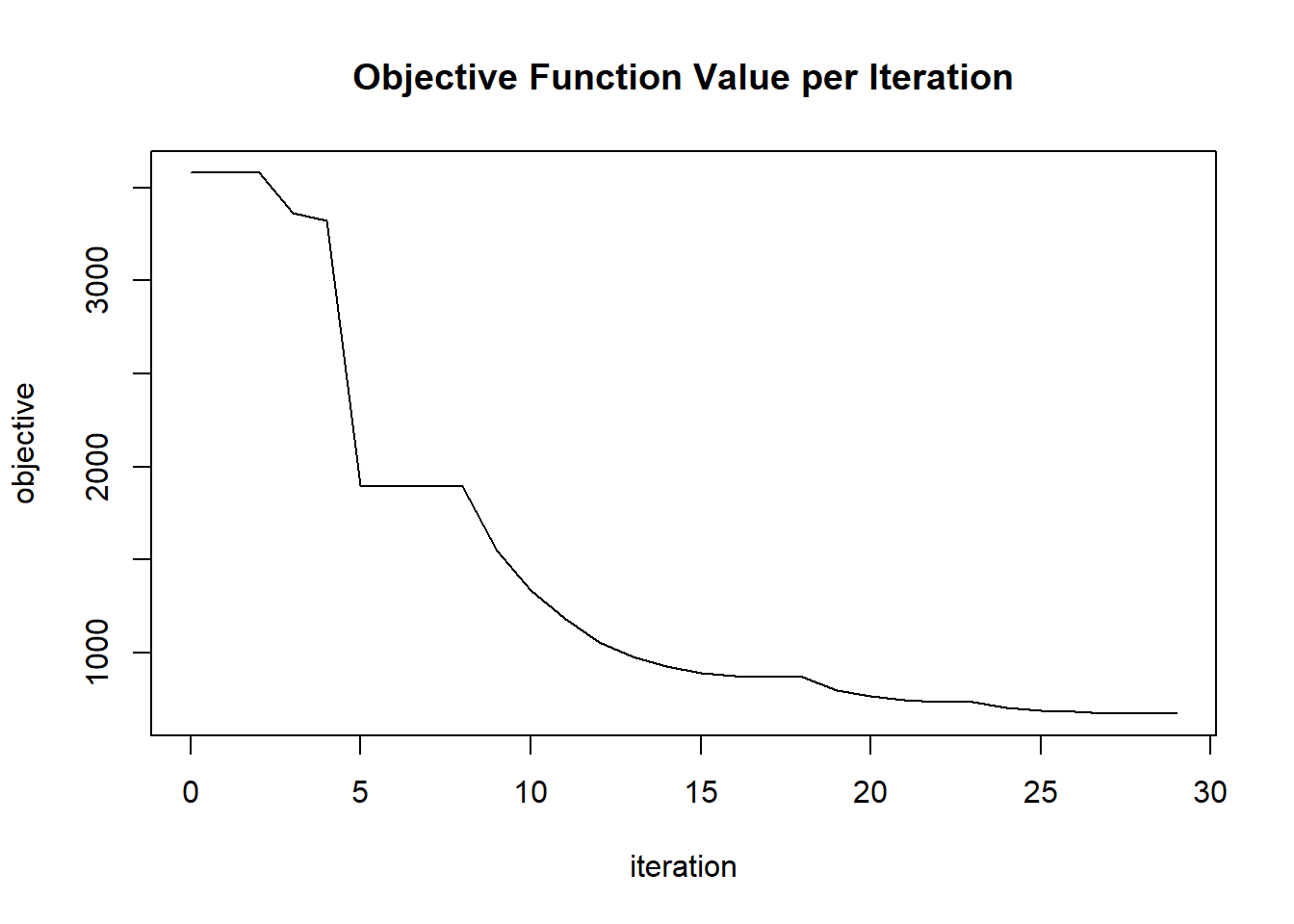

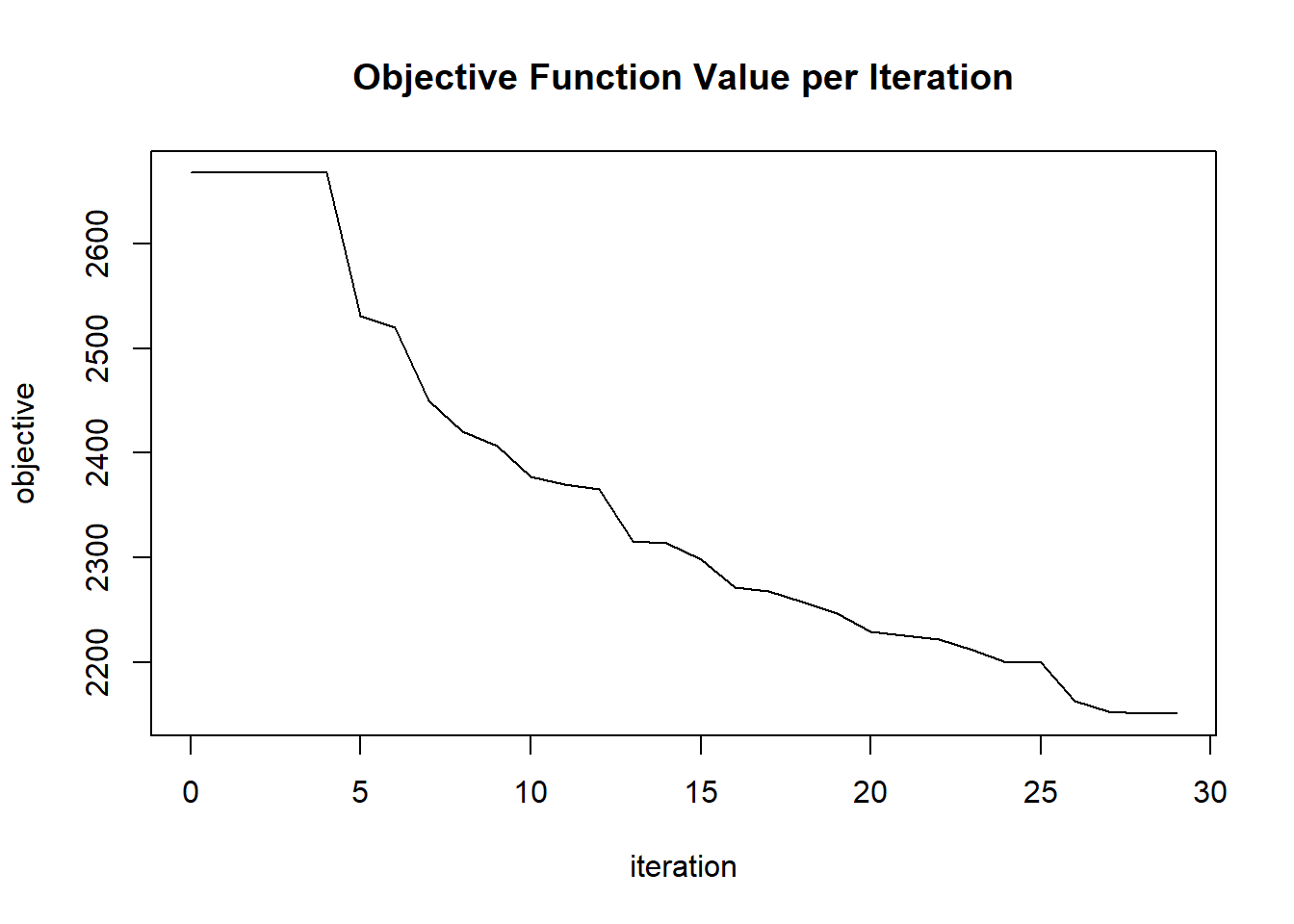

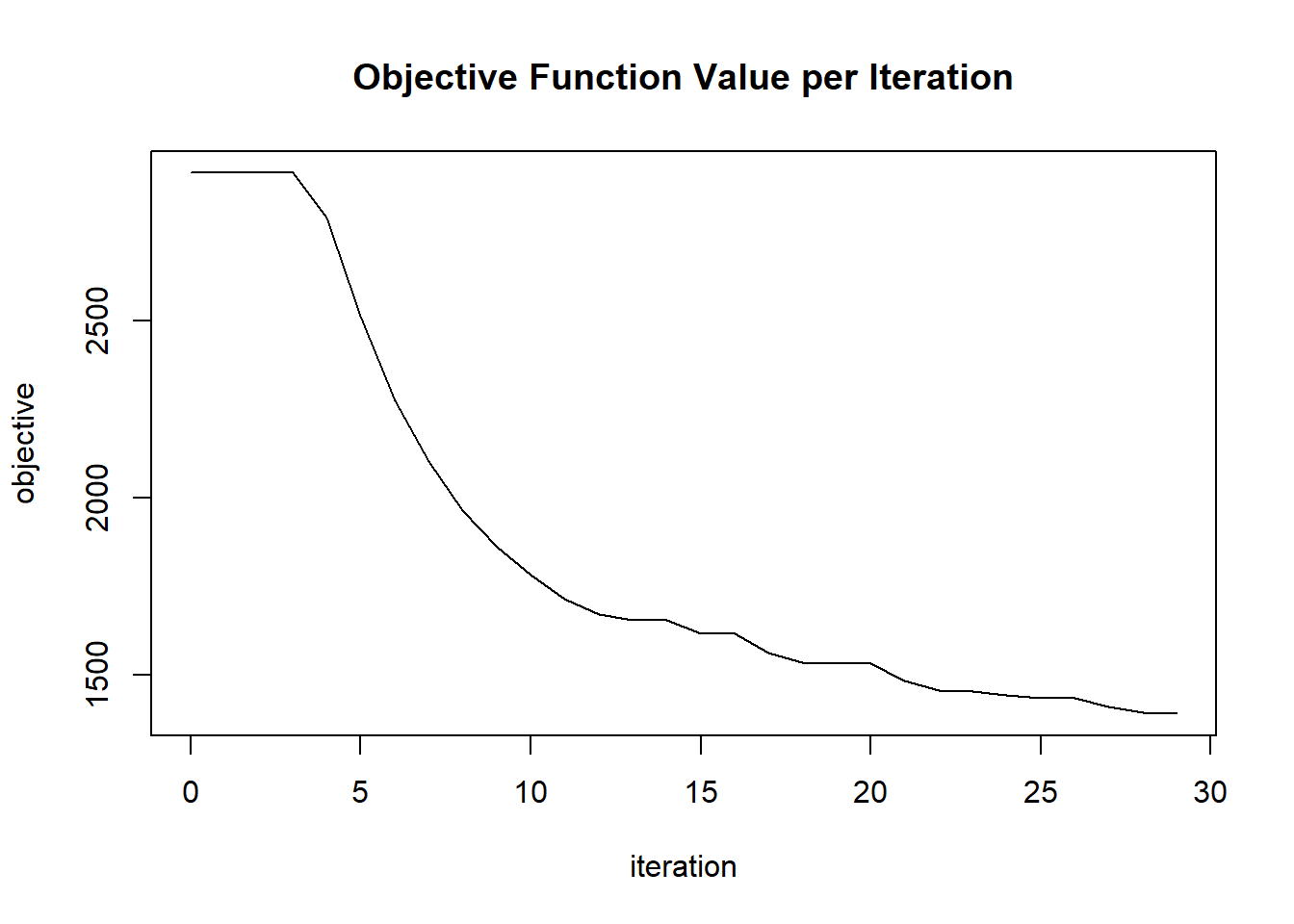

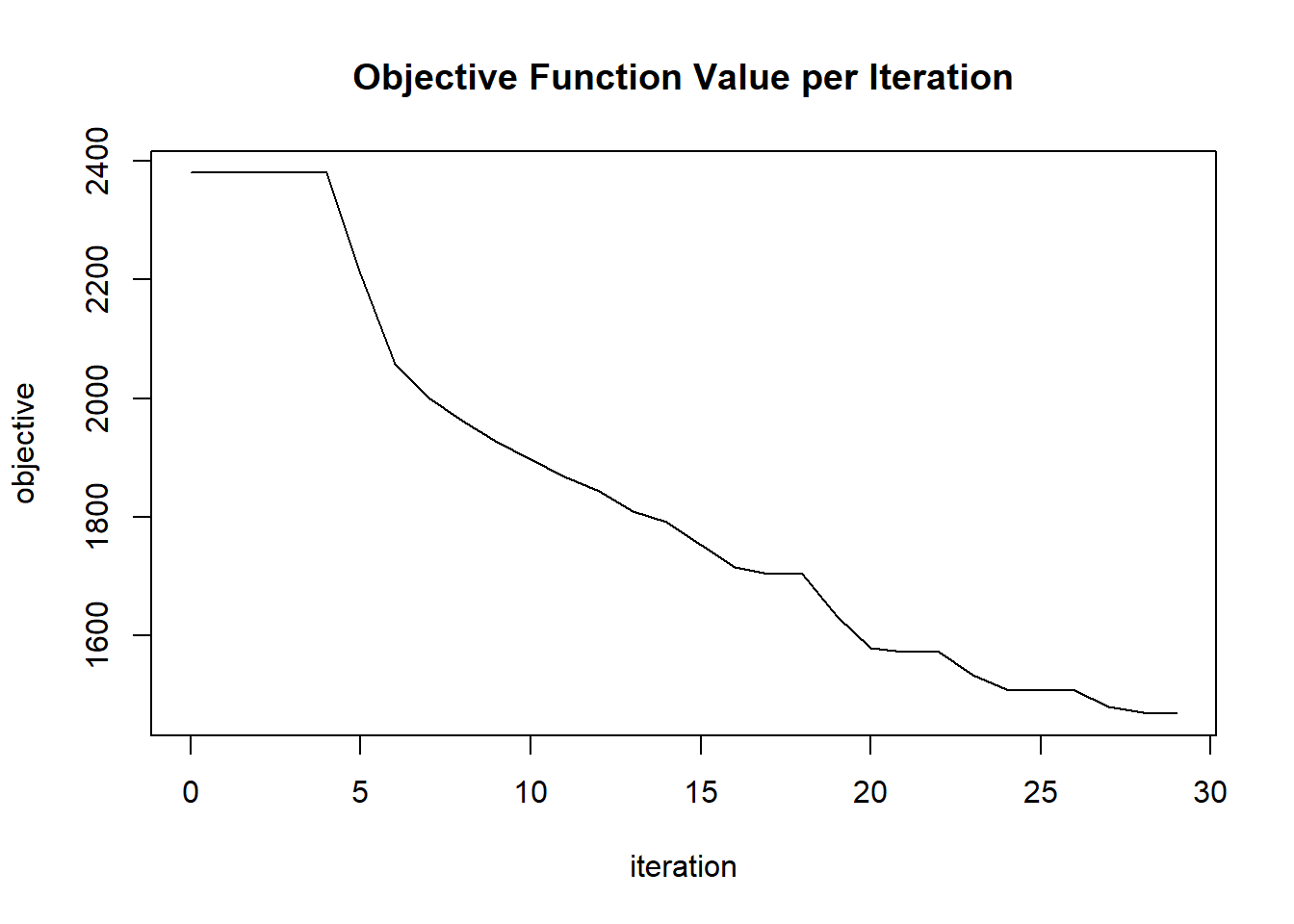

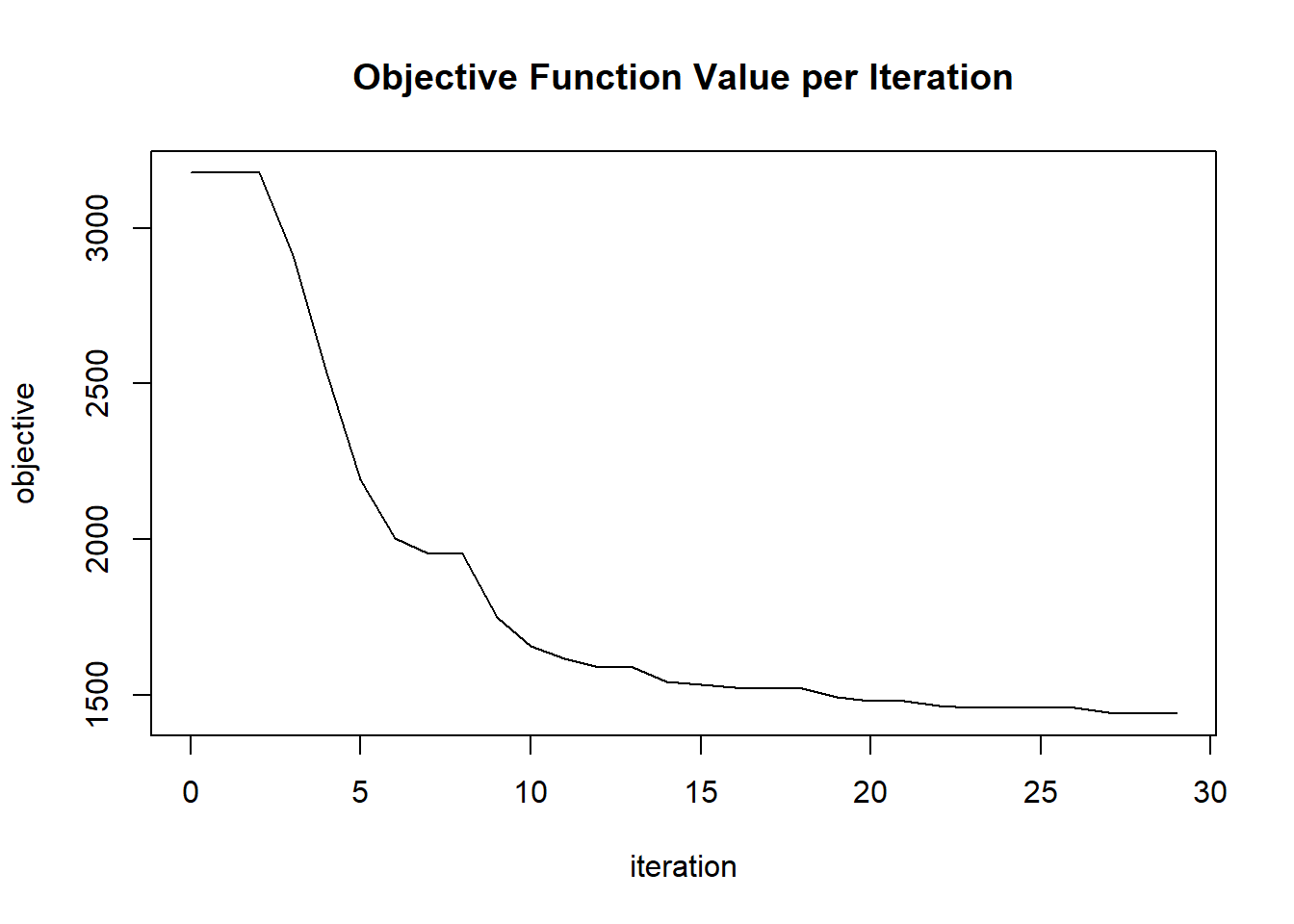

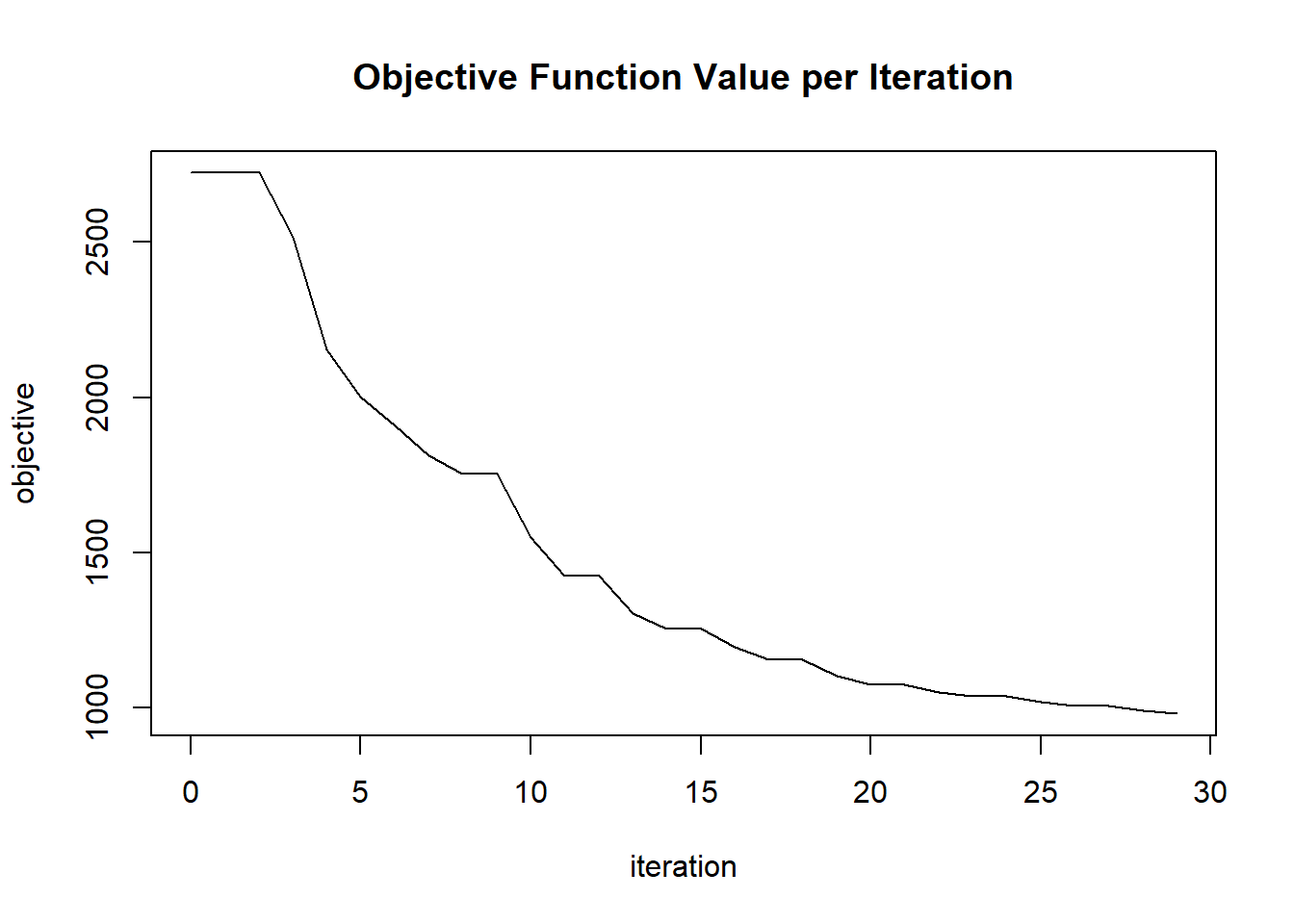

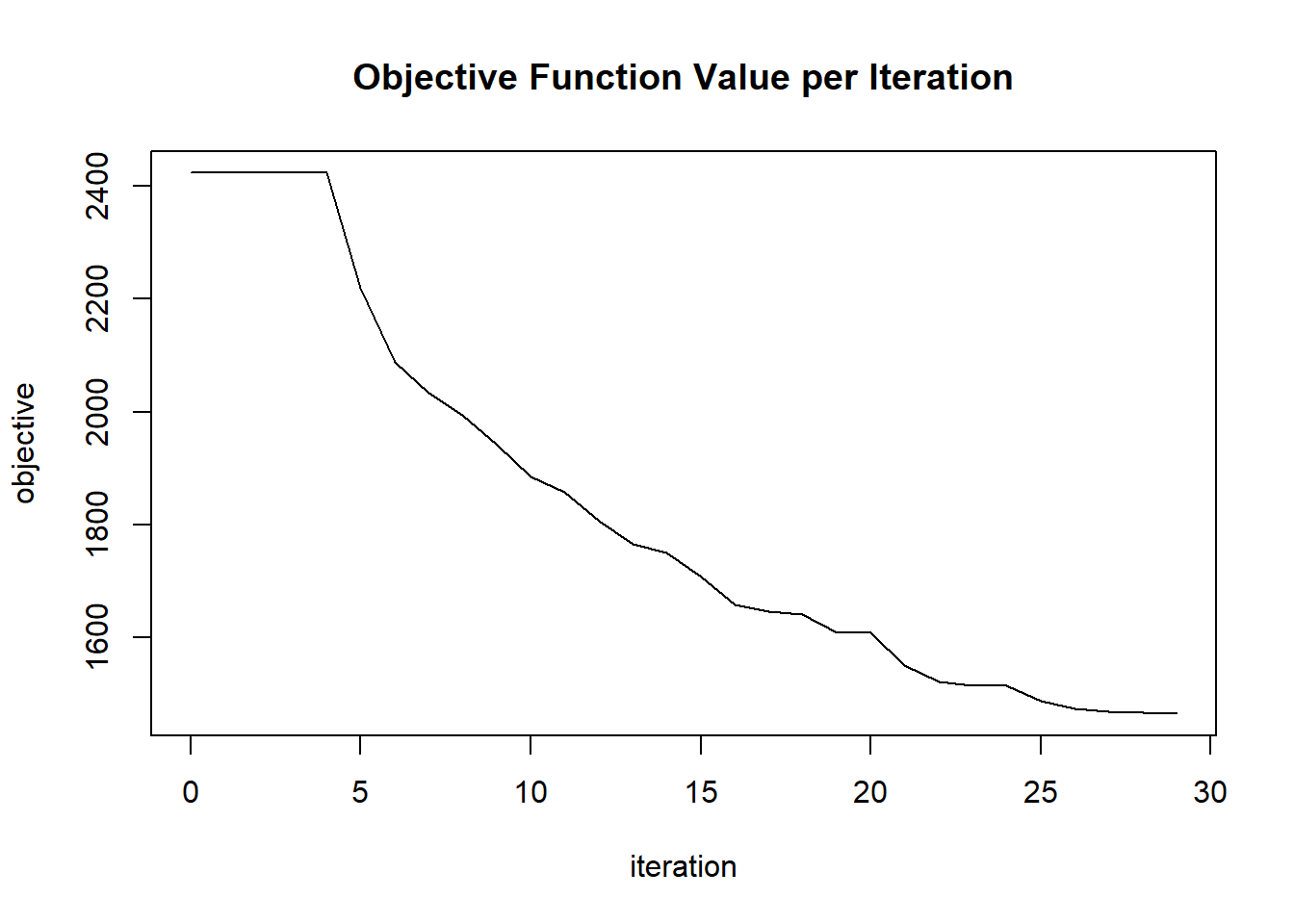

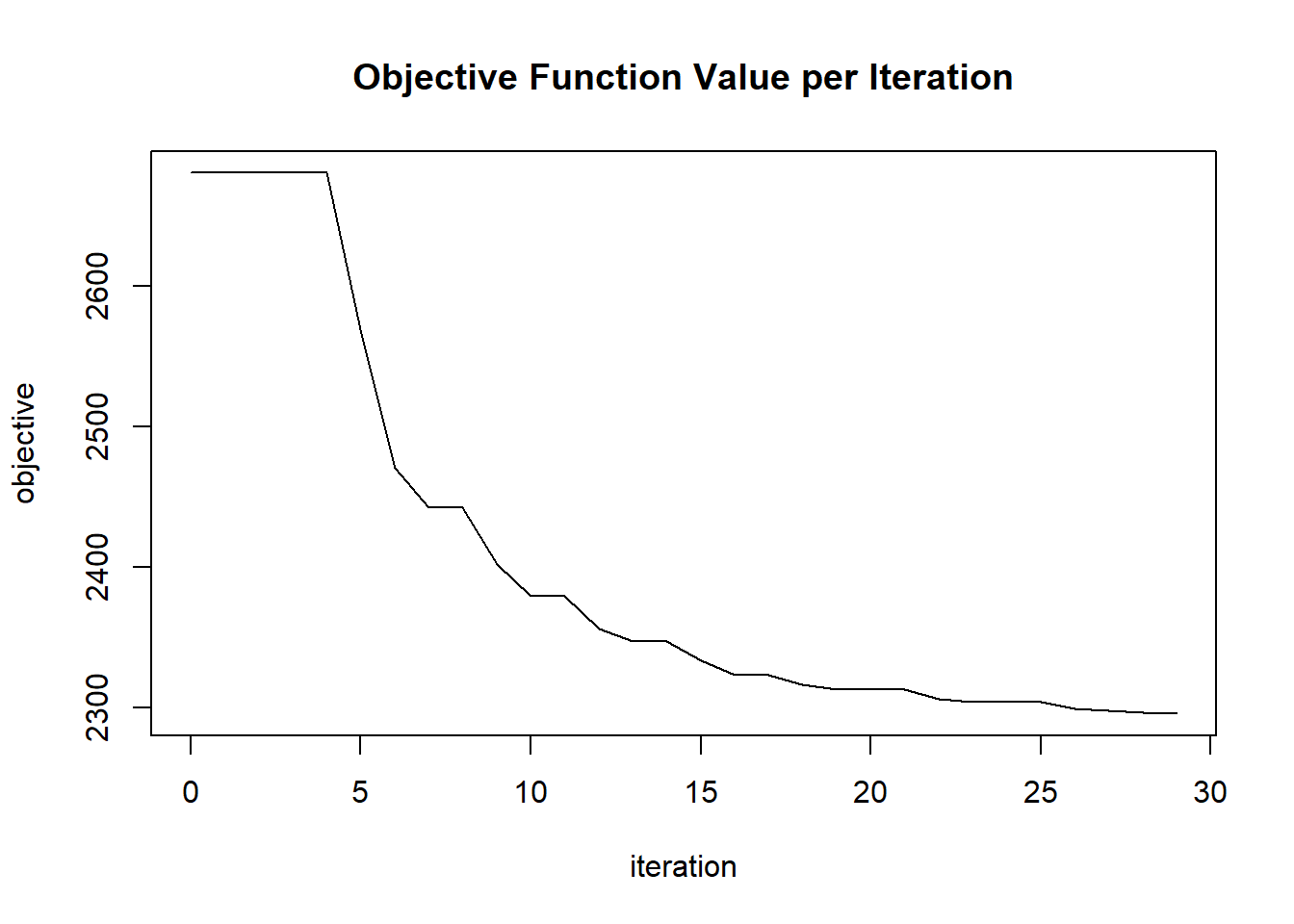

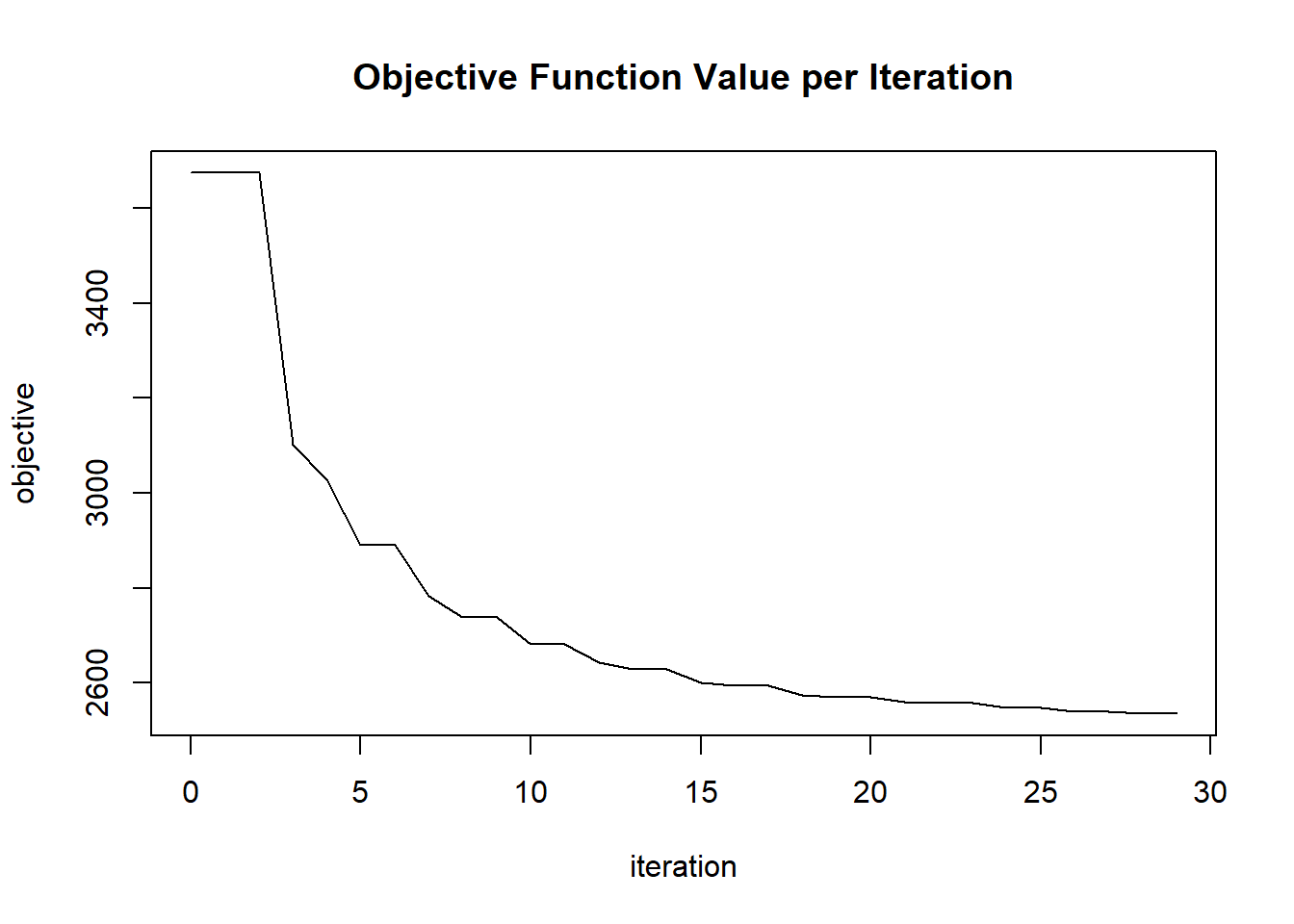

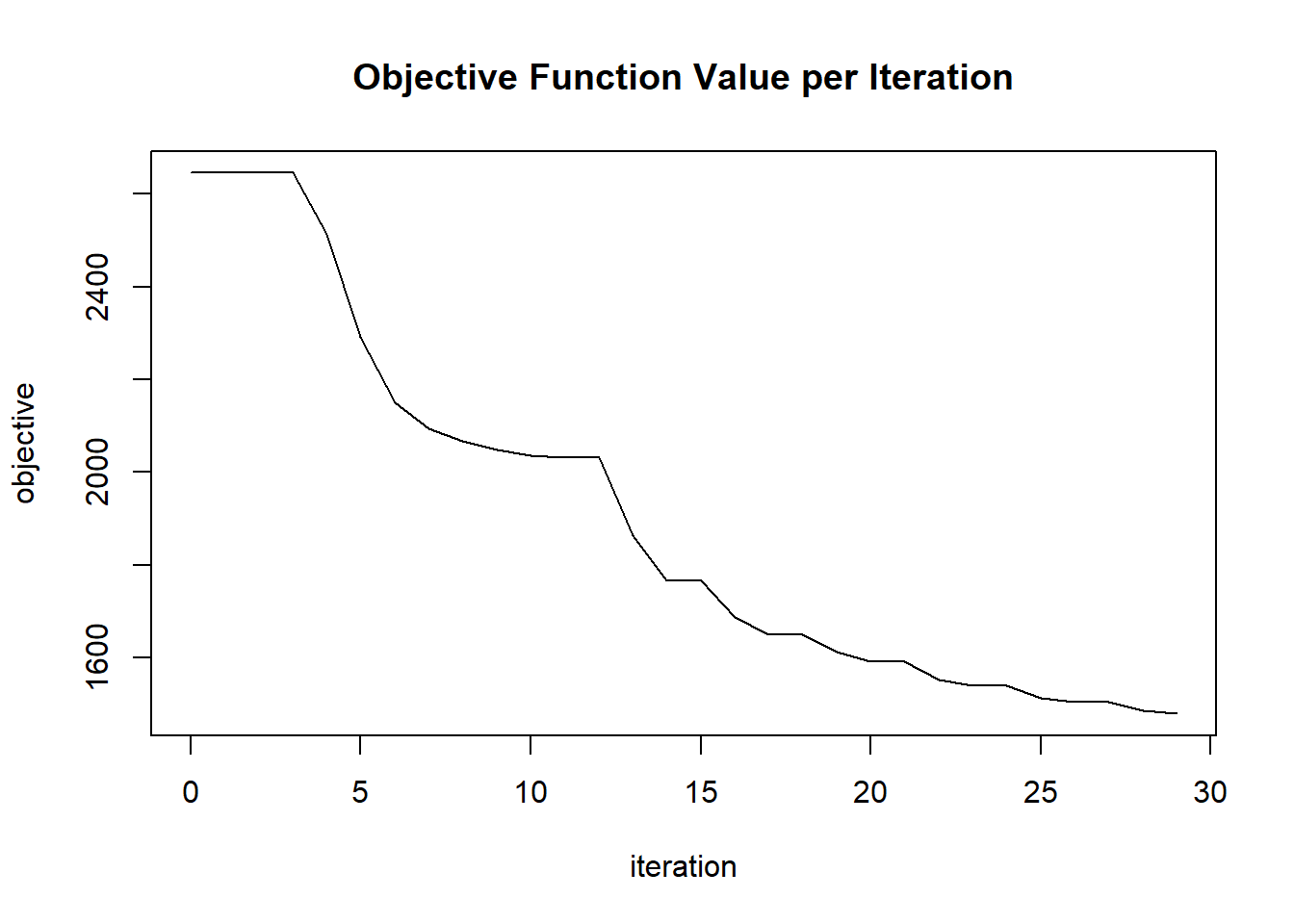

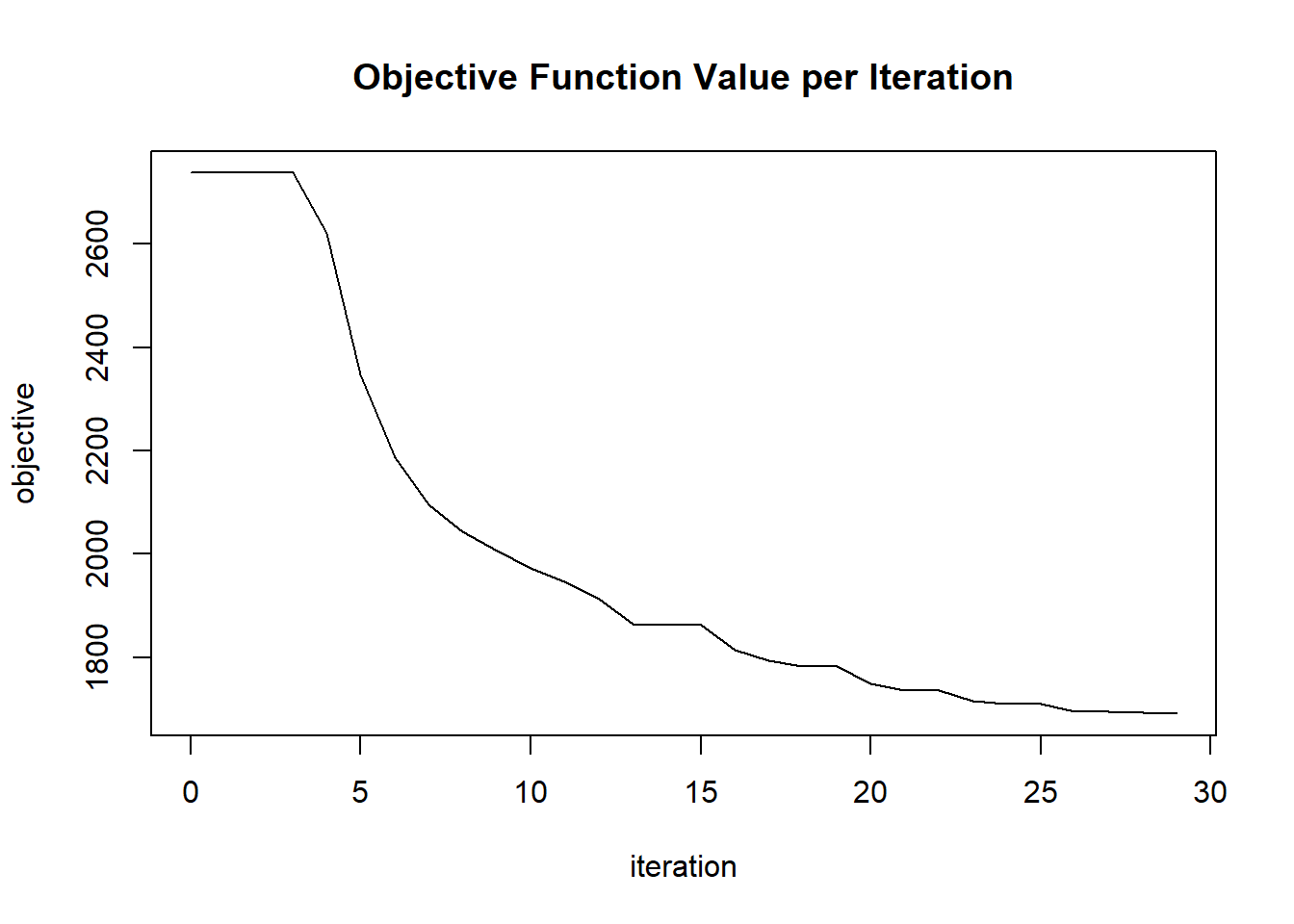

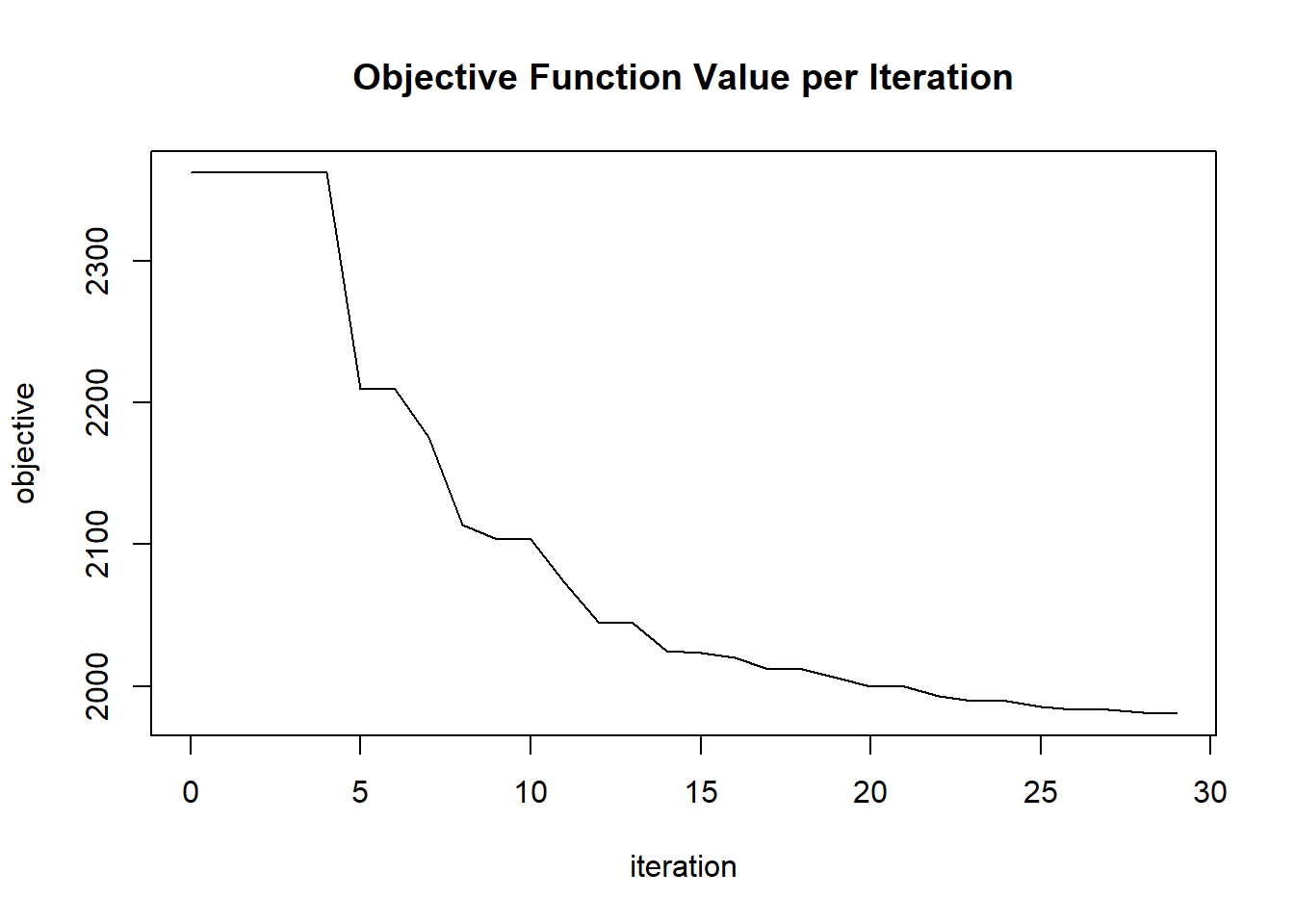

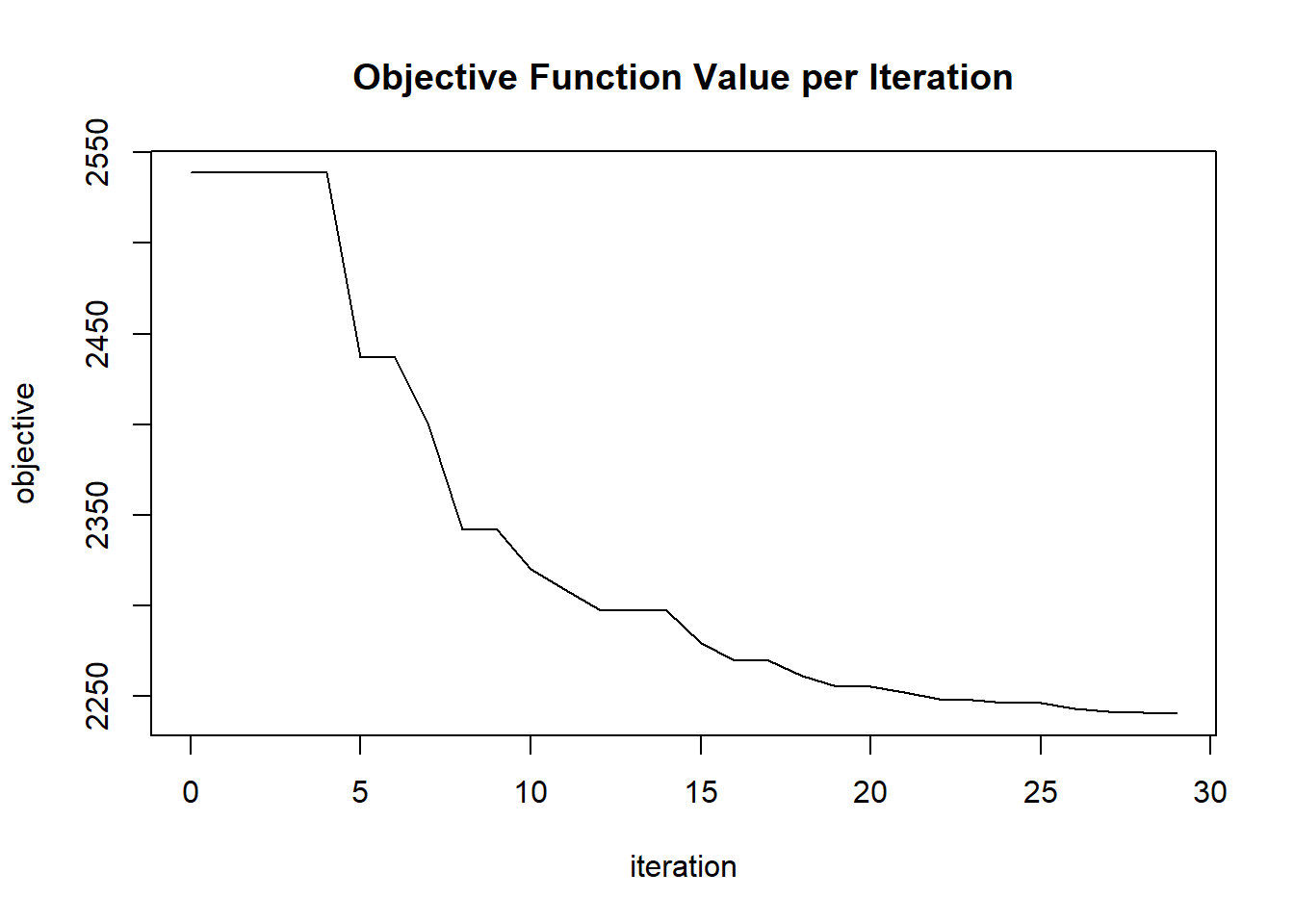

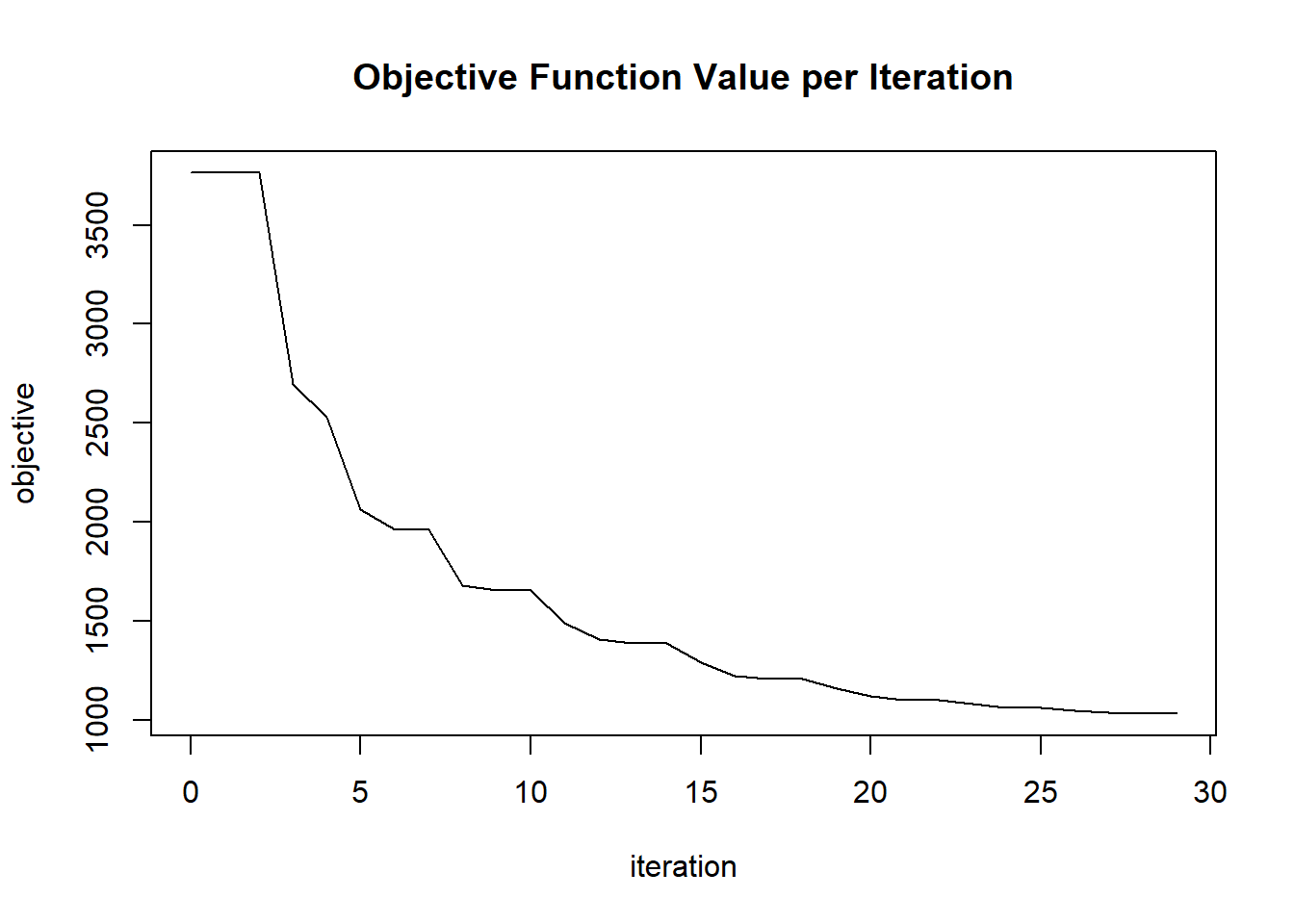

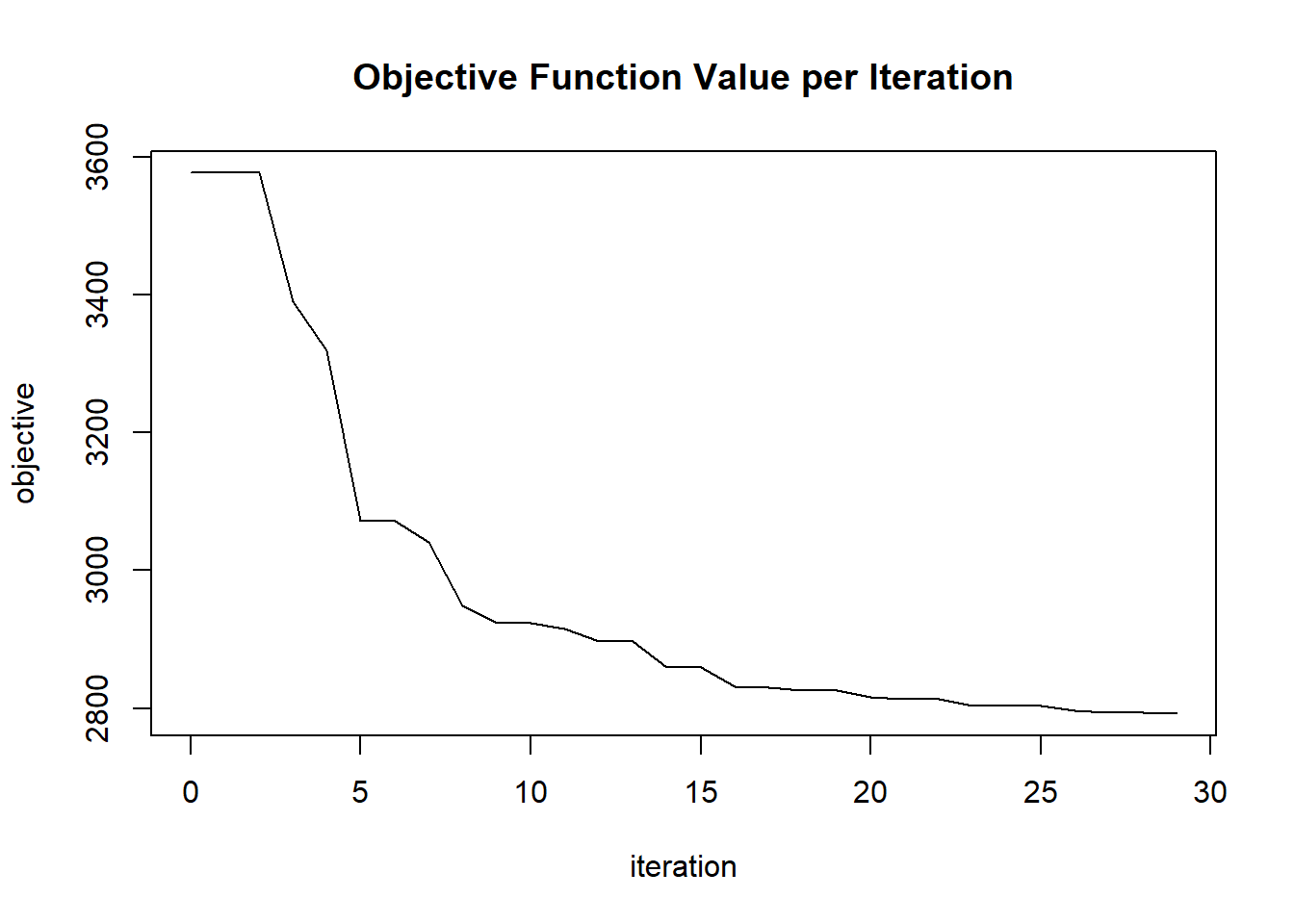

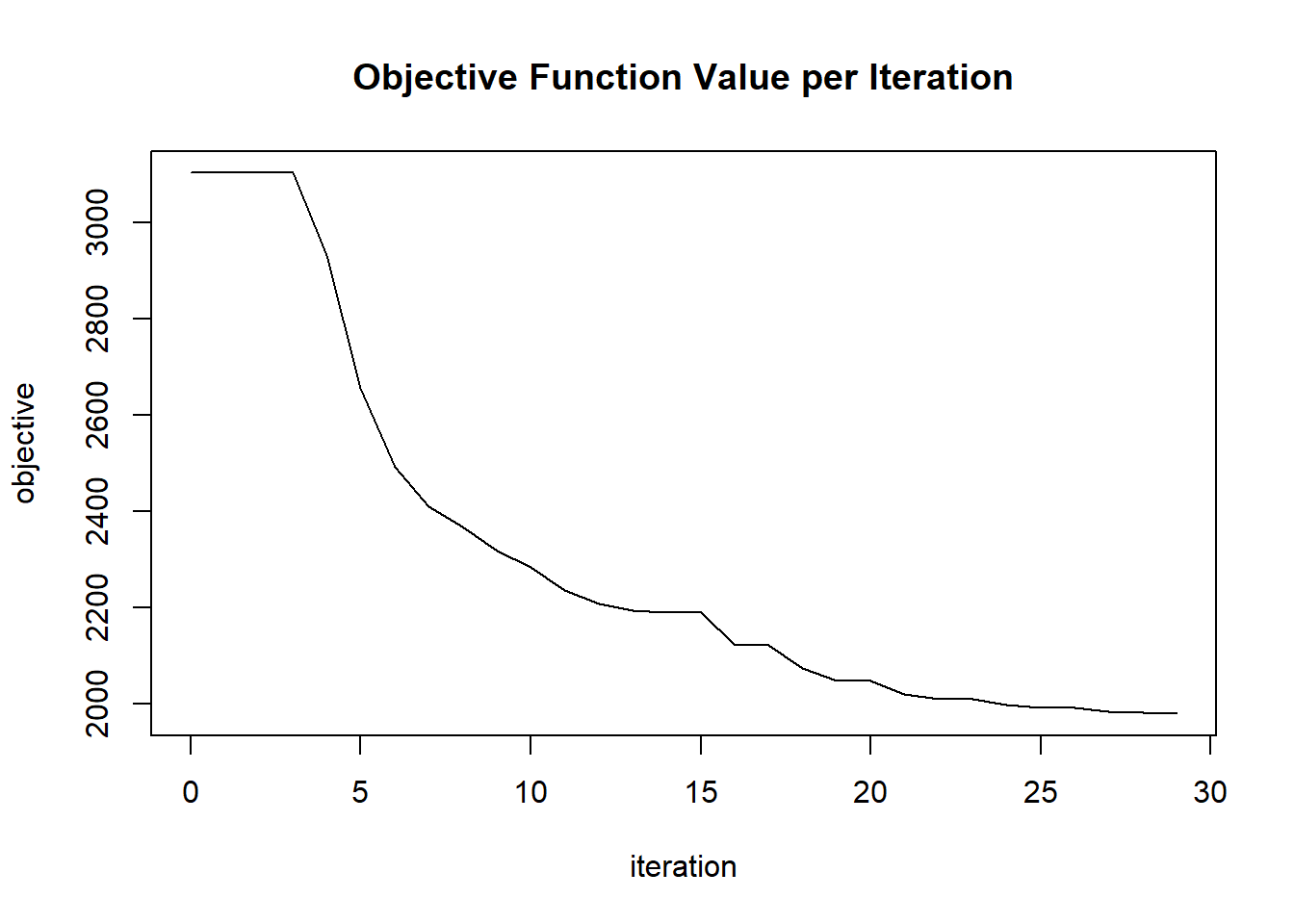

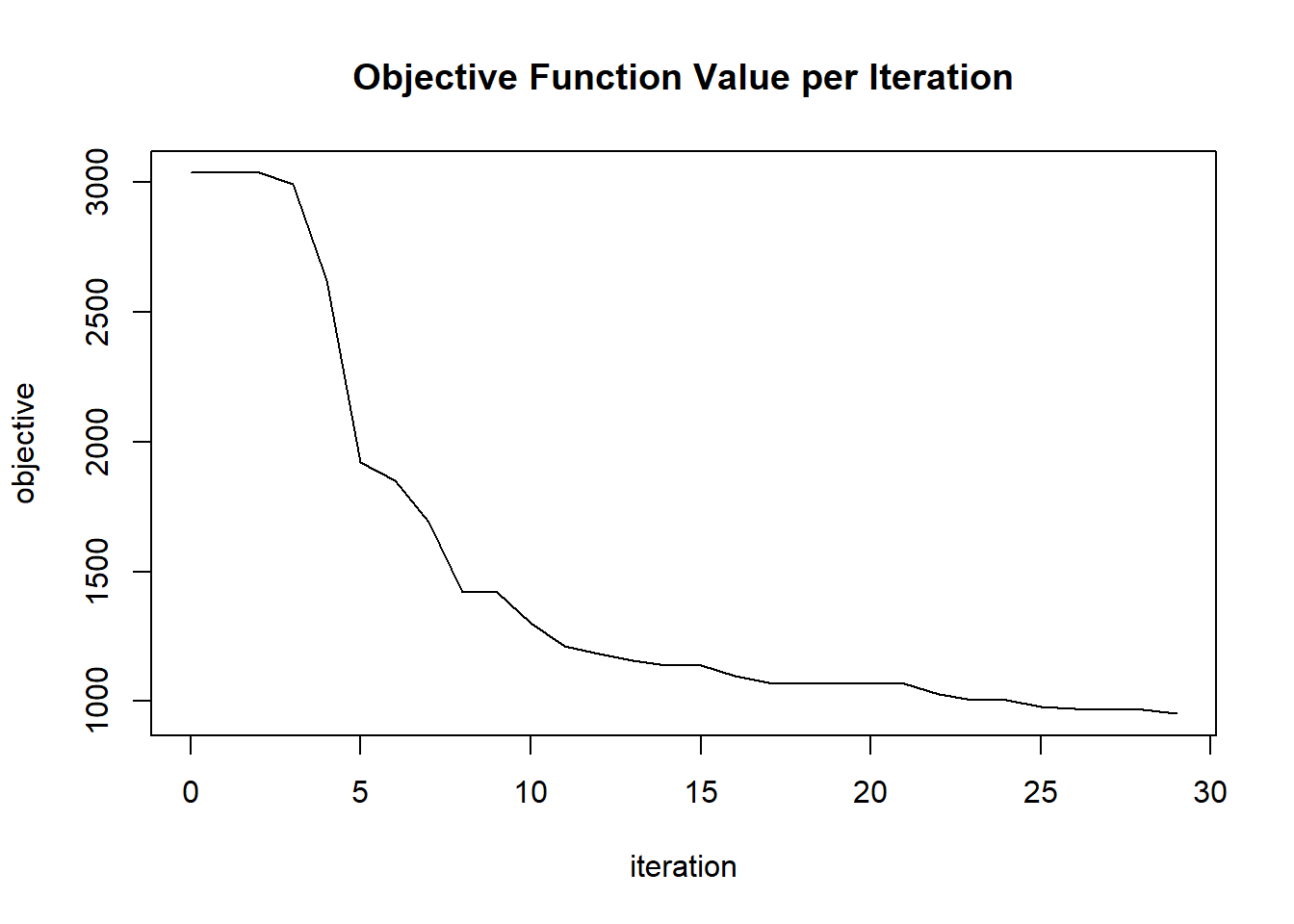

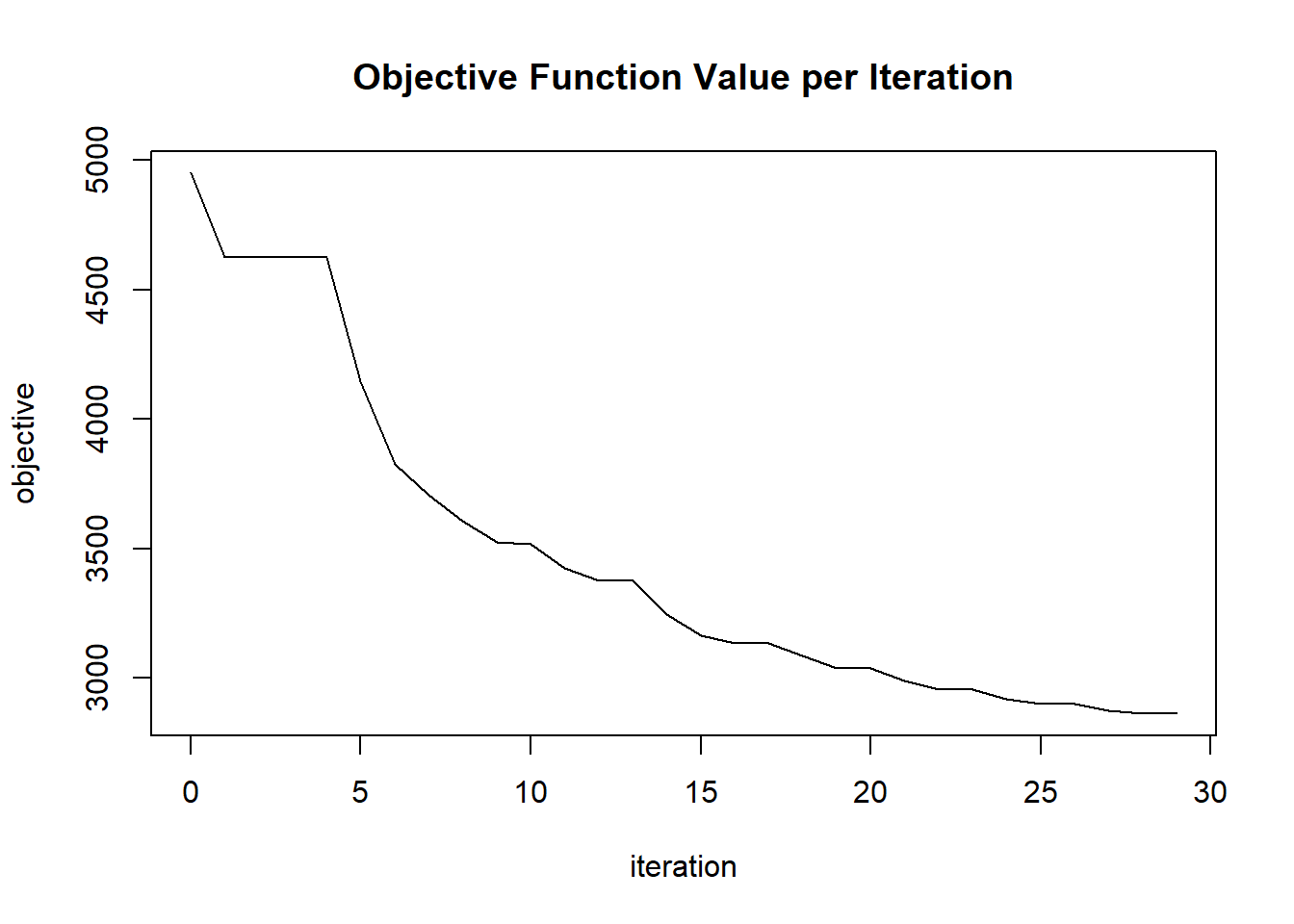

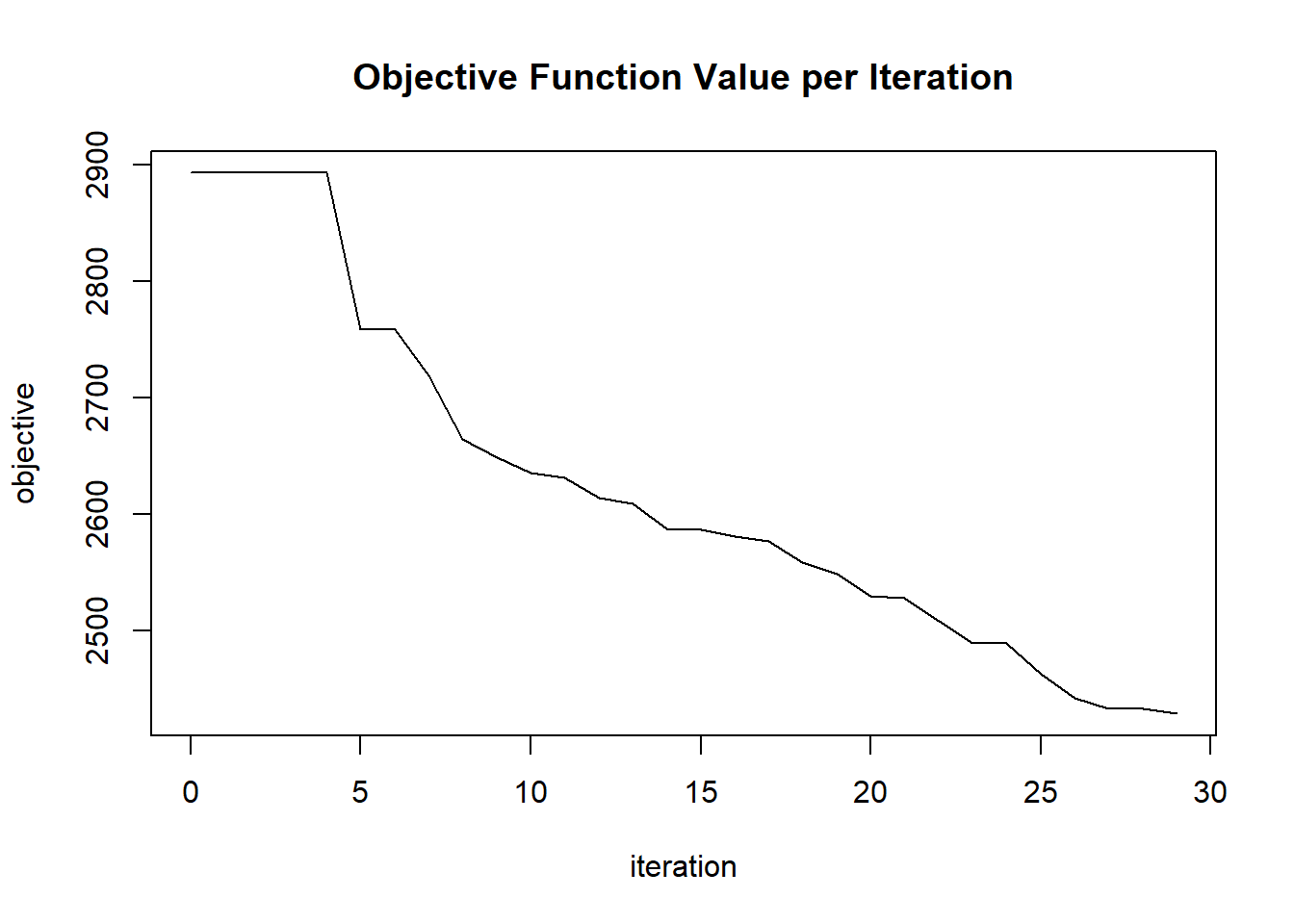

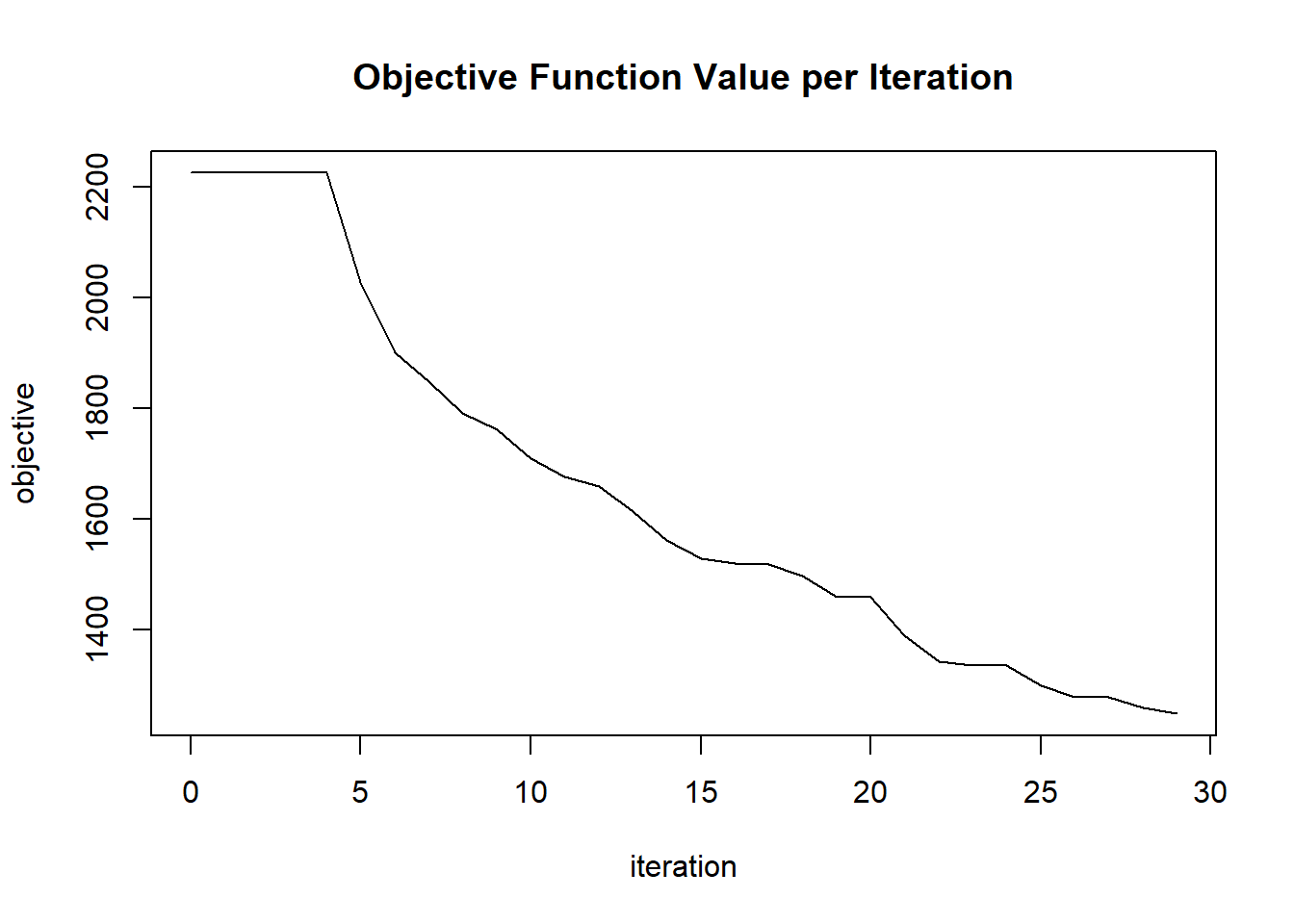

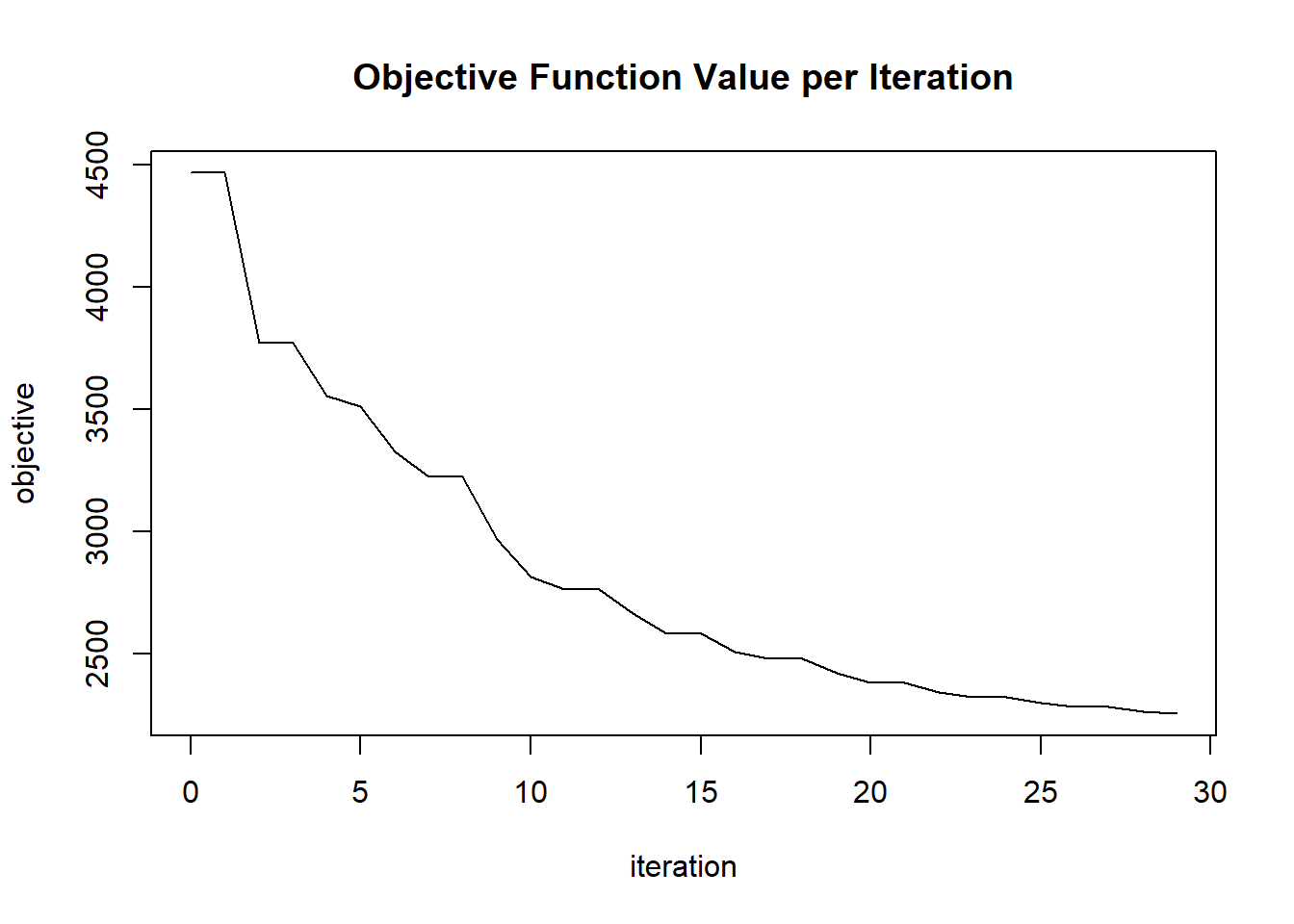

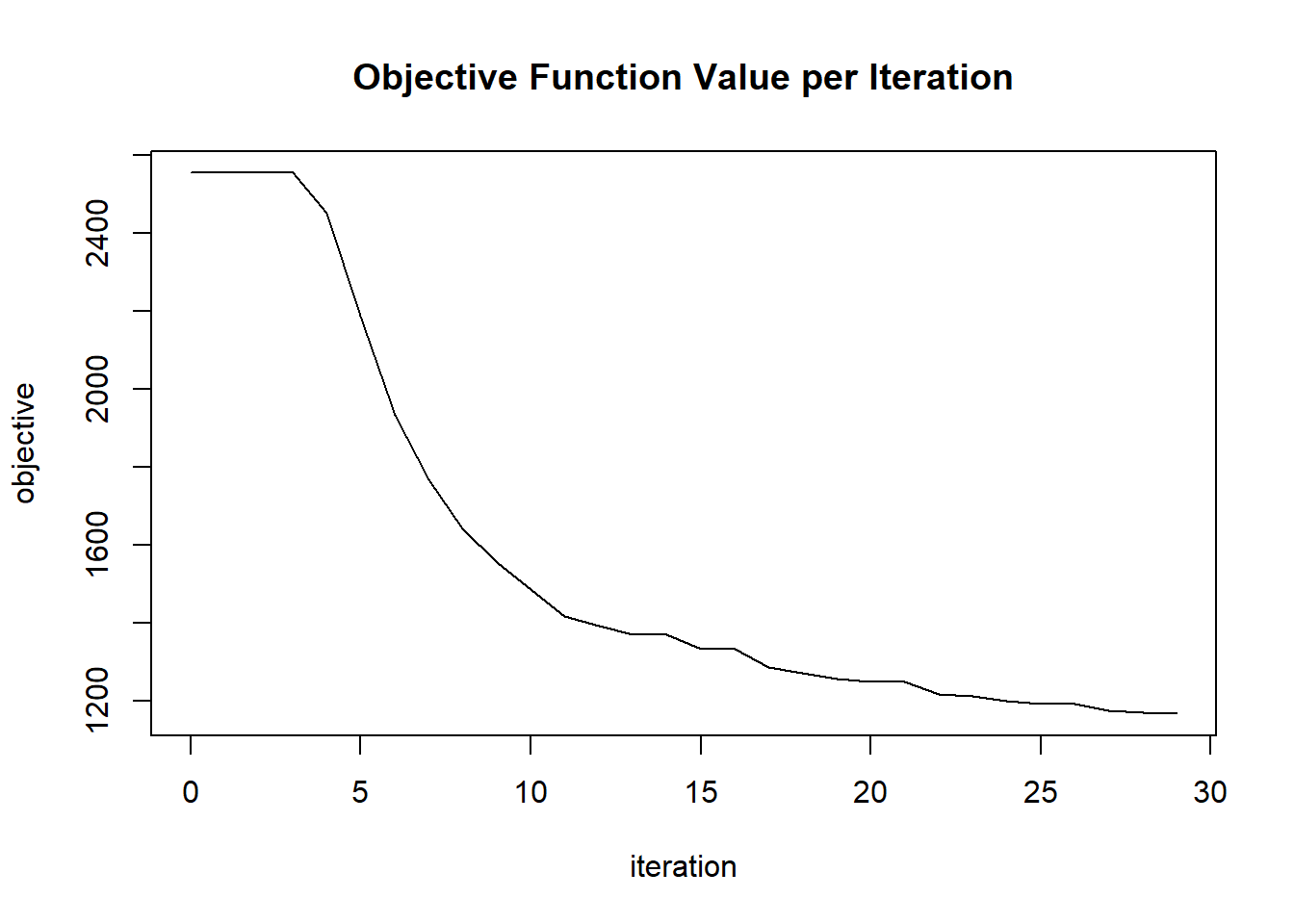

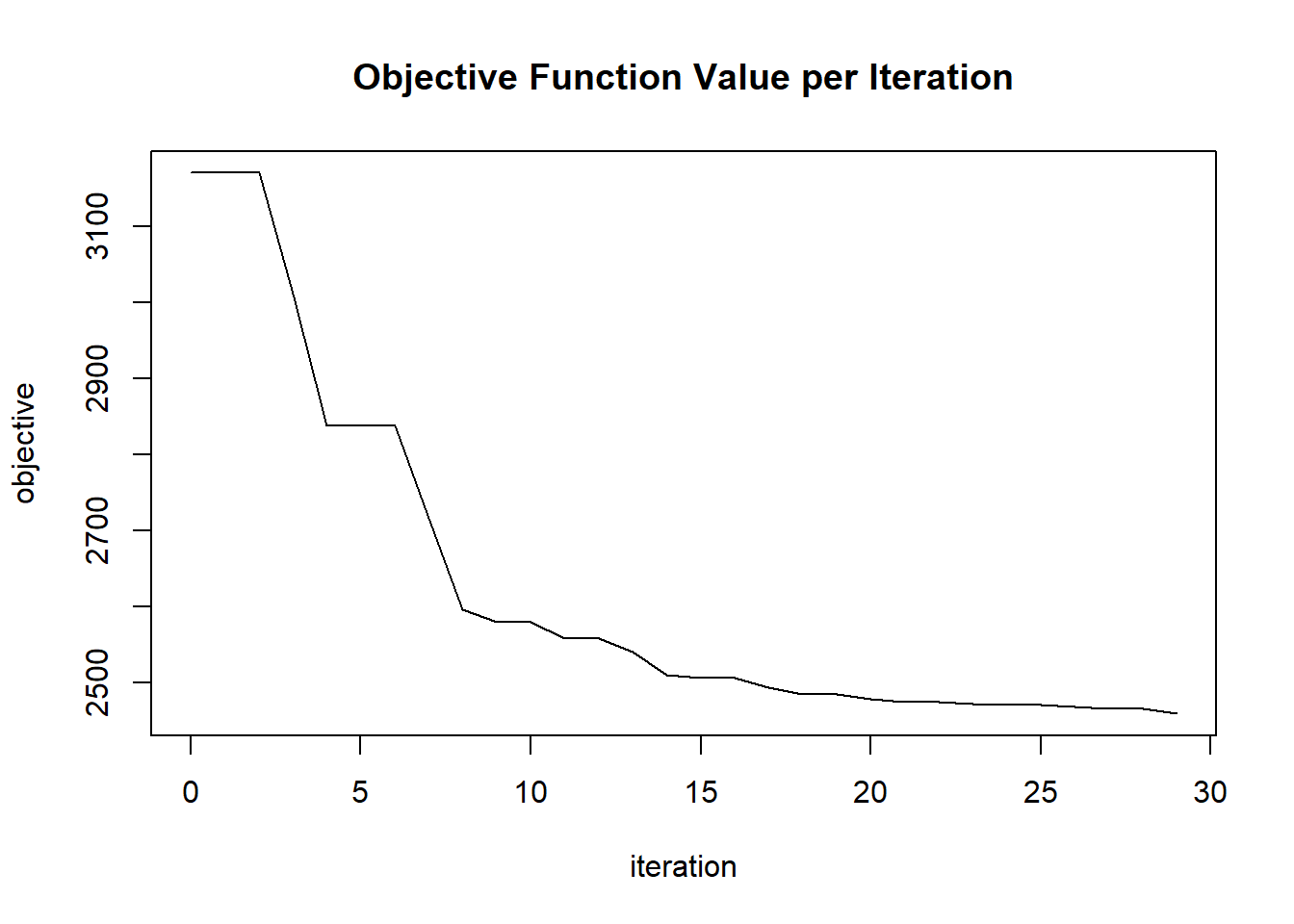

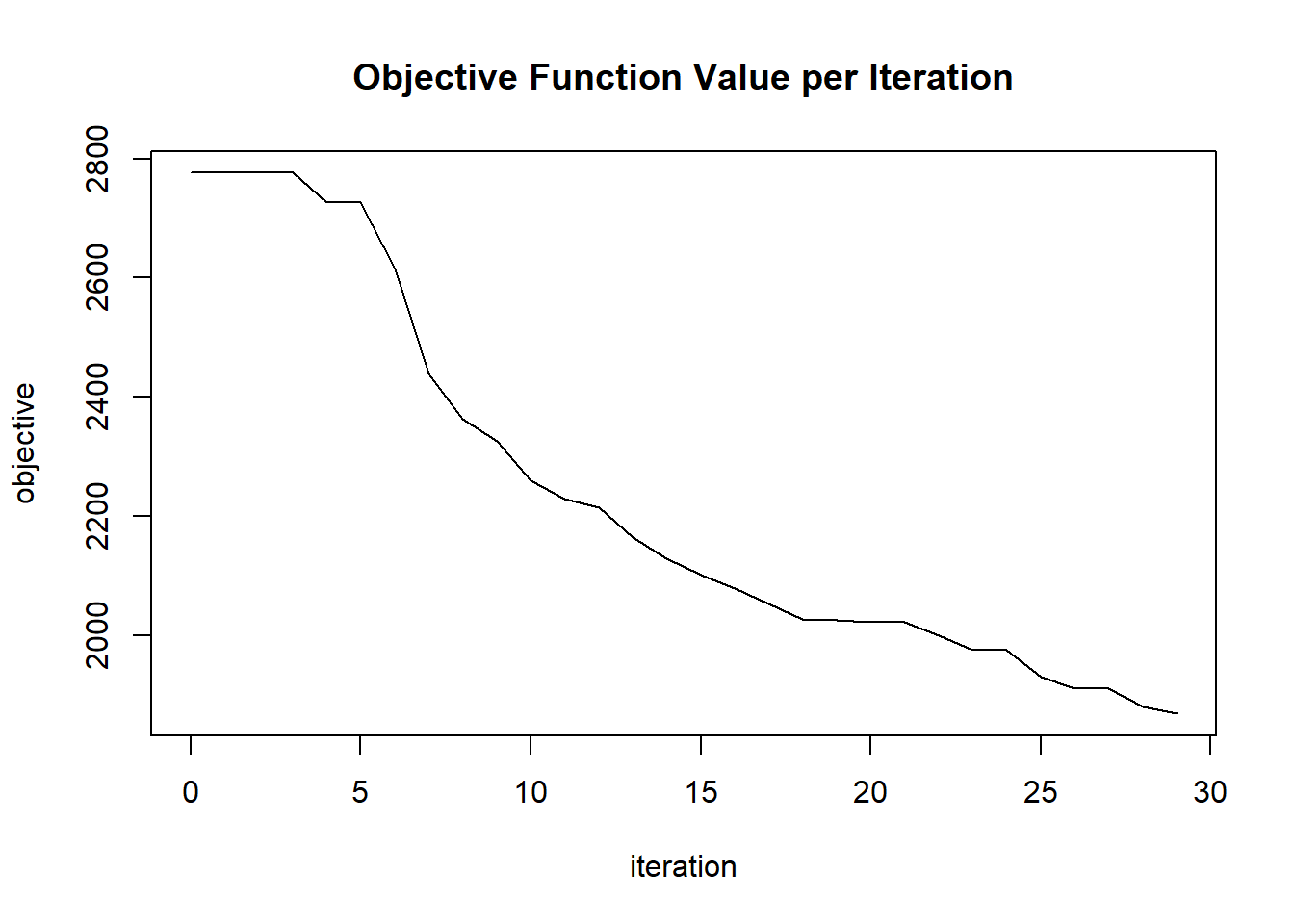

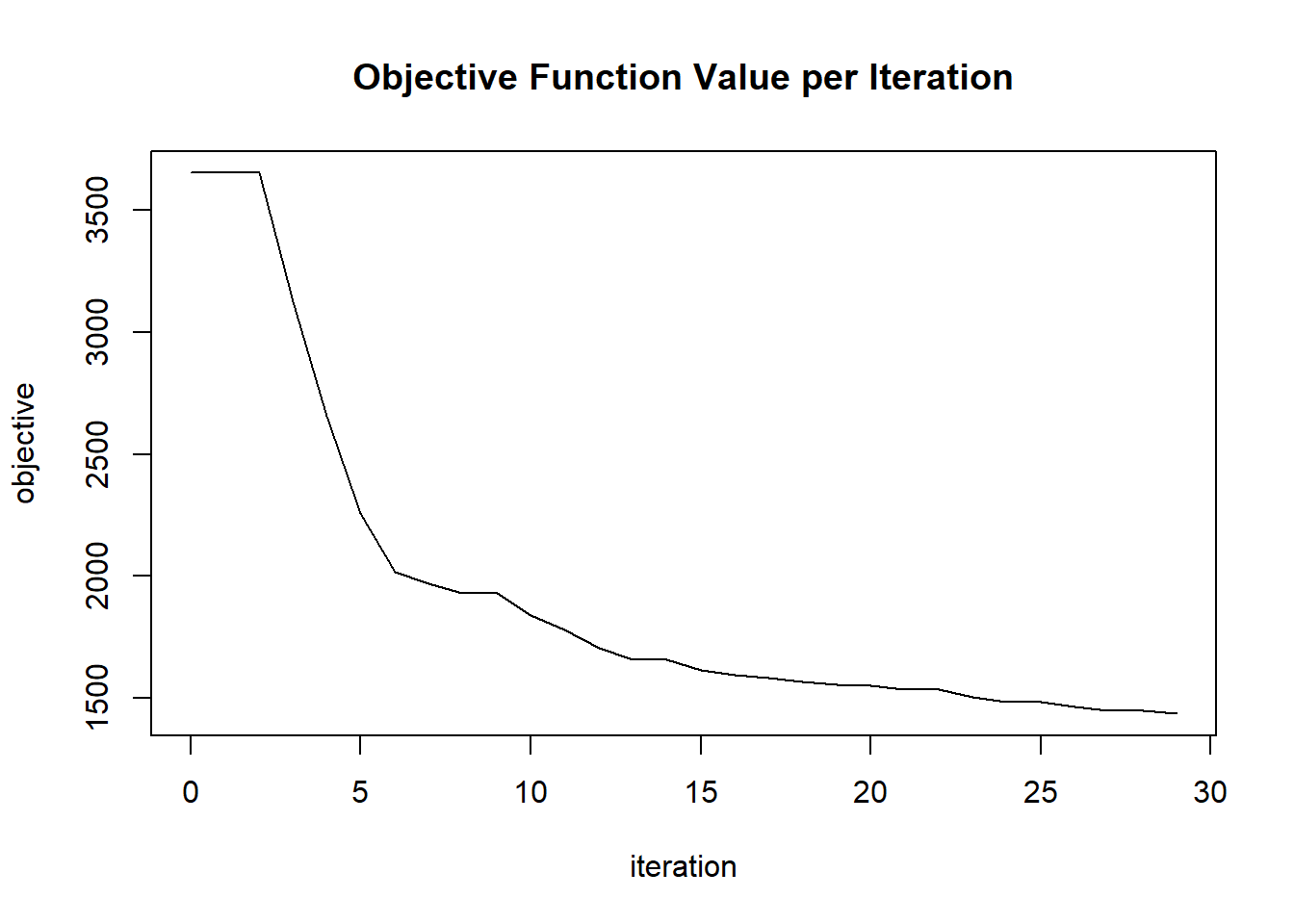

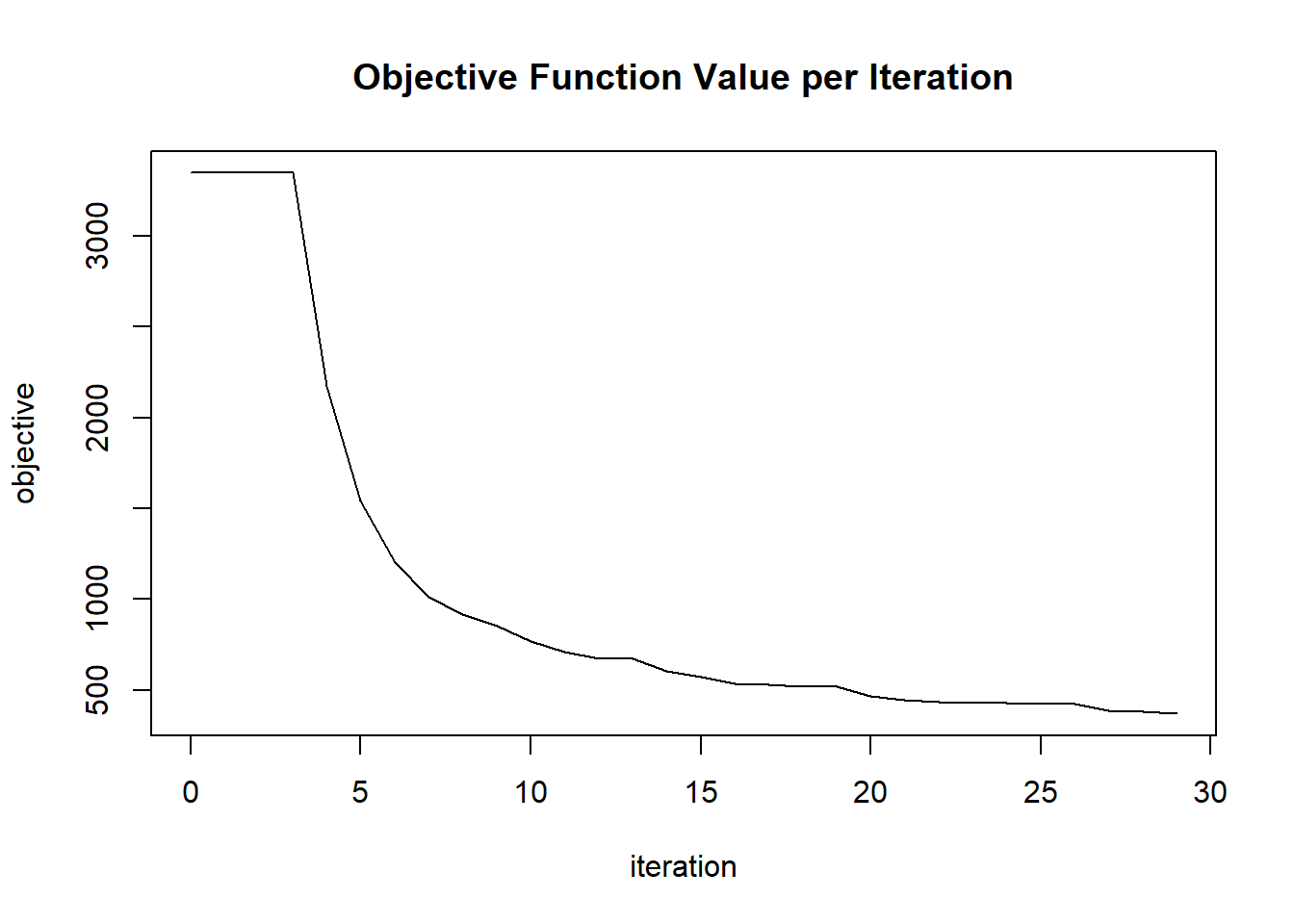

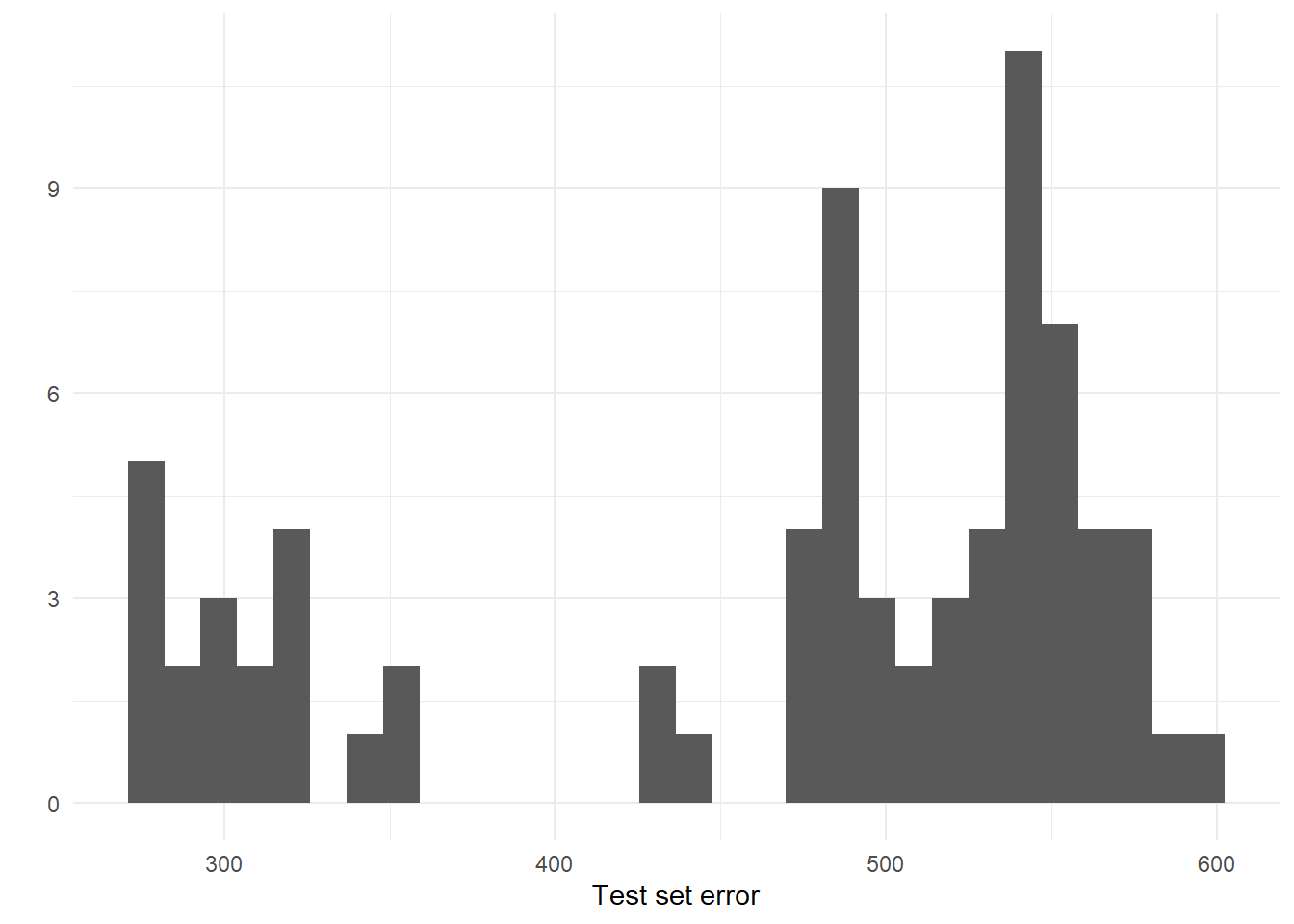

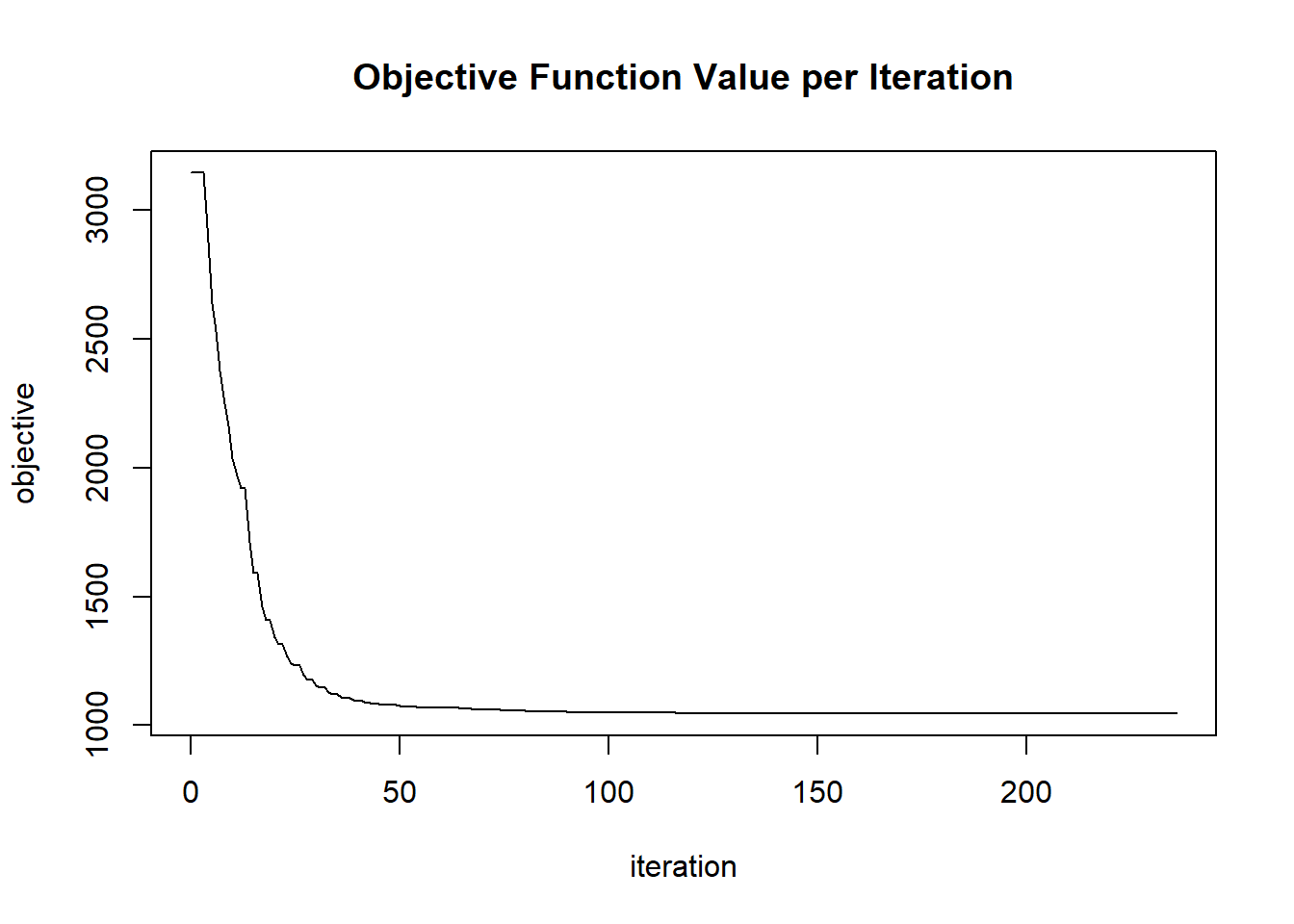

The results of this block are cached because they are slow to compute.

## [1] 75# Perform grid search - takes about 150 seconds.

system.time({

for (i in seq_len(nrow(params))) {

cat("Iteration", i, "of", nrow(params), "", paste0(round(i / nrow(params) * 100, 1), "%\n"))

print(params[i, ])

# Create model

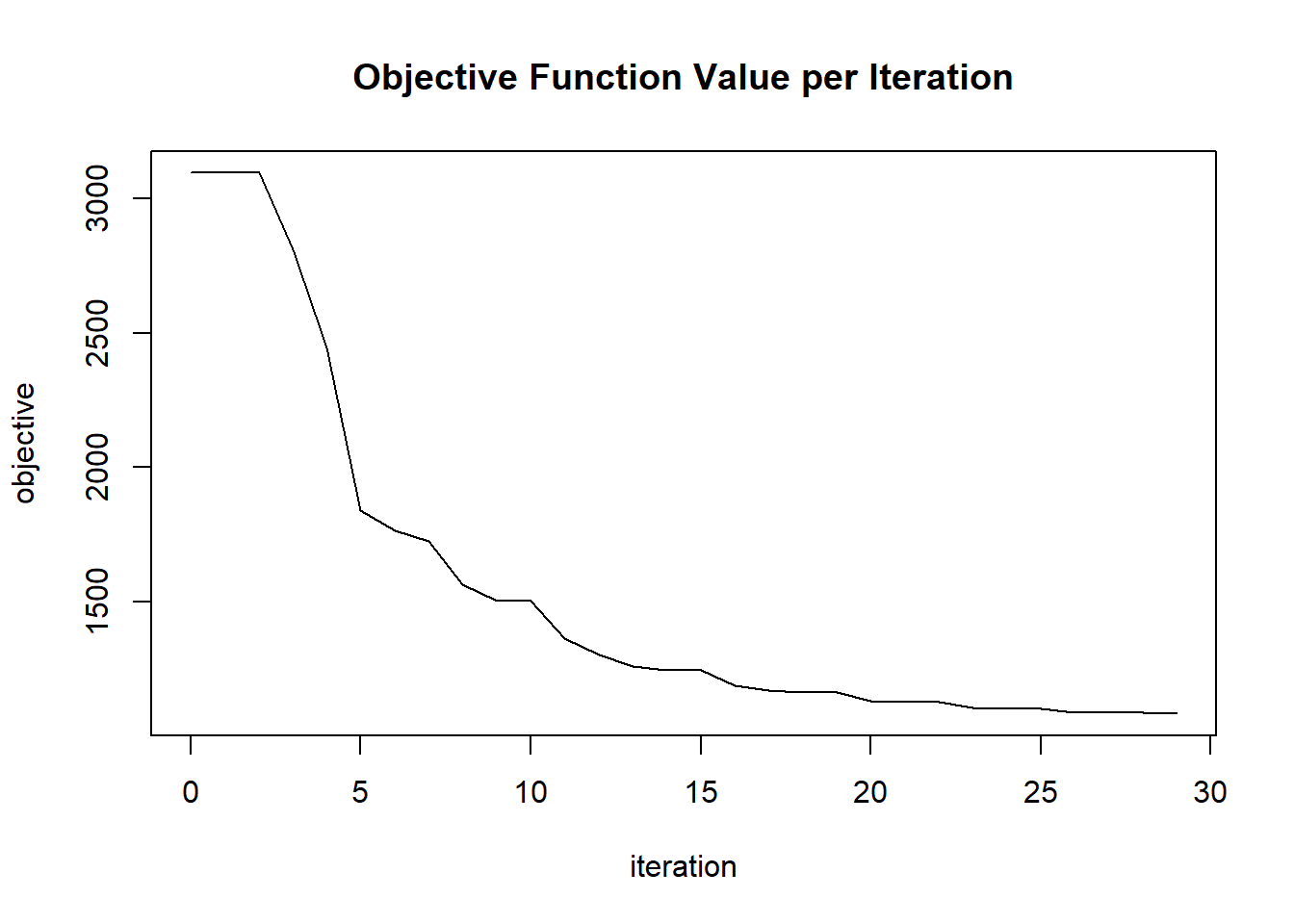

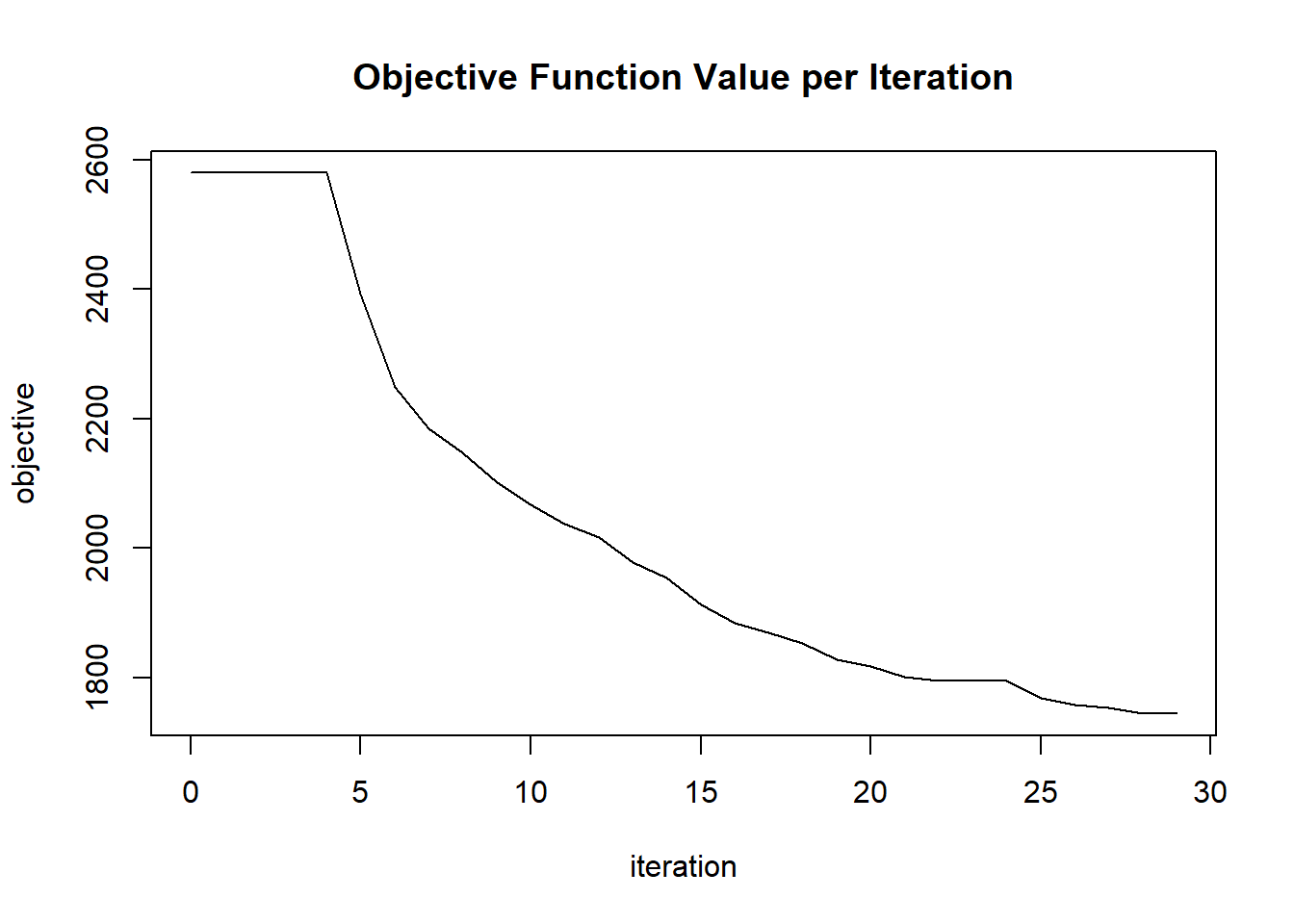

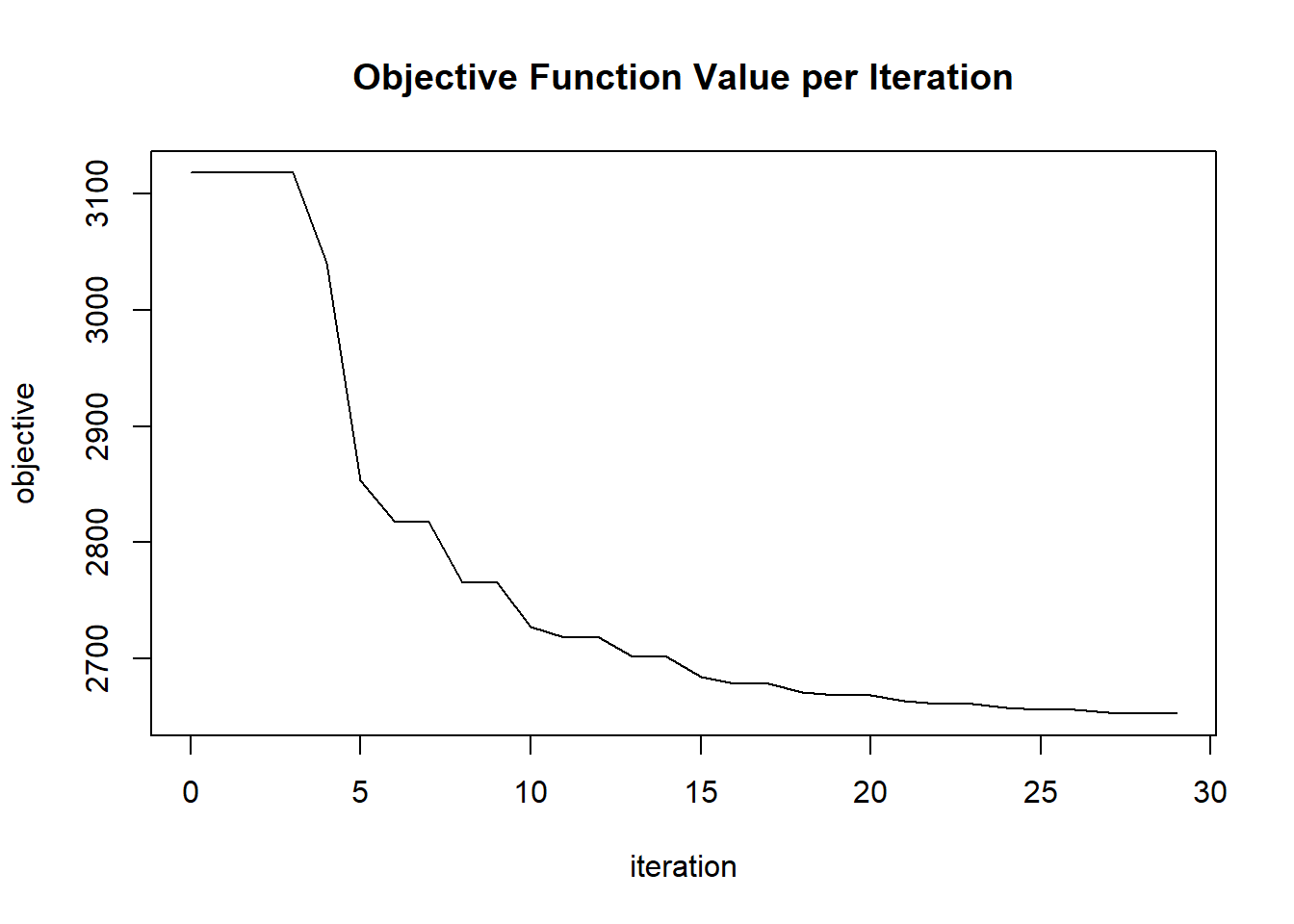

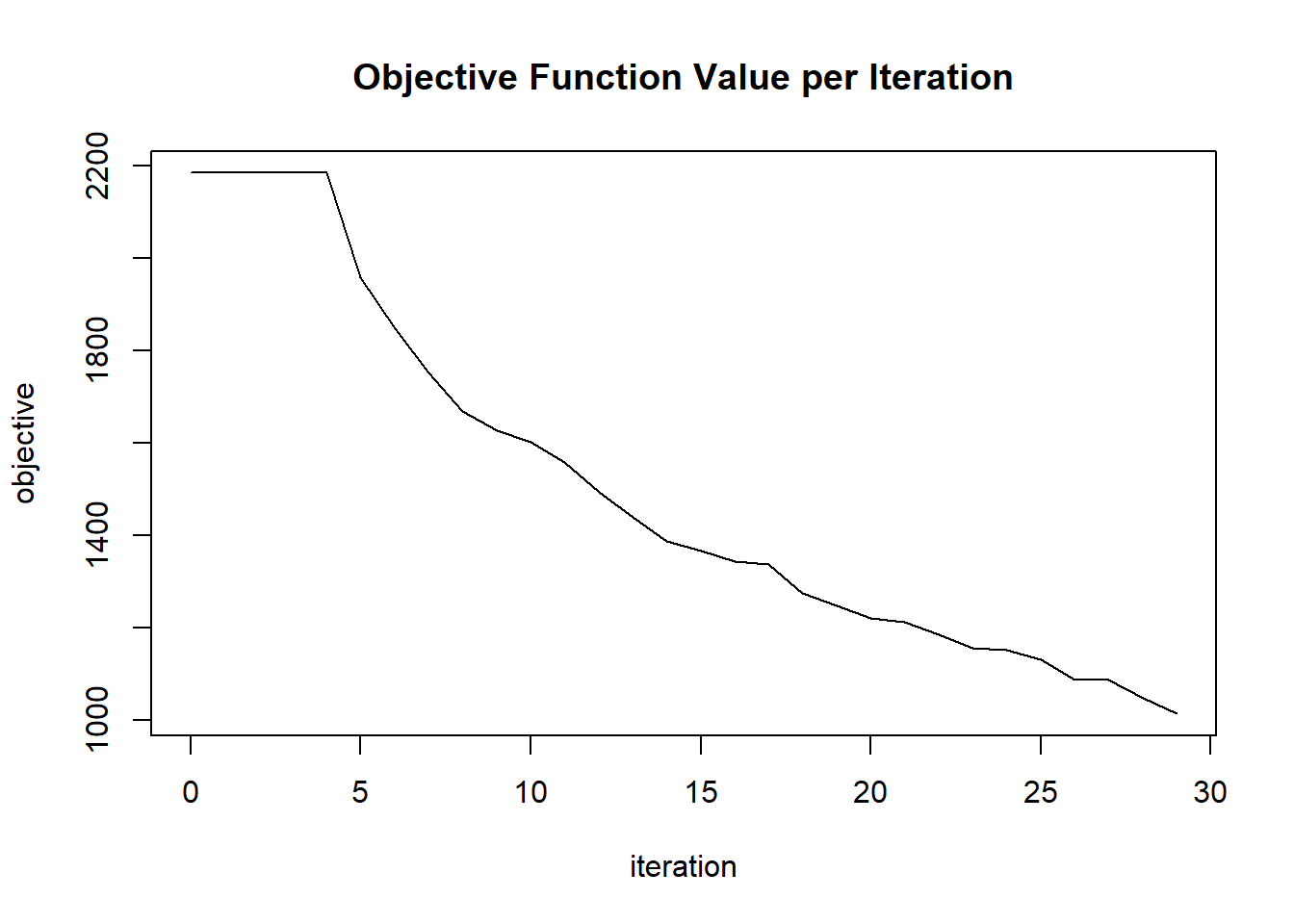

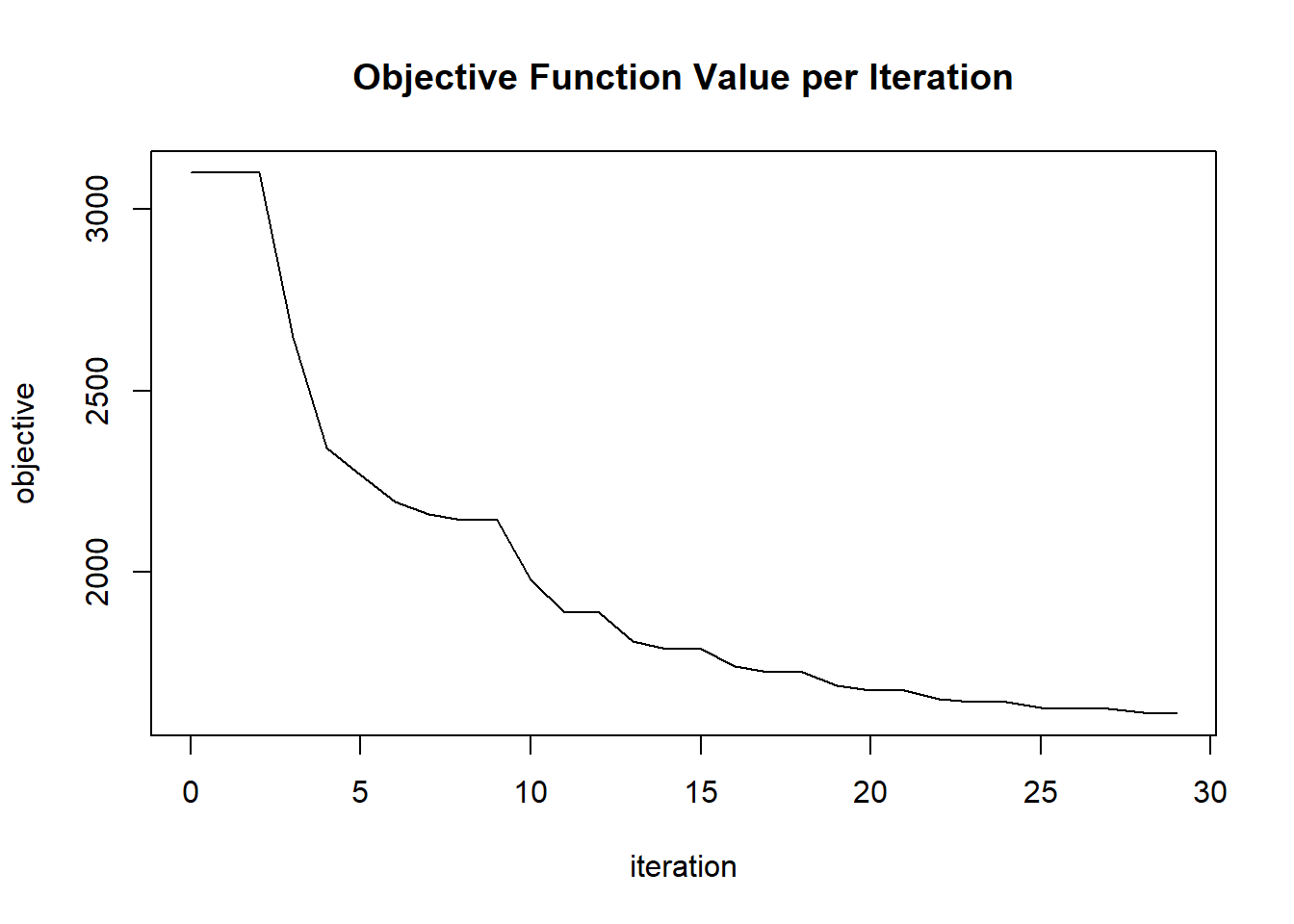

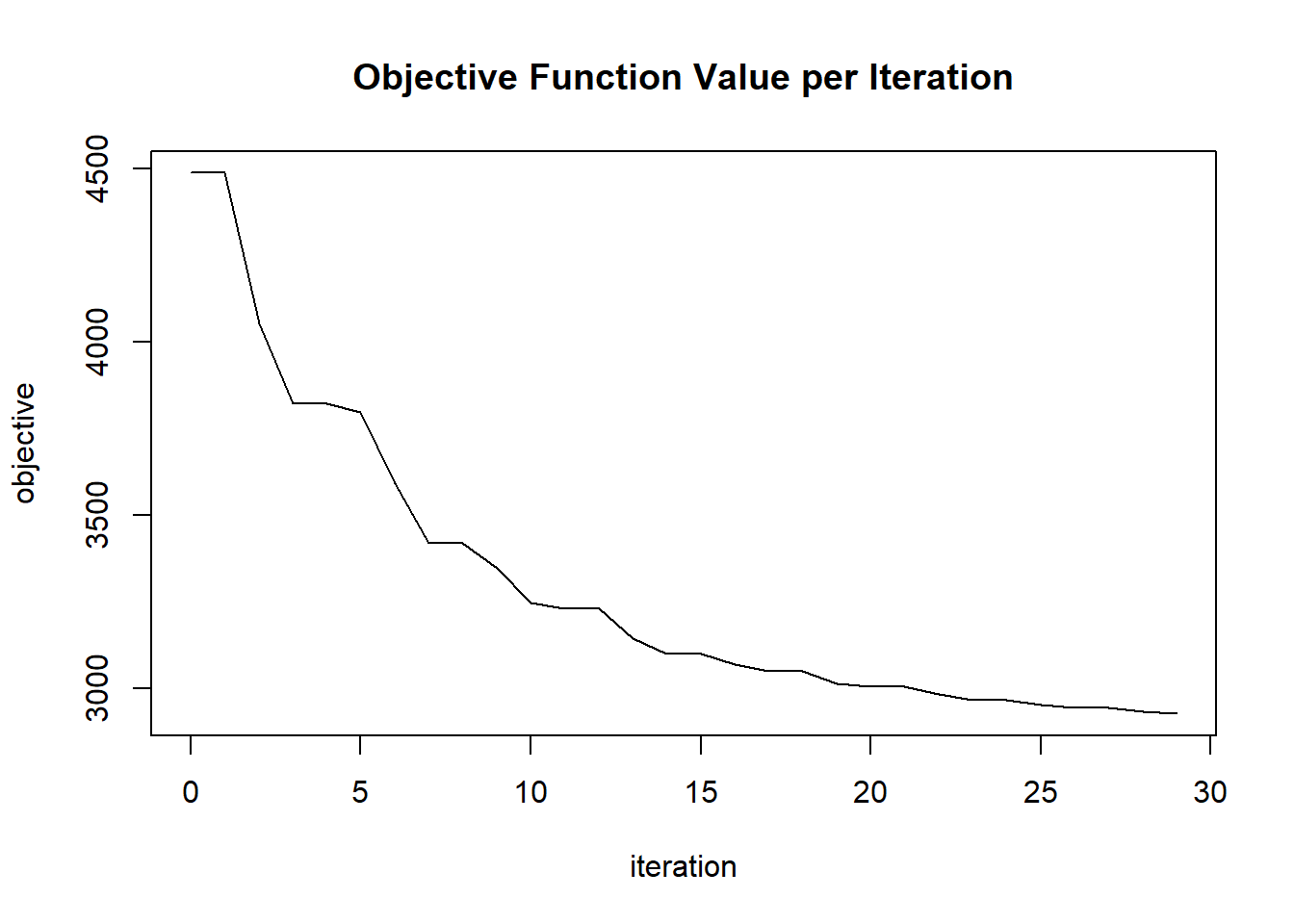

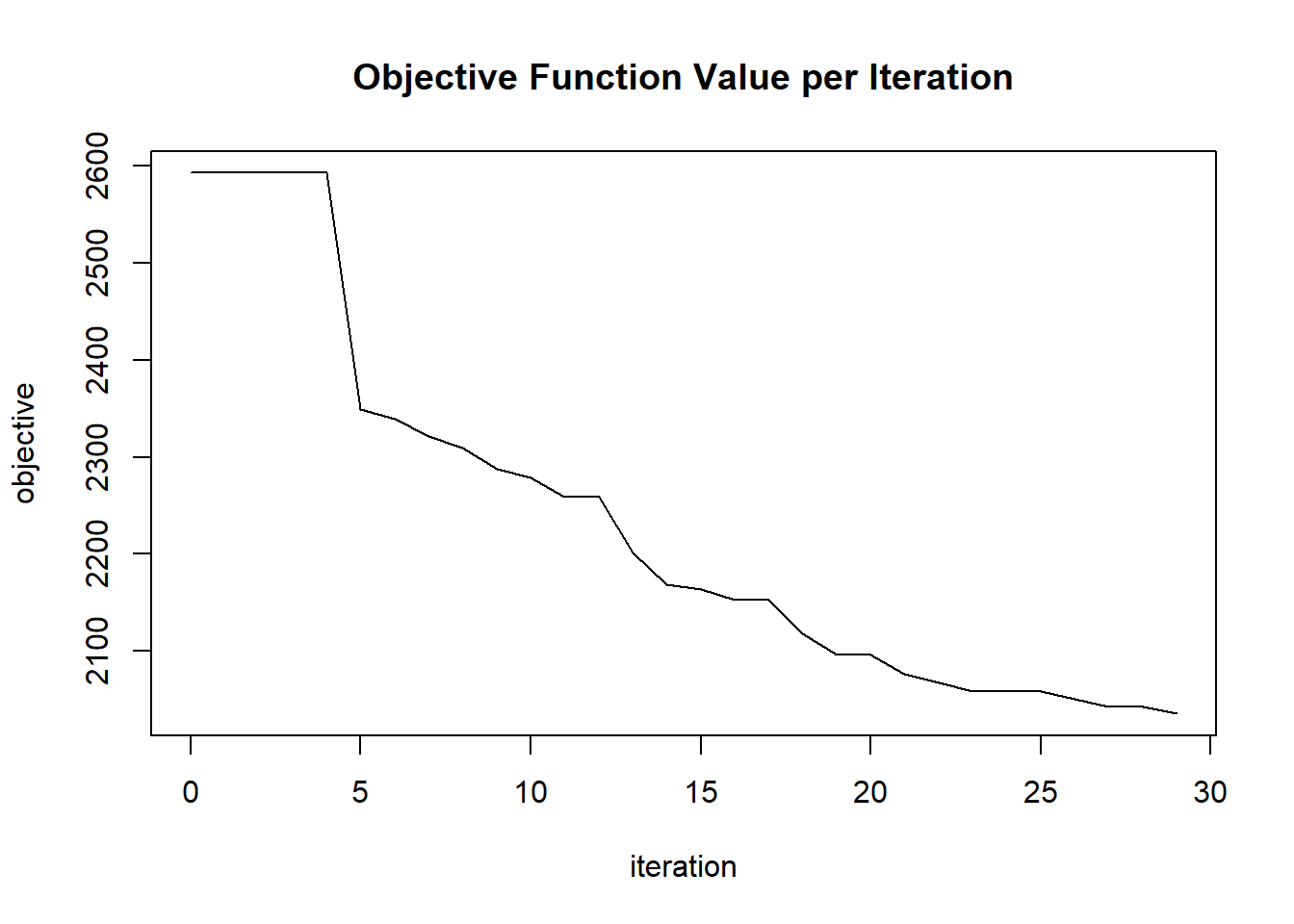

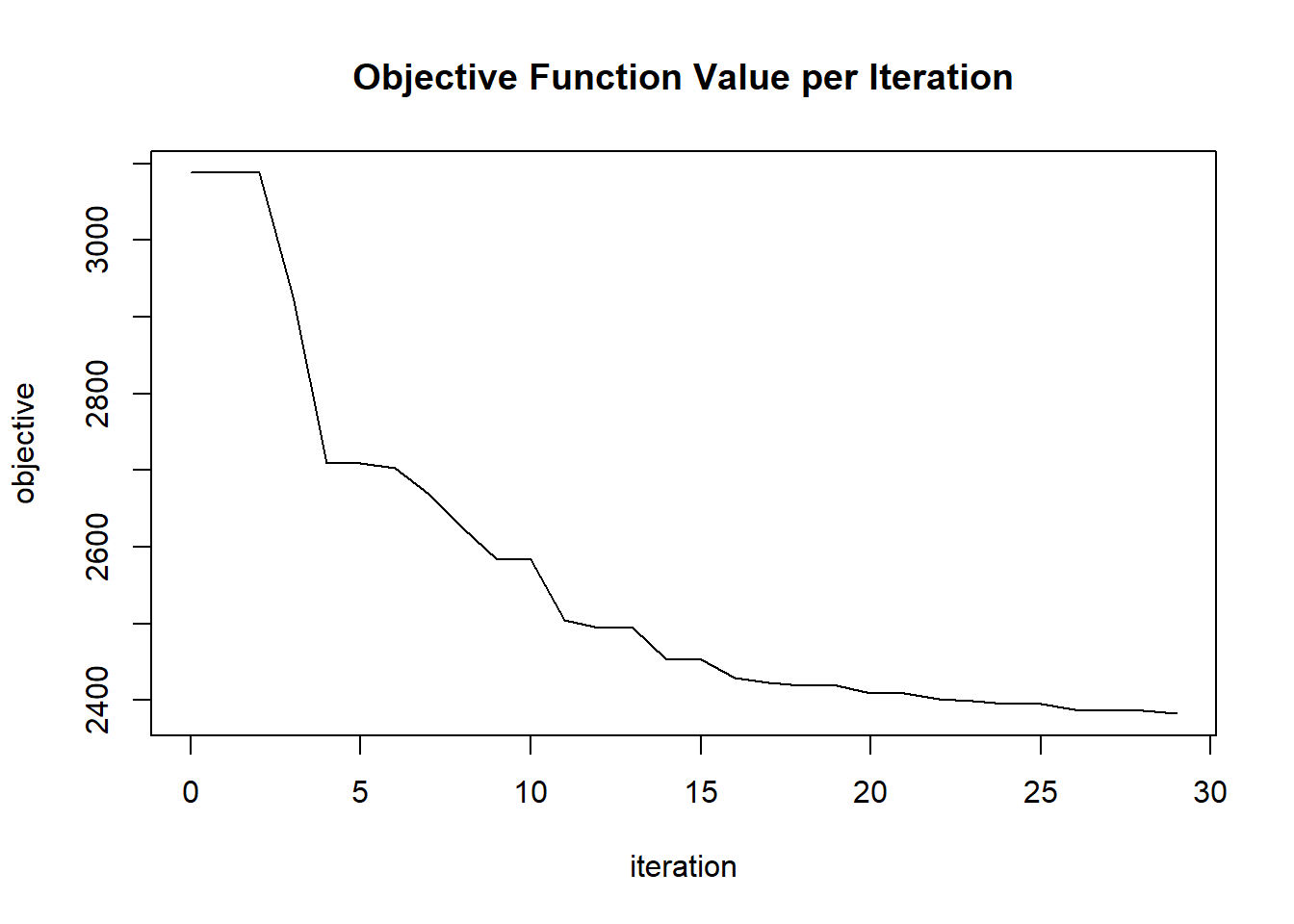

glrm_model = h2o::h2o.glrm(

training_frame = train,

# h2o requires that the validation frame have the same # of rows as the training data for some reason.

#validation_frame = valid,

k = params$k[i],

loss = "Quadratic",

regularization_x = params$regularization_x[i],

regularization_y = params$regularization_y[i],

gamma_x = params$gamma_x[i],

gamma_y = params$gamma_y[i],

transform = "STANDARDIZE",

# This is set artificially low so that it runs quickly during the tutorial.

max_iterations = 30,

# This is a more typical setting:

#max_iterations = 2000,

max_runtime_secs = 1000,

seed = 1,

loss_by_col_idx = losses$index,

loss_by_col = losses$loss)

summ_text = capture.output({ h2o::summary(glrm_model) })

glrm_sum[[i]] = summ_text

h2o::summary(glrm_model)

plot(glrm_model)

params$objective[i] = glrm_model@model$objective

# Predict on validation set and extract error

# Warning: this can throw java.lang.ArrayIndexOutOfBoundsException

try({

validate = h2o::h2o.performance(glrm_model, valid)

#print(validate@metrics)

glrm_metrics[[i]] = validate@metrics

params$error_num[i] = validate@metrics$numerr

params$error_cat[i] = validate@metrics$caterr

})

# Removing the model prevents the index error from occurring!

h2o::h2o.rm(glrm_model)

# Save after each iteration in case it crashes.

# This could go inside the try()

# params should be the first object.

save(params, glrm_metrics, glrm_sum,

file = "data/glrm-tuned-results.RData")

}

})## Iteration 1 of 75 1.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 233 5 L1 Quadratic 4 4 NA NA

## objective

## 233 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_1

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 3078.15172

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1548.359

## Misclassification Error (Categorical): 330

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:07 0.257 sec 0 1.05000 4771.95362

## 2 2020-06-20 06:42:07 0.272 sec 1 1.10250 4264.58709

## 3 2020-06-20 06:42:07 0.280 sec 2 0.73500 4264.58709

## 4 2020-06-20 06:42:07 0.288 sec 3 0.49000 4264.58709

## 5 2020-06-20 06:42:07 0.300 sec 4 0.51450 4002.73529

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:07 0.428 sec 24 0.05678 3101.76007

## 26 2020-06-20 06:42:07 0.434 sec 25 0.05962 3089.45461

## 27 2020-06-20 06:42:07 0.437 sec 26 0.06260 3088.97874

## 28 2020-06-20 06:42:07 0.441 sec 27 0.06573 3087.10244

## 29 2020-06-20 06:42:07 0.445 sec 28 0.04382 3087.10244

## 30 2020-06-20 06:42:07 0.450 sec 29 0.04601 3078.15172

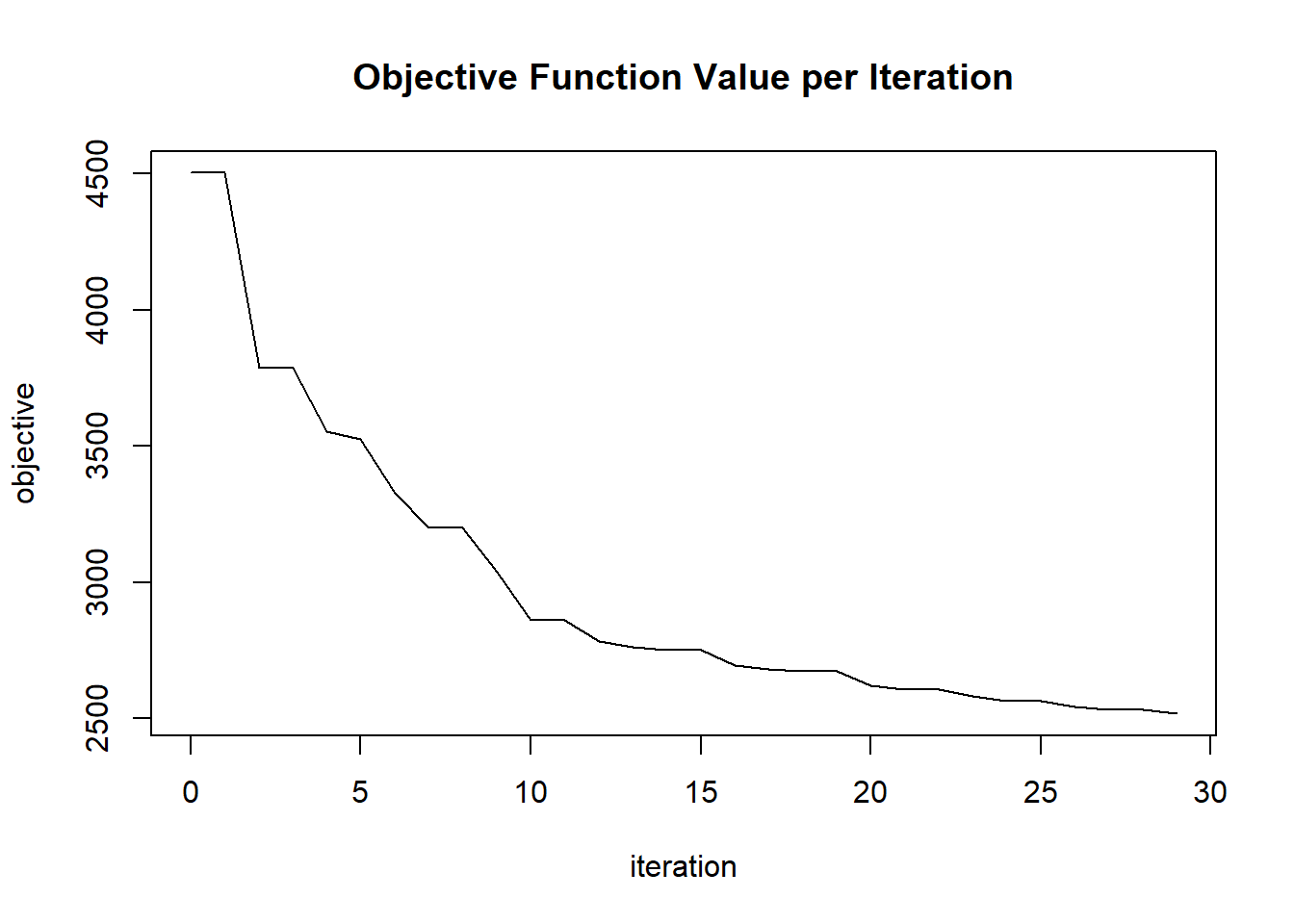

## Iteration 2 of 75 2.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 153 10 L1 Quadratic 4 1 NA NA

## objective

## 153 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_3

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 2517.74751

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1340.119

## Misclassification Error (Categorical): 173

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:08 0.038 sec 0 1.05000 4502.23608

## 2 2020-06-20 06:42:08 0.042 sec 1 0.70000 4502.23608

## 3 2020-06-20 06:42:08 0.047 sec 2 0.73500 3788.22992

## 4 2020-06-20 06:42:08 0.053 sec 3 0.49000 3788.22992

## 5 2020-06-20 06:42:08 0.058 sec 4 0.51450 3551.78004

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:09 0.179 sec 24 0.14085 2563.08937

## 26 2020-06-20 06:42:09 0.187 sec 25 0.09390 2563.08937

## 27 2020-06-20 06:42:09 0.192 sec 26 0.09860 2543.64513

## 28 2020-06-20 06:42:09 0.197 sec 27 0.10353 2531.74728

## 29 2020-06-20 06:42:09 0.202 sec 28 0.06902 2531.74728

## 30 2020-06-20 06:42:09 0.206 sec 29 0.07247 2517.74751

## Iteration 3 of 75 4%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 1 3 None None 0 0 NA NA

## objective

## 1 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_5

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.03451 1903.07151

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1298.219

## Misclassification Error (Categorical): 325

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:10 0.026 sec 0 1.05000 2446.80542

## 2 2020-06-20 06:42:10 0.033 sec 1 0.70000 2446.80542

## 3 2020-06-20 06:42:10 0.038 sec 2 0.46667 2446.80542

## 4 2020-06-20 06:42:10 0.046 sec 3 0.31111 2446.80542

## 5 2020-06-20 06:42:10 0.053 sec 4 0.15556 2446.80542

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:10 0.162 sec 24 0.04259 1911.14025

## 26 2020-06-20 06:42:10 0.166 sec 25 0.04472 1910.48527

## 27 2020-06-20 06:42:10 0.171 sec 26 0.04695 1908.46755

## 28 2020-06-20 06:42:10 0.176 sec 27 0.04930 1905.78622

## 29 2020-06-20 06:42:10 0.183 sec 28 0.03287 1905.78622

## 30 2020-06-20 06:42:10 0.187 sec 29 0.03451 1903.07151

## Iteration 4 of 75 5.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 148 3 Quadratic Quadratic 4 1 NA NA

## objective

## 148 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_7

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.02921 2405.49655

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1358.582

## Misclassification Error (Categorical): 355

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:12 0.026 sec 0 1.05000 2871.85433

## 2 2020-06-20 06:42:12 0.032 sec 1 0.70000 2871.85433

## 3 2020-06-20 06:42:12 0.039 sec 2 0.46667 2871.85433

## 4 2020-06-20 06:42:12 0.044 sec 3 0.31111 2871.85433

## 5 2020-06-20 06:42:12 0.049 sec 4 0.32667 2822.44503

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:12 0.150 sec 24 0.05678 2415.05649

## 26 2020-06-20 06:42:12 0.154 sec 25 0.03785 2415.05649

## 27 2020-06-20 06:42:12 0.160 sec 26 0.03975 2410.09312

## 28 2020-06-20 06:42:12 0.164 sec 27 0.04173 2408.33081

## 29 2020-06-20 06:42:12 0.167 sec 28 0.02782 2408.33081

## 30 2020-06-20 06:42:12 0.169 sec 29 0.02921 2405.49655

## Iteration 5 of 75 6.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 160 3 L1 L1 4 1 NA NA

## objective

## 160 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_9

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 2572.00653

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1452.309

## Misclassification Error (Categorical): 360

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:13 0.025 sec 0 1.05000 4147.94914

## 2 2020-06-20 06:42:13 0.027 sec 1 0.70000 4147.94914

## 3 2020-06-20 06:42:13 0.029 sec 2 0.46667 4147.94914

## 4 2020-06-20 06:42:13 0.031 sec 3 0.49000 3201.29998

## 5 2020-06-20 06:42:13 0.033 sec 4 0.51450 3066.89359

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:13 0.081 sec 24 0.05678 2599.59738

## 26 2020-06-20 06:42:13 0.084 sec 25 0.05962 2590.97615

## 27 2020-06-20 06:42:13 0.085 sec 26 0.06260 2582.01057

## 28 2020-06-20 06:42:13 0.087 sec 27 0.04173 2582.01057

## 29 2020-06-20 06:42:13 0.089 sec 28 0.04382 2575.75859

## 30 2020-06-20 06:42:13 0.090 sec 29 0.04601 2572.00653

## Iteration 6 of 75 8%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 62 5 L1 None 4 0 NA NA

## objective

## 62 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_11

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 2170.55916

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1366.474

## Misclassification Error (Categorical): 243

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:15 0.056 sec 0 1.05000 4299.99970

## 2 2020-06-20 06:42:15 0.060 sec 1 0.70000 4299.99970

## 3 2020-06-20 06:42:15 0.063 sec 2 0.73500 4051.36990

## 4 2020-06-20 06:42:15 0.066 sec 3 0.77175 3736.02992

## 5 2020-06-20 06:42:15 0.068 sec 4 0.51450 3736.02992

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:15 0.119 sec 24 0.05678 2214.74930

## 26 2020-06-20 06:42:15 0.122 sec 25 0.05962 2200.28783

## 27 2020-06-20 06:42:15 0.124 sec 26 0.06260 2190.57979

## 28 2020-06-20 06:42:15 0.127 sec 27 0.04173 2190.57979

## 29 2020-06-20 06:42:15 0.130 sec 28 0.04382 2177.78252

## 30 2020-06-20 06:42:15 0.132 sec 29 0.04601 2170.55916

## Iteration 7 of 75 9.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 212 5 Quadratic L1 1 4 NA NA

## objective

## 212 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_13

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.17977 1737.18758

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1439.787

## Misclassification Error (Categorical): 126

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:17 0.049 sec 0 1.05000 2940.72016

## 2 2020-06-20 06:42:17 0.053 sec 1 0.70000 2940.72016

## 3 2020-06-20 06:42:17 0.056 sec 2 0.46667 2940.72016

## 4 2020-06-20 06:42:17 0.059 sec 3 0.31111 2940.72016

## 5 2020-06-20 06:42:17 0.064 sec 4 0.32667 2817.45159

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:17 0.117 sec 24 0.22184 1768.41663

## 26 2020-06-20 06:42:17 0.119 sec 25 0.23294 1765.68228

## 27 2020-06-20 06:42:17 0.122 sec 26 0.24458 1765.04244

## 28 2020-06-20 06:42:17 0.125 sec 27 0.25681 1763.97881

## 29 2020-06-20 06:42:17 0.129 sec 28 0.17121 1763.97881

## 30 2020-06-20 06:42:17 0.132 sec 29 0.17977 1737.18758

## Iteration 8 of 75 10.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 183 10 None L1 0 4 NA NA

## objective

## 183 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_15

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.17977 970.47294

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 761.7432

## Misclassification Error (Categorical): 55

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:18 0.035 sec 0 1.05000 4057.18290

## 2 2020-06-20 06:42:18 0.040 sec 1 0.70000 4057.18290

## 3 2020-06-20 06:42:18 0.046 sec 2 0.46667 4057.18290

## 4 2020-06-20 06:42:18 0.050 sec 3 0.49000 3545.77830

## 5 2020-06-20 06:42:18 0.053 sec 4 0.51450 3118.80625

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:18 0.164 sec 24 0.22184 1028.03950

## 26 2020-06-20 06:42:18 0.167 sec 25 0.23294 1016.89414

## 27 2020-06-20 06:42:18 0.172 sec 26 0.24458 1011.43623

## 28 2020-06-20 06:42:18 0.179 sec 27 0.16306 1011.43623

## 29 2020-06-20 06:42:18 0.184 sec 28 0.17121 983.41864

## 30 2020-06-20 06:42:18 0.189 sec 29 0.17977 970.47294

## Iteration 9 of 75 12%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 102 10 None L1 0 1 NA NA

## objective

## 102 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_17

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.28314 647.60549

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 551.2013

## Misclassification Error (Categorical): 25

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:20 0.027 sec 0 1.05000 3568.49501

## 2 2020-06-20 06:42:20 0.031 sec 1 0.70000 3568.49501

## 3 2020-06-20 06:42:20 0.034 sec 2 0.46667 3568.49501

## 4 2020-06-20 06:42:20 0.037 sec 3 0.31111 3568.49501

## 5 2020-06-20 06:42:20 0.041 sec 4 0.32667 2320.28803

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:20 0.110 sec 24 0.34941 703.21917

## 26 2020-06-20 06:42:20 0.113 sec 25 0.23294 703.21917

## 27 2020-06-20 06:42:20 0.117 sec 26 0.24458 673.13521

## 28 2020-06-20 06:42:20 0.120 sec 27 0.25681 660.26034

## 29 2020-06-20 06:42:20 0.124 sec 28 0.26965 650.69811

## 30 2020-06-20 06:42:20 0.127 sec 29 0.28314 647.60549

## Iteration 10 of 75 13.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 204 10 Quadratic Quadratic 1 4 NA NA

## objective

## 204 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_19

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 1608.29066

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 886.3189

## Misclassification Error (Categorical): 97

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:22 0.035 sec 0 1.05000 3495.23576

## 2 2020-06-20 06:42:22 0.039 sec 1 0.70000 3495.23576

## 3 2020-06-20 06:42:22 0.043 sec 2 0.46667 3495.23576

## 4 2020-06-20 06:42:22 0.048 sec 3 0.49000 2652.37360

## 5 2020-06-20 06:42:22 0.051 sec 4 0.51450 2480.93892

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:22 0.126 sec 24 0.14085 1634.53029

## 26 2020-06-20 06:42:22 0.131 sec 25 0.09390 1634.53029

## 27 2020-06-20 06:42:22 0.135 sec 26 0.09860 1618.58550

## 28 2020-06-20 06:42:22 0.139 sec 27 0.10353 1618.39309

## 29 2020-06-20 06:42:22 0.144 sec 28 0.06902 1618.39309

## 30 2020-06-20 06:42:22 0.149 sec 29 0.07247 1608.29066

## Iteration 11 of 75 14.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 34 3 L1 None 1 0 NA NA

## objective

## 34 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_21

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.03451 2077.69944

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1326.069

## Misclassification Error (Categorical): 357

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:23 0.016 sec 0 1.05000 2393.57535

## 2 2020-06-20 06:42:23 0.018 sec 1 0.70000 2393.57535

## 3 2020-06-20 06:42:23 0.019 sec 2 0.46667 2393.57535

## 4 2020-06-20 06:42:23 0.021 sec 3 0.31111 2393.57535

## 5 2020-06-20 06:42:23 0.023 sec 4 0.15556 2393.57535

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:23 0.056 sec 24 0.04259 2085.11774

## 26 2020-06-20 06:42:23 0.057 sec 25 0.04472 2084.85913

## 27 2020-06-20 06:42:23 0.059 sec 26 0.02981 2084.85913

## 28 2020-06-20 06:42:23 0.061 sec 27 0.03130 2080.09261

## 29 2020-06-20 06:42:23 0.062 sec 28 0.03287 2077.80021

## 30 2020-06-20 06:42:23 0.063 sec 29 0.03451 2077.69944

## Iteration 12 of 75 16%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 36 10 L1 None 1 0 NA NA

## objective

## 36 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_23

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.11414 993.93860

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 585.2555

## Misclassification Error (Categorical): 52

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:25 0.035 sec 0 1.05000 2835.49005

## 2 2020-06-20 06:42:25 0.039 sec 1 0.70000 2835.49005

## 3 2020-06-20 06:42:25 0.047 sec 2 0.46667 2835.49005

## 4 2020-06-20 06:42:25 0.052 sec 3 0.31111 2835.49005

## 5 2020-06-20 06:42:25 0.055 sec 4 0.32667 2247.03667

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:25 0.146 sec 24 0.22184 1043.90853

## 26 2020-06-20 06:42:25 0.151 sec 25 0.14790 1043.90853

## 27 2020-06-20 06:42:25 0.155 sec 26 0.15529 1019.55837

## 28 2020-06-20 06:42:25 0.159 sec 27 0.16306 1012.07422

## 29 2020-06-20 06:42:25 0.164 sec 28 0.10870 1012.07422

## 30 2020-06-20 06:42:25 0.167 sec 29 0.11414 993.93860

## Iteration 13 of 75 17.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 63 10 L1 None 4 0 NA NA

## objective

## 63 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_25

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 1959.41313

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1108.732

## Misclassification Error (Categorical): 140

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:26 0.025 sec 0 1.05000 4240.23364

## 2 2020-06-20 06:42:26 0.028 sec 1 0.70000 4240.23364

## 3 2020-06-20 06:42:26 0.031 sec 2 0.73500 3769.95950

## 4 2020-06-20 06:42:26 0.035 sec 3 0.49000 3769.95950

## 5 2020-06-20 06:42:26 0.038 sec 4 0.51450 3278.63616

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:26 0.104 sec 24 0.08943 2023.27074

## 26 2020-06-20 06:42:26 0.106 sec 25 0.09390 1999.13999

## 27 2020-06-20 06:42:26 0.109 sec 26 0.06260 1999.13999

## 28 2020-06-20 06:42:26 0.113 sec 27 0.06573 1975.68901

## 29 2020-06-20 06:42:26 0.117 sec 28 0.06902 1960.73931

## 30 2020-06-20 06:42:26 0.121 sec 29 0.07247 1959.41313

## Iteration 14 of 75 18.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 232 3 L1 Quadratic 4 4 NA NA

## objective

## 232 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_27

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.01855 3059.37324

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1629.755

## Misclassification Error (Categorical): 359

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:28 0.014 sec 0 1.05000 4600.37645

## 2 2020-06-20 06:42:28 0.015 sec 1 0.70000 4600.37645

## 3 2020-06-20 06:42:28 0.017 sec 2 0.73500 3735.98649

## 4 2020-06-20 06:42:28 0.018 sec 3 0.49000 3735.98649

## 5 2020-06-20 06:42:28 0.020 sec 4 0.51450 3573.62975

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:28 0.050 sec 24 0.05678 3071.61341

## 26 2020-06-20 06:42:28 0.051 sec 25 0.03785 3071.61341

## 27 2020-06-20 06:42:28 0.052 sec 26 0.03975 3064.04157

## 28 2020-06-20 06:42:28 0.053 sec 27 0.02650 3064.04157

## 29 2020-06-20 06:42:28 0.055 sec 28 0.02782 3059.37324

## 30 2020-06-20 06:42:28 0.056 sec 29 0.01855 3059.37324

## Iteration 15 of 75 20%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 151 3 L1 Quadratic 4 1 NA NA

## objective

## 151 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_29

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.02921 2719.04642

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1493.146

## Misclassification Error (Categorical): 360

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:29 0.011 sec 0 1.05000 4241.79446

## 2 2020-06-20 06:42:29 0.013 sec 1 0.70000 4241.79446

## 3 2020-06-20 06:42:29 0.014 sec 2 0.46667 4241.79446

## 4 2020-06-20 06:42:29 0.015 sec 3 0.49000 3172.19724

## 5 2020-06-20 06:42:29 0.018 sec 4 0.51450 3119.83804

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:29 0.045 sec 24 0.05678 2732.56924

## 26 2020-06-20 06:42:29 0.046 sec 25 0.05962 2728.80539

## 27 2020-06-20 06:42:29 0.047 sec 26 0.03975 2728.80539

## 28 2020-06-20 06:42:29 0.048 sec 27 0.04173 2723.86288

## 29 2020-06-20 06:42:29 0.049 sec 28 0.04382 2719.04642

## 30 2020-06-20 06:42:29 0.051 sec 29 0.02921 2719.04642

## Iteration 16 of 75 21.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 158 5 Quadratic L1 4 1 NA NA

## objective

## 158 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_31

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 1744.85536

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1309.328

## Misclassification Error (Categorical): 180

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:31 0.018 sec 0 1.05000 2859.22655

## 2 2020-06-20 06:42:31 0.021 sec 1 0.70000 2859.22655

## 3 2020-06-20 06:42:31 0.024 sec 2 0.46667 2859.22655

## 4 2020-06-20 06:42:31 0.026 sec 3 0.31111 2859.22655

## 5 2020-06-20 06:42:31 0.029 sec 4 0.32667 2646.37972

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:31 0.078 sec 24 0.05678 1774.53466

## 26 2020-06-20 06:42:31 0.080 sec 25 0.05962 1763.00949

## 27 2020-06-20 06:42:31 0.082 sec 26 0.06260 1757.75766

## 28 2020-06-20 06:42:31 0.084 sec 27 0.06573 1757.09369

## 29 2020-06-20 06:42:31 0.086 sec 28 0.04382 1757.09369

## 30 2020-06-20 06:42:31 0.088 sec 29 0.04601 1744.85536

## Iteration 17 of 75 22.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 124 3 L1 Quadratic 1 1 NA NA

## objective

## 124 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_33

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.08560 2314.60930

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1343.625

## Misclassification Error (Categorical): 353

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:33 0.013 sec 0 1.05000 2699.00728

## 2 2020-06-20 06:42:33 0.015 sec 1 0.70000 2699.00728

## 3 2020-06-20 06:42:33 0.017 sec 2 0.46667 2699.00728

## 4 2020-06-20 06:42:33 0.019 sec 3 0.31111 2699.00728

## 5 2020-06-20 06:42:33 0.021 sec 4 0.15556 2699.00728

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:33 0.053 sec 24 0.06707 2325.07536

## 26 2020-06-20 06:42:33 0.055 sec 25 0.07043 2321.23030

## 27 2020-06-20 06:42:33 0.056 sec 26 0.07395 2319.25747

## 28 2020-06-20 06:42:33 0.057 sec 27 0.07765 2317.65464

## 29 2020-06-20 06:42:33 0.059 sec 28 0.08153 2316.28733

## 30 2020-06-20 06:42:33 0.060 sec 29 0.08560 2314.60930

## Iteration 18 of 75 24%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 172 3 None Quadratic 0 4 NA NA

## objective

## 172 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_35

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 2098.79212

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1359.933

## Misclassification Error (Categorical): 339

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:34 0.011 sec 0 1.05000 3134.76905

## 2 2020-06-20 06:42:34 0.013 sec 1 0.70000 3134.76905

## 3 2020-06-20 06:42:34 0.015 sec 2 0.73500 3053.93990

## 4 2020-06-20 06:42:34 0.016 sec 3 0.49000 3053.93990

## 5 2020-06-20 06:42:34 0.018 sec 4 0.51450 2723.05849

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:34 0.050 sec 24 0.05678 2115.19723

## 26 2020-06-20 06:42:34 0.051 sec 25 0.05962 2106.47968

## 27 2020-06-20 06:42:34 0.053 sec 26 0.03975 2106.47968

## 28 2020-06-20 06:42:34 0.054 sec 27 0.04173 2102.54604

## 29 2020-06-20 06:42:34 0.056 sec 28 0.04382 2099.05102

## 30 2020-06-20 06:42:34 0.057 sec 29 0.04601 2098.79212

## Iteration 19 of 75 25.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 214 3 L1 L1 1 4 NA NA

## objective

## 214 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_37

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.13483 2559.79131

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1377.455

## Misclassification Error (Categorical): 345

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:36 0.011 sec 0 1.05000 2924.37776

## 2 2020-06-20 06:42:36 0.012 sec 1 0.70000 2924.37776

## 3 2020-06-20 06:42:36 0.014 sec 2 0.46667 2924.37776

## 4 2020-06-20 06:42:36 0.015 sec 3 0.31111 2924.37776

## 5 2020-06-20 06:42:36 0.017 sec 4 0.15556 2924.37776

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:36 0.040 sec 24 0.10564 2580.67789

## 26 2020-06-20 06:42:36 0.042 sec 25 0.11092 2577.74155

## 27 2020-06-20 06:42:36 0.043 sec 26 0.11647 2570.13709

## 28 2020-06-20 06:42:36 0.044 sec 27 0.12229 2567.53358

## 29 2020-06-20 06:42:36 0.046 sec 28 0.12841 2562.57976

## 30 2020-06-20 06:42:36 0.047 sec 29 0.13483 2559.79131

## Iteration 20 of 75 26.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 207 10 L1 Quadratic 1 4 NA NA

## objective

## 207 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_39

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 1928.00010

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 907.1727

## Misclassification Error (Categorical): 99

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:37 0.022 sec 0 1.05000 3662.47013

## 2 2020-06-20 06:42:37 0.025 sec 1 0.70000 3662.47013

## 3 2020-06-20 06:42:37 0.029 sec 2 0.46667 3662.47013

## 4 2020-06-20 06:42:37 0.033 sec 3 0.49000 2923.23653

## 5 2020-06-20 06:42:37 0.037 sec 4 0.51450 2793.94544

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:37 0.118 sec 24 0.14085 1954.54243

## 26 2020-06-20 06:42:37 0.121 sec 25 0.14790 1943.67896

## 27 2020-06-20 06:42:37 0.125 sec 26 0.09860 1943.67896

## 28 2020-06-20 06:42:37 0.129 sec 27 0.10353 1932.56595

## 29 2020-06-20 06:42:37 0.133 sec 28 0.10870 1928.00010

## 30 2020-06-20 06:42:37 0.137 sec 29 0.07247 1928.00010

## Iteration 21 of 75 28%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 240 10 Quadratic L1 4 4 NA NA

## objective

## 240 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_41

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.11414 1911.97922

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 898.8717

## Misclassification Error (Categorical): 125

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:39 0.028 sec 0 1.05000 3703.59151

## 2 2020-06-20 06:42:39 0.032 sec 1 0.70000 3703.59151

## 3 2020-06-20 06:42:39 0.037 sec 2 0.46667 3703.59151

## 4 2020-06-20 06:42:39 0.041 sec 3 0.49000 3071.89741

## 5 2020-06-20 06:42:39 0.045 sec 4 0.51450 2805.45177

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:39 0.118 sec 24 0.14085 1977.93400

## 26 2020-06-20 06:42:39 0.123 sec 25 0.14790 1950.59167

## 27 2020-06-20 06:42:39 0.126 sec 26 0.15529 1941.76701

## 28 2020-06-20 06:42:39 0.130 sec 27 0.10353 1941.76701

## 29 2020-06-20 06:42:39 0.133 sec 28 0.10870 1922.02060

## 30 2020-06-20 06:42:39 0.136 sec 29 0.11414 1911.97922

## Iteration 22 of 75 29.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 159 10 Quadratic L1 4 1 NA NA

## objective

## 159 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_43

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 1368.12017

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 677.1681

## Misclassification Error (Categorical): 87

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:40 0.022 sec 0 1.05000 3007.94952

## 2 2020-06-20 06:42:40 0.027 sec 1 0.70000 3007.94952

## 3 2020-06-20 06:42:40 0.032 sec 2 0.46667 3007.94952

## 4 2020-06-20 06:42:41 0.037 sec 3 0.49000 2659.83009

## 5 2020-06-20 06:42:41 0.042 sec 4 0.51450 2348.06378

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:41 0.108 sec 24 0.14085 1395.58157

## 26 2020-06-20 06:42:41 0.111 sec 25 0.09390 1395.58157

## 27 2020-06-20 06:42:41 0.115 sec 26 0.09860 1373.02331

## 28 2020-06-20 06:42:41 0.119 sec 27 0.10353 1368.35303

## 29 2020-06-20 06:42:41 0.123 sec 28 0.10870 1368.12017

## 30 2020-06-20 06:42:41 0.126 sec 29 0.07247 1368.12017

## Iteration 23 of 75 30.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 234 10 L1 Quadratic 4 4 NA NA

## objective

## 234 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_45

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.07247 3097.74184

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1577.28

## Misclassification Error (Categorical): 258

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:42 0.018 sec 0 1.05000 4888.30984

## 2 2020-06-20 06:42:42 0.023 sec 1 1.10250 4523.25134

## 3 2020-06-20 06:42:42 0.027 sec 2 0.73500 4523.25134

## 4 2020-06-20 06:42:42 0.031 sec 3 0.49000 4523.25134

## 5 2020-06-20 06:42:42 0.037 sec 4 0.51450 4062.66421

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:42 0.105 sec 24 0.14085 3125.30525

## 26 2020-06-20 06:42:42 0.109 sec 25 0.14790 3122.95615

## 27 2020-06-20 06:42:42 0.112 sec 26 0.09860 3122.95615

## 28 2020-06-20 06:42:42 0.115 sec 27 0.10353 3103.47638

## 29 2020-06-20 06:42:42 0.118 sec 28 0.10870 3097.74184

## 30 2020-06-20 06:42:42 0.121 sec 29 0.07247 3097.74184

## Iteration 24 of 75 32%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 161 5 L1 L1 4 1 NA NA

## objective

## 161 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_47

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 2416.52572

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1419.86

## Misclassification Error (Categorical): 261

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:44 0.024 sec 0 1.05000 4449.74084

## 2 2020-06-20 06:42:44 0.026 sec 1 0.70000 4449.74084

## 3 2020-06-20 06:42:44 0.029 sec 2 0.73500 3723.18153

## 4 2020-06-20 06:42:44 0.031 sec 3 0.49000 3723.18153

## 5 2020-06-20 06:42:44 0.034 sec 4 0.51450 3319.63788

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:44 0.094 sec 24 0.08943 2447.75348

## 26 2020-06-20 06:42:44 0.097 sec 25 0.09390 2442.79407

## 27 2020-06-20 06:42:44 0.099 sec 26 0.06260 2442.79407

## 28 2020-06-20 06:42:44 0.101 sec 27 0.06573 2429.09068

## 29 2020-06-20 06:42:44 0.103 sec 28 0.06902 2416.52572

## 30 2020-06-20 06:42:44 0.105 sec 29 0.04601 2416.52572

## Iteration 25 of 75 33.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 216 10 L1 L1 1 4 NA NA

## objective

## 216 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_49

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.17977 1772.02475

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 718.659

## Misclassification Error (Categorical): 67

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:45 0.017 sec 0 1.05000 3769.59059

## 2 2020-06-20 06:42:45 0.020 sec 1 0.70000 3769.59059

## 3 2020-06-20 06:42:45 0.025 sec 2 0.46667 3769.59059

## 4 2020-06-20 06:42:45 0.029 sec 3 0.49000 3196.92762

## 5 2020-06-20 06:42:45 0.033 sec 4 0.51450 2852.78647

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:45 0.096 sec 24 0.34941 1807.26389

## 26 2020-06-20 06:42:45 0.099 sec 25 0.23294 1807.26389

## 27 2020-06-20 06:42:45 0.102 sec 26 0.24458 1781.30373

## 28 2020-06-20 06:42:45 0.106 sec 27 0.25681 1773.04977

## 29 2020-06-20 06:42:45 0.110 sec 28 0.26965 1772.02475

## 30 2020-06-20 06:42:45 0.114 sec 29 0.17977 1772.02475

## Iteration 26 of 75 34.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 135 10 L1 L1 1 1 NA NA

## objective

## 135 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_51

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.11414 1252.36736

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 611.2258

## Misclassification Error (Categorical): 50

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:47 0.023 sec 0 1.05000 3107.65051

## 2 2020-06-20 06:42:47 0.028 sec 1 0.70000 3107.65051

## 3 2020-06-20 06:42:47 0.033 sec 2 0.46667 3107.65051

## 4 2020-06-20 06:42:47 0.037 sec 3 0.49000 3045.91653

## 5 2020-06-20 06:42:47 0.040 sec 4 0.51450 2703.91261

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:47 0.110 sec 24 0.22184 1289.71785

## 26 2020-06-20 06:42:47 0.113 sec 25 0.14790 1289.71785

## 27 2020-06-20 06:42:47 0.116 sec 26 0.15529 1274.07942

## 28 2020-06-20 06:42:47 0.119 sec 27 0.16306 1265.16554

## 29 2020-06-20 06:42:47 0.122 sec 28 0.10870 1265.16554

## 30 2020-06-20 06:42:47 0.125 sec 29 0.11414 1252.36736

## Iteration 27 of 75 36%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 101 5 None L1 0 1 NA NA

## objective

## 101 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_53

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.33445 1177.62532

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1434.829

## Misclassification Error (Categorical): 95

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:48 0.012 sec 0 1.05000 2385.75844

## 2 2020-06-20 06:42:48 0.014 sec 1 0.70000 2385.75844

## 3 2020-06-20 06:42:48 0.015 sec 2 0.46667 2385.75844

## 4 2020-06-20 06:42:48 0.017 sec 3 0.31111 2385.75844

## 5 2020-06-20 06:42:48 0.019 sec 4 0.15556 2385.75844

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:48 0.056 sec 24 0.41274 1286.26662

## 26 2020-06-20 06:42:48 0.058 sec 25 0.43337 1259.55898

## 27 2020-06-20 06:42:48 0.060 sec 26 0.45504 1237.60458

## 28 2020-06-20 06:42:48 0.061 sec 27 0.47779 1228.32777

## 29 2020-06-20 06:42:48 0.063 sec 28 0.31853 1228.32777

## 30 2020-06-20 06:42:48 0.065 sec 29 0.33445 1177.62532

## Iteration 28 of 75 37.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 149 5 Quadratic Quadratic 4 1 NA NA

## objective

## 149 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_55

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 1931.61877

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1346.045

## Misclassification Error (Categorical): 173

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:50 0.016 sec 0 1.05000 3016.58214

## 2 2020-06-20 06:42:50 0.017 sec 1 0.70000 3016.58214

## 3 2020-06-20 06:42:50 0.019 sec 2 0.46667 3016.58214

## 4 2020-06-20 06:42:50 0.021 sec 3 0.49000 2851.17913

## 5 2020-06-20 06:42:50 0.023 sec 4 0.51450 2507.73659

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:50 0.064 sec 24 0.08943 1951.85283

## 26 2020-06-20 06:42:50 0.065 sec 25 0.09390 1945.27278

## 27 2020-06-20 06:42:50 0.067 sec 26 0.06260 1945.27278

## 28 2020-06-20 06:42:50 0.069 sec 27 0.06573 1936.11036

## 29 2020-06-20 06:42:50 0.071 sec 28 0.06902 1931.61877

## 30 2020-06-20 06:42:50 0.073 sec 29 0.04601 1931.61877

## Iteration 29 of 75 38.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 33 10 Quadratic None 1 0 NA NA

## objective

## 33 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_57

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.17977 681.11182

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 559.3386

## Misclassification Error (Categorical): 44

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:52 0.042 sec 0 1.05000 2777.66708

## 2 2020-06-20 06:42:52 0.047 sec 1 0.70000 2777.66708

## 3 2020-06-20 06:42:52 0.055 sec 2 0.46667 2777.66708

## 4 2020-06-20 06:42:52 0.060 sec 3 0.31111 2777.66708

## 5 2020-06-20 06:42:52 0.064 sec 4 0.32667 2009.62535

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:52 0.143 sec 24 0.22184 743.00722

## 26 2020-06-20 06:42:52 0.146 sec 25 0.23294 722.05962

## 27 2020-06-20 06:42:52 0.150 sec 26 0.24458 720.93798

## 28 2020-06-20 06:42:52 0.154 sec 27 0.16306 720.93798

## 29 2020-06-20 06:42:52 0.157 sec 28 0.17121 692.39390

## 30 2020-06-20 06:42:52 0.161 sec 29 0.17977 681.11182

## Iteration 30 of 75 40%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 58 3 Quadratic None 4 0 NA NA

## objective

## 58 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_59

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.03451 2137.97996

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1342.545

## Misclassification Error (Categorical): 355

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:53 0.011 sec 0 1.05000 2521.45078

## 2 2020-06-20 06:42:53 0.012 sec 1 0.70000 2521.45078

## 3 2020-06-20 06:42:53 0.014 sec 2 0.46667 2521.45078

## 4 2020-06-20 06:42:53 0.015 sec 3 0.31111 2521.45078

## 5 2020-06-20 06:42:53 0.016 sec 4 0.15556 2521.45078

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:53 0.039 sec 24 0.04259 2148.31707

## 26 2020-06-20 06:42:53 0.041 sec 25 0.04472 2147.07827

## 27 2020-06-20 06:42:53 0.042 sec 26 0.04695 2145.09626

## 28 2020-06-20 06:42:53 0.043 sec 27 0.03130 2145.09626

## 29 2020-06-20 06:42:53 0.045 sec 28 0.03287 2138.99783

## 30 2020-06-20 06:42:53 0.046 sec 29 0.03451 2137.97996

## Iteration 31 of 75 41.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 231 10 Quadratic Quadratic 4 4 NA NA

## objective

## 231 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_61

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.02921 2299.44292

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1194.597

## Misclassification Error (Categorical): 129

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:55 0.030 sec 0 1.05000 3706.85791

## 2 2020-06-20 06:42:55 0.034 sec 1 0.70000 3706.85791

## 3 2020-06-20 06:42:55 0.039 sec 2 0.73500 3491.52619

## 4 2020-06-20 06:42:55 0.044 sec 3 0.77175 3226.04579

## 5 2020-06-20 06:42:55 0.048 sec 4 0.81034 3219.54303

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:55 0.142 sec 24 0.08943 2319.50966

## 26 2020-06-20 06:42:55 0.146 sec 25 0.05962 2319.50966

## 27 2020-06-20 06:42:55 0.150 sec 26 0.06260 2306.58959

## 28 2020-06-20 06:42:55 0.153 sec 27 0.04173 2306.58959

## 29 2020-06-20 06:42:55 0.156 sec 28 0.04382 2299.44292

## 30 2020-06-20 06:42:55 0.160 sec 29 0.02921 2299.44292

## Iteration 32 of 75 42.7%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 152 5 L1 Quadratic 4 1 NA NA

## objective

## 152 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_63

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.04601 2564.77247

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1471.568

## Misclassification Error (Categorical): 256

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:56 0.016 sec 0 1.05000 4487.48202

## 2 2020-06-20 06:42:56 0.019 sec 1 0.70000 4487.48202

## 3 2020-06-20 06:42:56 0.021 sec 2 0.73500 3612.17655

## 4 2020-06-20 06:42:56 0.024 sec 3 0.49000 3612.17655

## 5 2020-06-20 06:42:56 0.025 sec 4 0.51450 3424.12459

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:56 0.068 sec 24 0.08943 2593.95334

## 26 2020-06-20 06:42:56 0.070 sec 25 0.09390 2586.73186

## 27 2020-06-20 06:42:56 0.072 sec 26 0.06260 2586.73186

## 28 2020-06-20 06:42:56 0.074 sec 27 0.06573 2572.14357

## 29 2020-06-20 06:42:56 0.077 sec 28 0.06902 2564.77247

## 30 2020-06-20 06:42:56 0.079 sec 29 0.04601 2564.77247

## Iteration 33 of 75 44%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 181 3 None L1 0 4 NA NA

## objective

## 181 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_65

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.17977 2081.97962

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1562.542

## Misclassification Error (Categorical): 263

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:42:58 0.011 sec 0 1.05000 2981.05265

## 2 2020-06-20 06:42:58 0.012 sec 1 0.70000 2981.05265

## 3 2020-06-20 06:42:58 0.014 sec 2 0.46667 2981.05265

## 4 2020-06-20 06:42:58 0.016 sec 3 0.31111 2981.05265

## 5 2020-06-20 06:42:58 0.018 sec 4 0.32667 2961.15593

##

## ---

## timestamp duration iterations step_size objective

## 25 2020-06-20 06:42:58 0.050 sec 24 0.22184 2147.31713

## 26 2020-06-20 06:42:58 0.052 sec 25 0.23294 2112.08129

## 27 2020-06-20 06:42:58 0.053 sec 26 0.24458 2101.64469

## 28 2020-06-20 06:42:58 0.056 sec 27 0.25681 2092.79617

## 29 2020-06-20 06:42:58 0.057 sec 28 0.26965 2081.97962

## 30 2020-06-20 06:42:58 0.059 sec 29 0.17977 2081.97962

## Iteration 34 of 75 45.3%

## k regularization_x regularization_y gamma_x gamma_y error_num error_cat

## 130 3 Quadratic L1 1 1 NA NA

## objective

## 130 NA

## Model Details:

## ==============

##

## H2ODimReductionModel: glrm

## Model Key: GLRM_model_R_1592660518623_67

## Model Summary:

## number_of_iterations final_step_size final_objective_value

## 1 30 0.03451 2144.58357

##

## H2ODimReductionMetrics: glrm

## ** Reported on training data. **

##

## Sum of Squared Error (Numeric): 1309.475

## Misclassification Error (Categorical): 355

## Number of Numeric Entries: 1833

## Number of Categorical Entries: 1143

##

##

##

## Scoring History:

## timestamp duration iterations step_size objective

## 1 2020-06-20 06:43:00 0.011 sec 0 1.05000 2507.76069

## 2 2020-06-20 06:43:00 0.014 sec 1 0.70000 2507.76069